Based on the previous introduction, this paper proposes the following hypothesis: different facial colors play an important role in the prediction and classification of TCM syndrome types of hypertension.

To verify this hypothesis, this work first determines the facial regions considered most relevant to TCM syndromes via the Analytic Hierarchy Process (AHP) fuzzy comprehensive evaluation method. Intelligent diagnosis models are then designed to extract distinguishing color features from the selected facial regions and predict the TCM syndromes. Two types of diagnosis model are proposed, and the overall pipeline is shown in Figure 1.

Figure 1 Overall pipeline of TCM diagnosis model.

Intuitively, deep learning techniques might be a suitable way to extract distinguishing color information, given their strong capacity for abstraction and representation. However, several factors could restrict the performance of a diagnosis model based on deep neural networks (DNN):

- Color differences between TCM syndromes are subtle in the Red, Green, Blue (RGB) color space. Whether a DNN-based diagnosis model can capture such subtle differences requires further validation.

- Because facial information is difficult to collect from patients with hypertension, the training samples are limited, which makes it even harder to extract the subtle differences in RGB color space.

Considering these factors, explicit hand-crafted feature extraction might be another feasible approach. Specifically, we also developed a color spectral decomposition (CSD) algorithm to capture the subtle distinguishing color features, and employ traditional regression tools (e.g., random forest, RF) to aggregate the features and predict the TCM syndromes. The AHP evaluation method and the two diagnosis models are described as follows:

Fuzzy evaluation of TCM diagnostic knowledge based on AHP algorithm

Traditional Chinese medicine holds that color changes in different parts of the human body indicate different diseases, and that the face, nose, cheeks, lips, and eyes correspond to different organs and can be used to diagnose and predict diseases.28 The Lingshu chapter "Five Colors" points out that the location and severity of a disease can be judged by observing the depth of the complexion: the position of the sickly complexion indicates which viscera or limb segment is affected, a light complexion indicates a mild disease, and a dark complexion indicates a serious one. Hypertension manifests in different parts of the body. Jing Sun et al. (2014)29 proposed that patients with hypertension could be treated according to the characteristics of the forehead, nose, ear, cheek, tongue, eye and hand. Different syndromes of hypertension show different color features in facial regions.30 The identification of TCM syndrome types of hypertension mainly depends on the diagnostic experience of clinical experts. Because normative, quantitative and objective criteria are lacking, it is difficult to achieve intelligent detection and monitoring of the progression of hypertension based on the combination of diseases and syndromes. Based on previous studies, this paper uses the AHP fuzzy comprehensive evaluation method to determine the facial regions with significant color features of hypertension. The algorithm adopted in this paper is as follows:

The evaluation index system of the facial partition of patients with hypertension is composed of a first and a second index layer. The first-level index set is

$$U = \{U_1, U_2, \dots, U_n\}.$$

Let the first-level index $U_i$ have $m_i$ second-level indexes, denoted as $U_i = \{u_{i1}, u_{i2}, \dots, u_{im_i}\}$, with $u_{ij}$ as the $j$th second-level index of $U_i$.

This paper adopts the AHP method and sets the weight of $U_i$ as $w_i$; the first-level weight set is then

$$W = (w_1, w_2, \dots, w_n).$$

Let the weight of the second-level index $u_{ij}$ be $w_{ij}$; the second-level weight set is then

$$W_i = (w_{i1}, w_{i2}, \dots, w_{im_i}).$$

Evaluation grade is the basis for evaluation and measurement of facial partition. The evaluation set is divided into 5 grades, which are expressed as $V = \{v_1, v_2, v_3, v_4, v_5\}$ in the indicator system. That is, the weight ratio of the secondary indicators will be calculated according to the selection of the team of clinical experts on hypertension.

According to the judgment of experts, each factor $u_{ij}$ of $U_i$ has a degree of membership to the five review levels $v_1, \dots, v_5$, and the evaluation results of the $m_i$ factors can be expressed as a fuzzy matrix $R_i$ of order $m_i \times 5$. $R_i = (r_{ijk})_{m_i \times 5}$ is the single-factor evaluation matrix of the first-level index fuzzy comprehensive evaluation of $U_i$, where $r_{ijk}$ is the degree of membership of $u_{ij}$ which is rated as grade $v_k$. According to the determined weight set $W_i$, the first-level index fuzzy comprehensive evaluation matrix of $U_i$ is:

$$B_i = W_i \circ R_i = (b_{i1}, b_{i2}, \dots, b_{i5}).$$

Among them, $\circ$ is a composition operator.

The single factor evaluation matrix $R$ of comprehensive fuzzy evaluation is composed of the fuzzy judgment matrix $B_i$ of the first-level index. The comprehensive evaluation model is:

$$R = \begin{pmatrix} B_1 \\ B_2 \\ \vdots \\ B_n \end{pmatrix}.$$

Therefore, the second-level fuzzy comprehensive evaluation set is:

$$B = W \circ R = (b_1, b_2, \dots, b_5).$$

Finally, according to the index with the largest weight in the evaluation results, the most suitable facial regions for feature extraction were determined, namely the cheeks, forehead and nose.
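The two-level evaluation described above can be illustrated with a minimal Python sketch. The weights and membership values below are hypothetical placeholders, not the expert data used in this paper, and the composition operator is assumed to be the weighted-average operator, which is one common choice that the text does not pin down.

```python
def compose(weights, matrix):
    """Weighted-average composition: result[k] = sum_j weights[j] * matrix[j][k]."""
    n_grades = len(matrix[0])
    return [sum(w * row[k] for w, row in zip(weights, matrix)) for k in range(n_grades)]


def two_level_evaluation(first_weights, second_weights, memberships):
    """Return the overall grade-membership vector of one candidate region.

    first_weights  : weights of the first-level indexes (sum to 1)
    second_weights : one weight list per first-level index (each sums to 1)
    memberships    : one matrix per first-level index; row j holds the degrees of
                     membership of the j-th second-level index to the 5 grades
    """
    # First level: evaluate every first-level index from its second-level indexes.
    first_level = [compose(w, r) for w, r in zip(second_weights, memberships)]
    # Second level: combine the first-level results with the first-level weights.
    return compose(first_weights, first_level)


# Hypothetical example: 2 first-level indexes with 2 second-level indexes each.
W = [0.6, 0.4]
W_sub = [[0.5, 0.5], [0.7, 0.3]]
R = [
    [[0.6, 0.2, 0.1, 0.1, 0.0], [0.4, 0.4, 0.1, 0.1, 0.0]],
    [[0.3, 0.3, 0.2, 0.1, 0.1], [0.5, 0.2, 0.2, 0.1, 0.0]],
]
B = two_level_evaluation(W, W_sub, R)
best_grade = max(range(len(B)), key=B.__getitem__)  # index of the dominant grade
```

Because every weight list and every membership row sums to 1, the resulting vector also sums to 1, so its entries can be read directly as the degrees of membership of the candidate region to the five grades.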

TCM diagnosis model based on CSD+RF

An elaborately designed feature extraction method, namely color spectral decomposition (CSD), is proposed to explicitly capture the subtle differences in color information between the various types of TCM syndromes. The overall procedure is shown in the upper part of Figure 1, and the details are as follows.31

Facial region extraction: According to the AHP results in Section II, the color information in the cheeks, forehead and nose is most relevant to the TCM syndromes. An Ensemble of Regression Trees32 (implemented in Dlib33) is employed to automatically localize and extract 4 facial regions, namely A, B, C, and D (covering the cheeks, forehead and nose). Since the facial images are captured by standardized equipment and need no calibration, the Ensemble of Regression Trees is preferable for its light weight and robustness.

Specifically, 68 landmarks are localized by Dlib33 (Figure 2a), and based on several of them (the 1st, 3rd, 13th, 15th, 21st, 22nd, 27th, 28th and 29th), color information is extracted from the four specific facial regions, as illustrated in Figure 2b.

Figure 2 Face detection and specific facial region extraction.

Color spectral decomposition: After the facial regions are extracted, the proposed color spectral decomposition (CSD) is applied to extract the subtle distinguishing color features in the 4 facial regions. Given a pixel $p$ with color index $[r, g, b]$ in RGB color space, the CSD method this paper proposes aims to construct a sparse representation of $p$ that transforms the three-dimensional color index into a spectral vector of dimension $N$, where $N \gg 2$, i.e., $[r, g, b] \rightarrow [I_1, I_2, \dots, I_N]$.

The color index $[r, g, b]$ of the pixel is first converted into HSV color space as $[h, s, v]$, where $h$, $s$ and $v$ denote the hue, saturation and luminance respectively;19 the values of $s$ and $v$ are in the range [0,1] and $h$ is an angle on the hue circle, as illustrated in Figure 3.

Figure 3 An illustration of HSV color space.

$N$ solid colors with uniformly spaced hues are selected as anchor points, denoted as $c_k$, where $k = 1, 2, \dots, N$. The intensity of $p$ projected to each $c_k$ is calculated as described in Figure 4.

Figure 4 An illustration of intensity calculation.

As shown in Figure 4, the red circle denotes all the solid colors in HSV color space, i.e., $s = v = 1$. The $N$ solid colors used as anchors, $c_1, \dots, c_N$, are represented by blue circles. The intensity of $p$ projected to a solid color $c_k$ is defined as a function of the distance between $p$ and $c_k$

. Supposing the color index of p is

and

is

, their distance then can be derived as

. The analysis above is focused on colors with full luminance, i.e.,

. For an arbitrary p with color index

, its distance can be derived as

.

A function $f(\cdot)$ is employed to map the distance between $p$ and an anchor color $c_k$ to the intensity of $p$ observed on $c_k$. Therefore, the intensity of $p$ projected to $c_k$, denoted as $I_k$, can be derived as

(1)

λ is employed to control the attenuation of intensity. For instance, if the saturation and luminance of p are close to 1, its intensity should concentrate on its nearest solid colors. On the contrary, if its saturation and luminance are significantly smaller than 1, its intensity should spread over a larger range of solid colors. In view of that, we set

where

is set to 0.1. An $N$-dimensional spectral vector $[I_1, I_2, \dots, I_N]$ is obtained by iterating over all anchor points.
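The projection of one pixel onto the anchor colors can be sketched in Python as follows. This is only an illustration of the idea, not the formulation of Eq.(1): the distance (Euclidean distance in the polar hue/saturation plane), the attenuation function (an exponential decay) and the rule that widens λ for desaturated or dark pixels are all assumed stand-ins, and the names `spectral_vector` and `lam0` are hypothetical.

```python
import colorsys
import math


def spectral_vector(rgb, n_anchors=100, lam0=0.1):
    """Project one RGB pixel (components in [0, 1]) onto n_anchors solid colors.

    Assumed stand-ins (not the exact formulas of this paper):
      * distance: Euclidean distance in the polar (hue, saturation * value) plane
        between the pixel and an anchor lying on the unit circle;
      * intensity: exp(-distance / lam), with lam = lam0 / (s * v), so that
        desaturated or dark pixels spread over more anchors.
    """
    h, s, v = colorsys.rgb_to_hsv(*rgb)  # colorsys returns h, s, v in [0, 1]
    radius = s * v
    lam = lam0 / max(radius, 1e-6)       # guard against division by zero
    px = radius * math.cos(2 * math.pi * h)
    py = radius * math.sin(2 * math.pi * h)
    vector = []
    for k in range(n_anchors):
        angle = 2 * math.pi * k / n_anchors  # uniformly spaced anchor hues
        dist = math.hypot(px - math.cos(angle), py - math.sin(angle))
        vector.append(math.exp(-dist / lam))
    return vector


pure_red = spectral_vector((1.0, 0.0, 0.0), n_anchors=12)
pale_red = spectral_vector((1.0, 0.6, 0.6), n_anchors=12)
```

Under these assumptions the fully saturated pixel concentrates almost all of its intensity on the nearest anchor, while the pale pixel spreads its intensity over neighbouring anchors, which is the qualitative behaviour that λ is meant to control.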

Supposing that four image patches

represent 4 different facial regions of a facial image respectively, each region is transformed via color spectral decomposition pixel-wise. For instance, all pixels

(

and denotes the spatial index) with color index [

] are converted into HSV color space, whose corresponding spectral vector

is calculated according to Eq.(1). It should be noted that the hue of facial regions always concentrates in a limited range, from about 0.03

to 0.1

based on the observation of approximately 250 subjects. Therefore, the hue of each pixel p, denoted as $h_p$, is mapped to the range [0,1] by

. A demonstration of the spectral vectors extracted from the four facial regions is shown in Figure 5, in which the horizontal axis denotes the hue of the solid colors used as anchors and the vertical axis denotes the intensity. N is set to 100 to show the detailed features of the spectral vectors; other values can also be used. The spectral vectors of the same region are plotted in a single sub-figure.

Figure 5 An illustration of spectral vectors in different regions.

The central spectral vector of region $i$, denoted as $\bar{I}_i$, is calculated by simply averaging all spectral vectors in the given region after outlier exclusion, as in Eq.(2):

$$\bar{I}_i = \frac{1}{M_i} \sum_{p \in \Omega_i} I_p \qquad (2)$$

where $I_p$ is the spectral vector of pixel $p$, $\Omega_i$ denotes region $i$ after outlier exclusion, and $M_i$ denotes the number of pixels in $\Omega_i$. The outlier exclusion procedure eliminates singular spectral vectors that differ greatly from the mean vector; 20% of the pixels (or spectral vectors) are excluded. Finally, the kurtosis, skewness, mean and standard deviation of $\bar{I}_i$ are extracted as statistical features.
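The averaging with outlier exclusion and the four statistical features can be sketched as below. The exclusion criterion (dropping the 20% of vectors farthest from the mean in Euclidean distance) and the use of population moments with non-excess kurtosis are assumptions made for illustration; the function names are hypothetical.

```python
import math


def central_spectral_vector(vectors, exclude_ratio=0.2):
    """Average the spectral vectors of one region after dropping the farthest ones."""
    dim = len(vectors[0])
    mean = [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]
    # Rank vectors by Euclidean distance to the mean and keep the closest 80%.
    ranked = sorted(vectors, key=lambda v: math.dist(v, mean))
    kept = ranked[: max(1, round(len(vectors) * (1 - exclude_ratio)))]
    return [sum(v[d] for v in kept) / len(kept) for d in range(dim)]


def statistical_features(vector):
    """Return (kurtosis, skewness, mean, standard deviation) of one vector.

    Population moments are used; the kurtosis is the non-excess (Pearson) form.
    The vector must not be constant, otherwise the variance is zero.
    """
    n = len(vector)
    mean = sum(vector) / n
    m2 = sum((x - mean) ** 2 for x in vector) / n
    m3 = sum((x - mean) ** 3 for x in vector) / n
    m4 = sum((x - mean) ** 4 for x in vector) / n
    std = math.sqrt(m2)
    return m4 / m2 ** 2, m3 / std ** 3, mean, std


# Toy region: four identical vectors and one outlier.
region = [[1.0, 2.0, 3.0]] * 4 + [[100.0, 100.0, 100.0]]
central = central_spectral_vector(region)
kurt, skew, avg, std = statistical_features(central)
```

In the toy region the single outlier is discarded, so the central vector equals the repeated vector; with real data each of the four regions would contribute four statistics.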

TCM syndrome classification and quantification based on machine learning: The kurtosis, skewness, mean and standard deviation of the four central spectral vectors derived from the four facial regions form a 16-dimensional feature vector. It serves as the input feature and is fed into a machine learning tool to predict the type of syndrome and the level of hypertension. The overall flowchart is depicted in Figure 6. The random forest algorithm [1] serves as the machine learning tool.

Figure 6 The overall flowchart of the model of quantification of syndromes of hypertension.

The prediction comprises two stages. In the first stage, taking the 16-D feature vector as input, random forest algorithm A classifies the given facial image into one of the four types of TCM syndromes or as healthy. In the second stage, a centroid is obtained for each syndrome type and level by averaging all the input feature vectors of that type and level in the training set. The Euclidean distance between the input features of the patient to be predicted and the centroids of the three levels of the corresponding syndrome type is then calculated, and the closest level is taken as the level of the patient. Note that if the first-stage prediction is healthy, the second stage is skipped.
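The second stage amounts to a nearest-centroid rule within the predicted syndrome type. A minimal sketch follows; it uses toy 2-D features instead of the 16-D features, the labels and values are made up, and the function names are hypothetical. The first-stage classifier is not reproduced here.

```python
import math
from collections import defaultdict


def fit_centroids(features, syndromes, levels):
    """Average the training feature vectors of each (syndrome, level) pair."""
    groups = defaultdict(list)
    for x, s, l in zip(features, syndromes, levels):
        groups[(s, l)].append(x)
    return {
        key: [sum(col) / len(rows) for col in zip(*rows)]
        for key, rows in groups.items()
    }


def predict_level(x, syndrome, centroids):
    """Return the level whose centroid (within the given syndrome) is closest to x."""
    if syndrome == "healthy":
        return None  # stage II is skipped for subjects predicted as healthy
    candidates = {l: c for (s, l), c in centroids.items() if s == syndrome}
    return min(candidates, key=lambda l: math.dist(x, candidates[l]))


# Toy training set for one syndrome type with three levels.
train_x = [[0.0, 0.0], [0.2, 0.0], [1.0, 1.0], [1.2, 1.0], [3.0, 3.0]]
train_s = ["OLF"] * 5
train_l = [1, 1, 2, 2, 3]
centroids = fit_centroids(train_x, train_s, train_l)

level = predict_level([0.9, 1.1], "OLF", centroids)
skipped = predict_level([0.9, 1.1], "healthy", centroids)
```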

TCM diagnosis model based on deep neural networks (DNN)

As shown in the lower part of Figure 1, a TCM diagnosis model based on deep neural networks (DNN) is designed for comparison. Considering the limited training samples, the DNN framework is trained in two stages. First, an hourglass convolutional neural network (CNN) is trained in a self-supervised way to learn a low-dimensional representation in the feature domain. Second, the CNN-extracted features are used to classify the TCM syndrome types via two fully connected layers. The detailed pipeline of the DNN-based diagnosis model is shown in Figure 7.

Figure 7 The flowchart of TCM diagnosis model based on DNN.

The input of the DNN is the channel-wise concatenation of Regions A, B, C and D. Each region is resized to 128x128x3 in RGB format, so the input size is 128x128x12. In training stage I, the input X is fed into a self-supervised CNN that contains an encoder and a decoder, similar to Ronneberger O, et al.32 The encoder comprises 4 down-blocks, each of which contains two convolutional layers with kernel size 3x3 activated by Leaky ReLU,34 together with a batch normalization layer and a max-pooling layer of size 2x2. After the 4th down-block, the feature map goes through an inception layer whose convolutional kernel size is 1x1. The decoder mirrors the encoder and comprises 4 up-blocks. The structure of an up-block is similar to that of a down-block; the difference is that the first layer of an up-block is an up-sampling layer acting as the inverse of max-pooling.

In training stage II, the pre-trained encoder is fine-tuned with the labels indicating the syndrome types. The feature maps after the inception layer are max-pooled and min-pooled channel-wise to obtain a feature vector of size 512x1, which is then fed into the two fully connected layers to predict the TCM syndromes. The training of the second stage is end-to-end, which means that the parameters of the encoder are also trainable in training stage II. Since the training samples are limited, data augmentation is applied by rotating the facial regions by 90, 180 and 270 degrees.
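The rotation-based augmentation can be illustrated with a small, dependency-free sketch. A 2x2 integer grid stands in for a 128x128 facial region, the helper names are hypothetical, and the rotation direction (counter-clockwise) is an arbitrary choice since all three rotations are generated anyway.

```python
def rot90(patch):
    """Rotate a 2-D patch (list of rows) by 90 degrees counter-clockwise."""
    return [list(row) for row in zip(*patch)][::-1]


def augment(patch):
    """Return the patch together with its 90, 180 and 270 degree rotations."""
    views = [patch]
    for _ in range(3):
        views.append(rot90(views[-1]))
    return views


patch = [[1, 2], [3, 4]]
views = augment(patch)

# Each original sample yields 4 views, so the 128 training samples described in
# the training protocol become 512 samples after augmentation.
n_augmented = 128 * len(views)
```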

Training protocol

In this paper, a total of 250 samples are tested: 50 patients with overabundant liver-fire syndrome (OLF), 50 patients with Yin deficiency with Yang hyperactivity (YDYH), 50 patients with excessive accumulation of phlegm-dampness (EAPD), 50 patients with Yin and Yang deficiency syndrome (YYD), and 50 healthy persons. The distribution of TCM syndromes and blood pressure levels is shown in Table 1.

| TCM syndrome types | Number of samples | Samples at I degree | Samples at II degree | Samples at III degree |
|---|---|---|---|---|
| Overabundant liver-fire syndrome | 50 | 24 | 18 | 8 |
| Yin deficiency with Yang hyperactivity | 50 | 21 | 16 | 13 |
| Excessive accumulation of phlegm-dampness | 50 | 17 | 19 | 14 |
| Yin and Yang deficiency syndrome | 50 | 12 | 21 | 17 |
| Healthy persons (no syndrome) | 50 | - | - | - |

Table 1 Distribution of TCM Syndromes and Blood Pressure Levels

For the training of both the CSD+RF and the DNN diagnosis models, about 50% of the samples of each type and level are randomly selected as the training set and the remaining data serve as the test set. When the number of samples in a group is odd, the training share is rounded up to an integer. Thus, the number of training samples in the five labels OLF, YDYH, EAPD, YYD and healthy persons is 25, 26, 26, 26 and 25 respectively. In total, 128 samples are used as the training set and 122 samples as the test set.
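The training-set sizes follow directly from Table 1 and the round-up rule, as the short check below shows. The dictionary layout and variable names are illustrative only; the counts are those of Table 1.

```python
import math

# Samples per blood-pressure level (I, II, III) from Table 1.
# Healthy persons have no level, so they form a single group of 50.
table1 = {
    "OLF": [24, 18, 8],
    "YDYH": [21, 16, 13],
    "EAPD": [17, 19, 14],
    "YYD": [12, 21, 17],
    "Healthy": [50],
}

# Half of every (type, level) group goes to training; odd counts are rounded up.
train = {name: sum(math.ceil(n / 2) for n in counts) for name, counts in table1.items()}
test = {name: sum(counts) - train[name] for name, counts in table1.items()}

total_train = sum(train.values())
total_test = sum(test.values())
```

For example, the OLF groups of 24, 18 and 8 samples contribute 12, 9 and 4 training samples, giving 25 in total.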

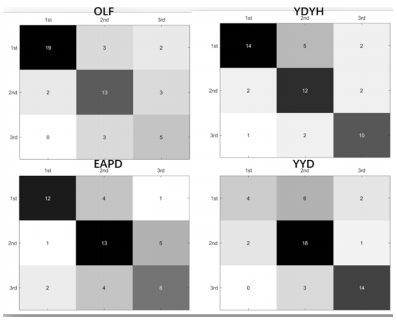

Experimental results of diagnosis model based on CSD+RF

The overall flowchart of the proposed diagnosis model based on CSD+RF is shown in Figure 6, and its detailed prediction performance on the test set is shown in the confusion matrix of Figure 8, in which the vertical axis represents the ground-truth label and the horizontal axis represents the prediction. OLF, YDYH, EAPD and YYD represent overabundant liver-fire syndrome, Yin deficiency with Yang hyperactivity, excessive accumulation of phlegm-dampness and Yin and Yang deficiency syndrome respectively.

Figure 8 Confusion matrix of stage I prediction.

As can be seen from the confusion matrix, the overall accuracy of the stage I classifier on the test set is 70.5%. The prediction accuracy for OLF, YDYH, EAPD, YYD and healthy persons is 68.0%, 75.0%, 58.3%, 70.8% and 80.0% respectively. The prediction accuracy is highest for healthy persons and lowest for excessive accumulation of phlegm-dampness.
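The reported figures are mutually consistent, which can be checked with a few lines of Python. The test-set sizes follow from the training protocol; the per-class counts of correct predictions are not stated in the text and are inferred here from the reported percentages, so they should be read as an assumption.

```python
# Test-set size per class: 50 samples minus the training samples (25, 26, 26, 26, 25).
test_size = {"OLF": 25, "YDYH": 24, "EAPD": 24, "YYD": 24, "Healthy": 25}

# Correct predictions per class, inferred from the reported per-class accuracies.
correct = {"OLF": 17, "YDYH": 18, "EAPD": 14, "YYD": 17, "Healthy": 20}

per_class = {k: correct[k] / test_size[k] for k in test_size}
overall = sum(correct.values()) / sum(test_size.values())
```

With these counts, 86 of the 122 test samples are classified correctly, which matches the overall accuracy of 70.5%.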

After the TCM syndrome types of the patients are classified, the diagnosis model based on CSD+RF continues to predict the level of each syndrome type in the second stage. For this evaluation, the first-stage predictions are assumed to be accurate, that is, the input syndrome types are all ground-truth values. The level is then predicted from the distance between the input features of the patient and the centroid of each level of the corresponding syndrome type. The prediction accuracy for each syndrome type is shown in Figure 9, in which the vertical axis represents the ground-truth label and the horizontal axis represents the prediction.

Figure 9 Confusion matrix of stage II prediction.

Experimental results of the diagnosis model based on DNN

For comparison, the overall flowchart of the diagnosis model based on DNN is shown in Figure 7. The training/testing split protocol is the same as for the framework based on CSD+RF. With data augmentation, there are 512 training samples in total for training stage II. In training stages I and II, the minibatch size is 8 and the optimizer is Adam35 with a learning rate of 0.0005. The training loss of the first stage is shown in Figure 10, and the experimental results on the test set are shown in Figure 11.

Figure 10 Training loss of the diagnosis model based on DNN in stage I.

Figure 11 Confusion matrix of the diagnosis model based on DNN.

Performance comparison

The comparison of prediction accuracy on the test set between the diagnosis model based on CSD+RF and the diagnosis model based on DNN is shown in Figure 12. In 4 of the 5 classes (OLF, YDYH, YYD and Healthy), the diagnosis model based on CSD+RF outperforms the diagnosis model based on DNN by an obvious margin.

Figure 12 Performance comparison between the diagnosis model based on CSD+RF and the diagnosis model based on DNN in terms of accuracy.

Figure 12 indicates that the TCM syndrome diagnosis model based on DNN cannot effectively predict the TCM syndromes in the test set, demonstrating the superiority of the diagnosis model based on CSD+RF.

We think the limited number of training samples might be the main reason for the unsatisfactory accuracy of the diagnosis model based on DNN. Although several techniques (e.g., a pre-training stage and data augmentation) are used to relieve the overfitting problem, 128 original training samples are still insufficient to extract representative color features relevant to TCM syndromes. In contrast, the elaborately designed diagnosis model based on CSD+RF can capture the distinguishing features of different TCM syndromes. Because this feature extraction is explicit, it can achieve better performance than the DNN-based model when training samples are limited. The effectiveness of the diagnosis model based on CSD+RF with limited training samples is further discussed in the ablation experiments.

Ablation experiment

To further validate the effectiveness of the proposed TCM syndrome and hypertension level prediction model, several ablation experiments are conducted. First, the facial region extraction results of several samples, shown in Figure 13, demonstrate that the Ensemble of Regression Trees implemented in Dlib is acceptable for facial region extraction in this work. The information of these samples is given in Table 2.

Figure 13 Several examples of facial specific region extraction.

| Serial number | Gender | Age | Blood pressure value | Blood pressure level | TCM syndrome type |
|---|---|---|---|---|---|
| 1 | male | 55 | SBP: 148 mmHg, DBP: 87 mmHg | I degree of hypertension | Overabundant liver-fire syndrome |
| 2 | male | 61 | SBP: 148 mmHg, DBP: 87 mmHg | I degree of hypertension | Overabundant liver-fire syndrome |
| 3 | male | 73 | SBP: 151 mmHg, DBP: 80 mmHg | I degree of hypertension | Excessive accumulation of phlegm-dampness |
| 4 | female | 70 | SBP: 153 mmHg, DBP: 83 mmHg | I degree of hypertension | Excessive accumulation of phlegm-dampness |
| 5 | male | 73 | SBP: 142 mmHg, DBP: 86 mmHg | I degree of hypertension | Yin deficiency with Yang hyperactivity |
| 6 | female | 73 | SBP: 145 mmHg, DBP: 88 mmHg | I degree of hypertension | Overabundant liver-fire syndrome |
| 7 | female | 58 | SBP: 149 mmHg, DBP: 90 mmHg | I degree of hypertension | Yin and Yang deficiency syndrome |
| 8 | female | 50 | SBP: 144 mmHg, DBP: 89 mmHg | I degree of hypertension | Yin and Yang deficiency syndrome |

Table 2 Sample Data Information

Second, some comparisons are illustrated in Figure 14 to validate the effectiveness of the proposed CSD method. The facial regions of 50 samples (10 healthy people and 10 patients from each TCM syndrome) are collected. The central spectral vector of region D is extracted, and its kurtosis and skewness are calculated (all regions are effective; region D is randomly selected to validate the color spectral decomposition method). The distribution of the 50 samples is shown in Figure 14. The horizontal and vertical axes of the upper sub-figure denote the kurtosis and skewness, respectively, of the central spectral vector of facial region D of each subject. The lower sub-figure shows several central spectral vectors. Figure 14 demonstrates that the proposed color spectral decomposition can effectively extract distinguishing facial chroma features of different types of people.

Figure 14 Feature comparison between healthy people and four TCM syndromes.

Lastly, to further validate the effectiveness of the color spectral decomposition, this paper stacks all spectral vectors from region C (also randomly selected) into a matrix of size Mx100, where 100 is the dimension of the spectral vector and M is the total number of spectral vectors in region C after outlier elimination. The covariance of this matrix for each TCM syndrome is shown in Figure 15, which further validates that the spectral vectors capture distinguishing features of different TCM syndromes.
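The covariance computation on the stacked matrix can be sketched as follows. The sketch treats each row as one spectral vector and each column as one anchor hue, and it uses the unbiased (M - 1) normalization; both the normalization and the function name are assumptions, and a tiny 3x2 matrix stands in for the Mx100 matrix.

```python
def covariance_matrix(rows):
    """Sample covariance between the columns of an M x N matrix (list of rows)."""
    m = len(rows)
    cols = list(zip(*rows))
    means = [sum(c) / m for c in cols]
    return [
        [
            sum((a - ma) * (b - mb) for a, b in zip(ca, cb)) / (m - 1)
            for cb, mb in zip(cols, means)
        ]
        for ca, ma in zip(cols, means)
    ]


# Toy stand-in: 3 spectral vectors of dimension 2.
stacked = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]
cov = covariance_matrix(stacked)
```

For the Mx100 matrix described above, the same function would return a 100x100 matrix, one entry per pair of anchor hues.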

Figure 15 Distinguishing covariance matrix between healthy people and yin and yang deficiency syndrome.