International Journal of Radiology & Radiation Therapy

eISSN: 2574-8084

Case Report Volume 12 Issue 1

1Department of Radiology, St. Jude Children’s Research Hospital, USA

2Department of Engineering, University of Memphis, USA

3Department of Biostatistics, St. Jude Children’s Research Hospital, USA

4Department of Biostatistics, St. Jude Children’s Research Hospital, USA

Correspondence: Zachary R. Abramson, MD DMD, Assistant Member, Clinical Radiology, Radiologist, Body Imaging, Department of Diagnostic Imaging, St. Jude Children’s Research Hospital, 262 Danny Thomas Place, Mail Stop 220, Memphis, TN 38105, Tel 901-595-2085

Received: January 25, 2025 | Published: February 12, 2025

Citation: Abramson ZR, Thompson D, Goode C, et al. The importance of stereoscopic vision in virtual surgical planning. Int J Radiol Radiat Ther. 2025;12(1):12-15. DOI: 10.15406/ijrrt.2025.12.00411

Rendering software provides opportunities to display 3D images on a 2D display for the purposes of pre-operative planning, though with inherent size and depth ambiguity. Adding stereoscopy, as provided by modern virtual reality (VR) devices, to traditional rendering principles may convey 3D patient anatomy more accurately. However, the added benefit of stereoscopic vision to pre-operative virtual planning has not been rigorously studied. A small pilot study was conducted to evaluate the following question: among a cohort of pediatric oncologic surgeons, how does stereoscopic vision resolve size-distance ambiguity during 3D virtual modeling using a commercially available VR headset? The findings and their interpretation are discussed here to promote awareness of ambiguity in virtual modeling in light of the increasing popularity of VR devices. In summary, surgeons viewing virtual 3D models are often not aware of the inherent ambiguity in the scene. Stereoscopic vision as provided by a commercially available VR headset helps resolve the ambiguity inherent to virtual scenes containing structures of unknown size and location. Transparent rendering, a mainstay of virtual pre-operative planning, is an ideal use case for stereoscopic vision. The use of stereoscopic displays for 3D surgical planning may reduce unanticipated intra-operative findings.

Keywords: 3D rendering, surgical planning, virtual reality, stereoscopy

Virtual 3D modeling is commonly employed in the setting of pre-operative planning prior to tumor resection surgery.1 The virtual preview of what the surgeon may encounter in the operating room appears to improve surgeon confidence and allows for the anticipation of intra-operative challenges. Specifically, the spatial relationship of a tumor to critical structures including vessels, nerves and organs is of utmost importance for oncologic surgery where the goal is to maximize resection while minimizing damage to normal tissues.

Traditionally, virtual 3D models are displayed as 3D renderings on a 2D display, simulating depth and dimensionality using linear perspective and simulated lighting.2,3 These rendering effects rely on an inherent sense of object size to infer distance. An object that appears small could be truly small and in the foreground of the image, or large and in the background; knowing the expected size of the object allows depth to be inferred correctly. However, when rendering unfamiliar structures, such as an individual patient’s tumor, these inferences can be misleading, hindering assessment of the object’s size and its relationship to other structures. Other monocular visual cues, such as object occlusion and shadows, also help resolve size-distance ambiguity, yet a great deal of ambiguity remains.4,5
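The ambiguity can be made concrete with a little geometry. The sketch below assumes an idealized single (monocular) eye point and a sphere centered on the line of sight, for which the silhouette’s angular radius θ satisfies sin θ = r/d, where r is the sphere radius and d is the distance from the eye to the sphere center. Because θ depends only on the ratio r/d, a small near sphere and a large far sphere can project identically. The distances used are hypothetical and are not values from the study.

```python
import math

def angular_radius_deg(radius_mm: float, distance_mm: float) -> float:
    """Angular radius (degrees) of a sphere's silhouette seen from a point eye.

    The tangent line of sight satisfies sin(theta) = r / d, where d is the
    distance from the eye to the sphere's center.
    """
    if distance_mm <= radius_mm:
        raise ValueError("the eye must be outside the sphere")
    return math.degrees(math.asin(radius_mm / distance_mm))

# Hypothetical scene: a 10 mm sphere 500 mm away versus a 5 mm sphere 250 mm away.
far_large = angular_radius_deg(10.0, 500.0)
near_small = angular_radius_deg(5.0, 250.0)
print(f"far, large: {far_large:.4f} deg; near, small: {near_small:.4f} deg")
```

Both spheres subtend the same angle, so with monocular viewing alone nothing in the image distinguishes "small and near" from "large and far."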

Stereoscopic vision can resolve the ambiguity of linear perspective.6 Each eye views the 3D scene from a slightly different position, and the brain combines the two views to produce depth perception. Unlike traditional 3D rendering on a 2D display, modern 3D displays, such as virtual reality headsets or autostereoscopic flat panel displays, produce the stereoscopic effect by presenting a different perspective to each eye.7 The added benefit of stereoscopic vision to pre-operative virtual modeling has not been rigorously studied.
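A simple triangulation model illustrates why a second viewpoint removes the ambiguity. The sketch below assumes an interpupillary distance of 63 mm (a typical adult value, not a parameter from this study) and idealized geometry in which each eye is a point and both spheres lie on the midline. It is a geometric illustration only; the visual system does not literally evaluate these formulas. Two hypothetical spheres with the same angular size (r/d = 0.02) produce different vergence angles, the vergence angle fixes the distance, and the distance together with the angular size fixes the true radius.

```python
import math

IPD_MM = 63.0  # assumed interpupillary distance (typical adult value)

def vergence_deg(distance_mm: float, ipd_mm: float = IPD_MM) -> float:
    """Angle between the two eyes' lines of sight to a point on the midline."""
    return math.degrees(2 * math.atan((ipd_mm / 2) / distance_mm))

def distance_from_vergence(vergence: float, ipd_mm: float = IPD_MM) -> float:
    """Triangulate distance from the vergence angle (inverse of vergence_deg)."""
    return (ipd_mm / 2) / math.tan(math.radians(vergence) / 2)

def radius_from_cues(angular_radius_deg: float, vergence: float) -> float:
    """Combine the monocular size cue (angular radius) with binocular distance."""
    return distance_from_vergence(vergence) * math.sin(math.radians(angular_radius_deg))

# Both hypothetical spheres subtend the same angle: sin(theta) = r / d = 0.02.
theta = math.degrees(math.asin(0.02))
for true_distance in (500.0, 250.0):
    v = vergence_deg(true_distance)
    r = radius_from_cues(theta, v)
    print(f"distance {true_distance:.0f} mm: vergence {v:.2f} deg, radius {r:.2f} mm")
```

The recovered radii are 10 mm and 5 mm respectively, even though the monocular angular size alone was identical for both.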

In this study we set out to answer the following question: among a cohort of pediatric oncologic surgeons, how does stereoscopic vision resolve size-distance ambiguity during 3D virtual modeling using a commercially available headset?

Participants

Pediatric oncologic surgeons who had prior experience with virtual reality pre-operative planning were recruited from our local institution. Three surgeons were included.

Model creation

Virtual models of varying size and position in space were created using the Slicer plug-in Slicer IGT (slicer.org). Two model sets were created: side-by-side spheres and nested spheres. Each side-by-side set contained two spheres that differed in size, distance, or both. For sets that differed in size, one sphere kept a ten-millimeter radius while the radius of the other sphere was reduced to nine, eight, or seven millimeters. For sets that differed in distance, both spheres kept a ten-millimeter radius while one sphere was moved five, ten, fifteen, or twenty millimeters farther from the viewer. For the set where both size and distance were varied, one sphere kept a ten-millimeter radius and did not move, while the radius of the other sphere was reduced to nine millimeters and that sphere was brought forward until its silhouette appeared equal to that of the larger ten-millimeter sphere. A control set included two identical spheres of the same size and distance from the viewer. The nested spheres consisted of one large semi-transparent sphere with a smaller opaque sphere positioned in the anterior, center, or posterior region of the larger sphere.
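The side-by-side configurations described above can be enumerated programmatically, which also shows how far the nine-millimeter sphere must be brought forward to match the ten-millimeter sphere’s silhouette. This is a sketch under two assumptions not stated in the text: the reference distance of 500 mm (roughly arm’s length) is hypothetical, and silhouettes are matched with the angular-size relation sin θ = r/d for a sphere seen from a single eye point, so the matched distance scales in proportion to the radius. The names used are illustrative and do not reflect the authors’ Slicer IGT workflow.

```python
from dataclasses import dataclass

REFERENCE_RADIUS_MM = 10.0
REFERENCE_DISTANCE_MM = 500.0  # hypothetical; the study does not report this distance

@dataclass(frozen=True)
class SideBySidePair:
    label: str
    radius_mm: float       # radius of the variable sphere
    extra_depth_mm: float  # farther (+) or nearer (-) than the reference sphere

def matched_distance(radius_mm: float,
                     ref_radius: float = REFERENCE_RADIUS_MM,
                     ref_distance: float = REFERENCE_DISTANCE_MM) -> float:
    """Distance at which a sphere of radius_mm subtends the same angle as the
    reference sphere (sin(theta) = r / d, so d scales with r)."""
    return ref_distance * radius_mm / ref_radius

def side_by_side_sets() -> list[SideBySidePair]:
    # Size differs: 9, 8, or 7 mm radius at the reference distance.
    sets = [SideBySidePair(f"size {r:g} mm", r, 0.0) for r in (9.0, 8.0, 7.0)]
    # Distance differs: 10 mm spheres moved 5 to 20 mm farther away.
    sets += [SideBySidePair(f"depth +{dz:g} mm", REFERENCE_RADIUS_MM, dz)
             for dz in (5.0, 10.0, 15.0, 20.0)]
    # Both differ: 9 mm sphere brought forward until silhouettes match.
    shift = matched_distance(9.0) - REFERENCE_DISTANCE_MM
    sets.append(SideBySidePair("size and depth", 9.0, shift))
    # Control: two identical spheres at the same distance.
    sets.append(SideBySidePair("control", REFERENCE_RADIUS_MM, 0.0))
    return sets

for pair in side_by_side_sets():
    print(pair)
```

Under these assumptions there are nine side-by-side configurations, and the nine-millimeter sphere would sit at 450 mm, i.e. 50 mm nearer than the reference sphere.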

Model display and data collection

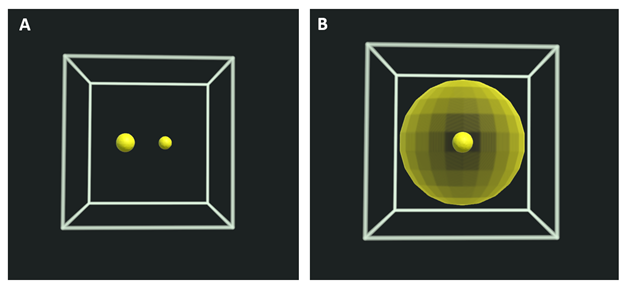

The model sets were displayed using virtual pre-operative planning software (Elucis, Realize Medical, Ontario, Canada) running on a head-mounted display (Meta Quest 2, Meta, Menlo Park, CA). Before the models were displayed, subjects positioned a virtual box at eye level directly in front of them, a comfortable arm’s reach away. To preclude stereoscopic vision, subjects wore an eye patch over one eye. The model sets were then displayed in randomized order. For the side-by-side spheres, subjects were asked two questions: Which sphere is bigger? Which sphere is closer? Four answer options were available for each question: right, left, same, or unknown (Figure 1A). For the nested spheres, subjects were asked to identify the position of the smaller opaque sphere as the front, center, or back of the larger semi-transparent sphere (Figure 1B). For each response, the subject gave a confidence rating on a scale of one to five. Each scenario was repeated three times in random order. The eye patch was then removed, and the same set of models was displayed again with the full stereoscopic effect. Responses to the same questions were recorded.

Figure 1 A. First-person view of the side-by-side sphere test. The sphere on the right appears smaller, but it is ambiguous whether this reflects a smaller size or a position farther back in the scene. B. First-person view of the nested sphere test. Without stereoscopic vision, the position of the smaller solid sphere (front, middle, or back of the larger transparent sphere) is ambiguous.
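The viewing protocol described above (each scenario repeated three times in random order, first with one eye patched and then with both eyes open) can be expressed as a trial schedule. The sketch below is hypothetical: the scenario labels, the seed, and the choice to shuffle side-by-side and nested scenarios together in a single block are assumptions, since the text does not say how the two model sets were interleaved.

```python
import random

# Hypothetical scenario labels based on the model sets described in the text.
SIDE_BY_SIDE = ["size 9", "size 8", "size 7", "depth +5", "depth +10",
                "depth +15", "depth +20", "size and depth", "control"]
NESTED = ["front", "center", "back"]
REPEATS = 3

def build_block(rng: random.Random) -> list[str]:
    """Every scenario repeated REPEATS times, then shuffled into random order."""
    scenarios = ([f"side-by-side: {s}" for s in SIDE_BY_SIDE]
                 + [f"nested: {n}" for n in NESTED])
    trials = scenarios * REPEATS
    rng.shuffle(trials)
    return trials

def build_session(seed: int) -> dict[str, list[str]]:
    """One monocular (eye patch) block followed by one stereoscopic block."""
    rng = random.Random(seed)
    return {"monocular": build_block(rng), "stereoscopic": build_block(rng)}

session = build_session(seed=1)
print(len(session["monocular"]), session["monocular"][:3])
```

Each block contains the same twelve scenarios three times each, so both viewing conditions present an identical set of trials in independently randomized orders.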

Statistical analysis

For the side-by-side sphere test, accuracy without and with stereoscopic vision was compared using the McNemar test. For the nested sphere set, a binomial test was used to compare accuracy without and with stereoscopic vision. Confidence scores for the side-by-side tests without and with stereoscopic vision were compared using paired t-tests. Confidence scores for the nested sphere tests without and with stereoscopic vision were compared using a signed-rank test. Differences in accuracy between surgeons, both without and with stereoscopic vision, were compared using the chi-squared test, with Fisher’s exact test used when any subgroup had fewer than 5 counts.
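The two paired accuracy tests can be sketched with the Python standard library alone. This sketch assumes that responses were paired by scenario and repetition, and that the exact binomial test was applied to the discordant pairs (an exact form of McNemar’s test); both are our reading rather than details reported by the authors. The nested-sphere counts are reconstructed from Table 1: a proportion of 0.37 of 27 corresponds to 10 correct responses without stereoscopic vision, and all 27 were correct with it, so 17 pairs changed from incorrect to correct and none changed the other way. The side-by-side discordant counts are not reported, so that comparison is not reproduced here.

```python
import math

def mcnemar_cc(b: int, c: int) -> tuple[float, float]:
    """McNemar's chi-squared test with continuity correction.

    b: pairs incorrect without stereo but correct with stereo
    c: pairs correct without stereo but incorrect with stereo
    Concordant pairs do not enter the statistic.
    Returns (chi-squared statistic, two-sided p-value).
    """
    if b + c == 0:
        return 0.0, 1.0
    stat = (abs(b - c) - 1) ** 2 / (b + c)
    # Chi-squared with 1 df is a squared standard normal, so
    # P(X >= stat) = erfc(sqrt(stat / 2)).
    return stat, math.erfc(math.sqrt(stat / 2))

def exact_binomial_two_sided(b: int, c: int) -> float:
    """Two-sided exact binomial test of b successes in b + c trials, p = 0.5."""
    n = b + c
    if n == 0:
        return 1.0
    k = min(b, c)
    tail = sum(math.comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)

# Nested spheres, reconstructed from Table 1: 10/27 correct without stereo,
# 27/27 with stereo, so b = 17 pairs improved and c = 0 pairs worsened.
b, c = 17, 0
print("exact binomial p =", exact_binomial_two_sided(b, c))
print("McNemar (continuity-corrected) chi2, p =", mcnemar_cc(b, c))
```

Both tests give p < 0.001 for these reconstructed counts, consistent with the value reported in Table 1.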

Feasibility

The three surgeons completed all questions; none chose unknown as an answer for any of the questions. Each test took approximately 30 minutes to complete.

Accuracy and confidence

In the side-by-side test, subjects were significantly more accurate (p = 0.014) and more confident (p < 0.001) in perceiving size and distance with stereoscopic vision. Overall accuracy improved from 68% without stereoscopic vision to 86% with stereoscopic vision. Similarly, for the nested spheres test, surgeons were both more accurate (p < 0.001) and more confident (p < 0.001) with stereoscopic vision when determining the position of the smaller sphere inside the larger semi-transparent sphere. Overall accuracy improved from 37% without stereoscopic vision to 100% with stereoscopic vision (Table 1). There were no significant differences in accuracy between surgeons, either without (p = 0.621) or with stereoscopic vision (p = 0.616). Confidence scores did not differ significantly between correct and incorrect responses, either without (p = 0.268) or with stereoscopic vision (p = 0.398).

| | Side-by-side spheres, without stereoscopic vision (N=78) | Side-by-side spheres, with stereoscopic vision (N=78) | P-value | Nested spheres, without stereoscopic vision (N=27) | Nested spheres, with stereoscopic vision (N=27) | P-value |
|---|---|---|---|---|---|---|
| Accuracy (proportion) | 0.67 | 0.86 | 0.014<sup>a</sup> | 0.37 | 1.00\* | < 0.001<sup>b</sup> |
| Confidence, mean (standard deviation) | 4.10 (0.97) | 4.50 (0.64) | < 0.001<sup>c</sup> | 3.33 (0.832) | 4.59 (0.636) | < 0.001<sup>d</sup> |

Table 1 Analysis of collected responses and associated confidence scores

<sup>a</sup>McNemar’s chi-squared test with continuity correction; \*all subjects gave correct responses on the nested sphere sets with stereoscopic vision; <sup>b</sup>exact binomial test; <sup>c</sup>Student’s paired t-test; <sup>d</sup>Wilcoxon signed-rank test.

Evolved to perceive depth and dimension, the human visual system is a specialized instrument for comprehending and interacting with the 3D world. Yet until recently, virtual representations of the 3D world have been 2D: traditional computer monitors, projector screens, or mobile devices. Despite the limitations of 2D displays, 3D objects can be presented in a way that simulates depth.8-10 Conveying 3D information of an object on a 2D display is termed rendering, and it is built upon a host of monocular visual depth cues, such as shading, object occlusion, linear perspective, and shadowing, among others.3 However, these 3D-rendered images are still limited by depth ambiguity and a fixed perspective.4,5

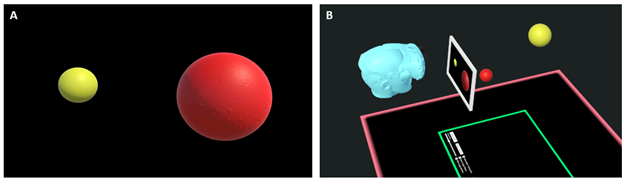

Depth ambiguity can be understood through a particular case termed size-distance ambiguity. This phenomenon occurs when the apparent size of an unknown object cannot be attributed with certainty, using monocular visual cues, to either its dimensions or its distance from the viewer (Figure 2A). Recent developments in 3D display technology provide new opportunities to use binocular depth cues, specifically stereoscopic vision, to convey spatial information and resolve size-distance uncertainty (Figure 2B).

Figure 2 A. Red and yellow spheroid objects 3D-rendered for presentation on a 2D display. While the lighting provides a sense of depth and dimensionality, the relative sizes and positions of the two objects are uncertain. B. Avatar viewing a simulated 2D display within a 3D virtual space. Notice how the red ball, as rendered on the 2D image, appears larger than the yellow ball. The viewer cannot tell whether this is because the red ball is larger or because it is closer than the yellow ball. Behind the 2D image is the 3D scene, which clearly shows that the yellow ball is larger and farther away. This phenomenon is known as size-distance ambiguity and can be resolved by stereoscopic vision.

In this study, a series of virtual scenes with inherent size-distance ambiguity was created to evaluate the ability of stereoscopic vision to resolve this ambiguity for a cohort of pediatric oncologic surgeons. Interestingly, despite the ambiguity of size and distance in the tests performed without stereoscopic vision, the subjects never chose "unknown" when asked about the size or distance of the virtual objects. Further, confidence scores did not differ significantly between their correct and incorrect answers. Taken together, these two findings suggest that the surgeons were not aware of the ambiguity in the virtual scenes presented.

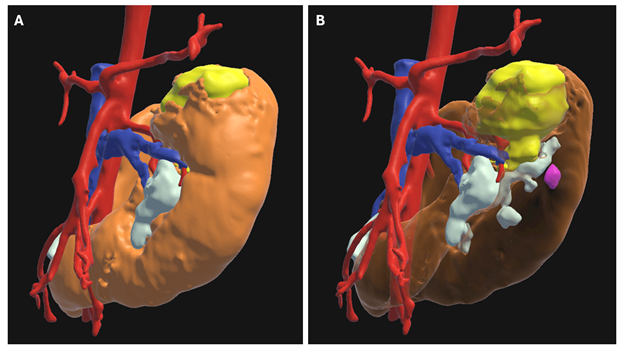

As expected, the addition of stereoscopic vision led to greater accuracy and confidence in determining the size and distance of unknown objects in a virtual scene. One notable finding is that this effect was greatest for the nested spheres test. Conventional monocular depth cues, such as light reflections, shadows, and overlap, depend on solid objects to help resolve size-distance ambiguity. As a structure is made more transparent, the value of these cues diminishes, and it is in these circumstances that the stereoscopic effect may be most valuable. Transparent rendering plays an important role in virtual pre-operative planning, allowing a surgeon to understand the 3D relationships of structures that will not readily be visible during dissection, such as the location of a tumor inside a solid organ, prominent blood vessels running through a tumor, or a vital structure occluded from view (Figure 3).11,12

Figure 3 3D model of a horseshoe kidney (orange) with a left superior pole Wilms tumor (yellow). A. The kidney is rendered opaque, which allows lighting and shadows to convey the external contour of the kidney but obscures the tumor from view. B. The kidney is rendered transparent, allowing visualization of the tumor within, but at the expense of losing the monocular shading cues that convey renal anatomy. This limitation can be overcome by the use of stereoscopic vision, which provides a binocular depth cue independent of lighting and shading.
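The loss of monocular cues under transparency discussed above can be illustrated with standard front-to-back alpha compositing (the "over" operator used by many renderers). The minimal sketch below samples a single central ray through a hypothetical nested-sphere scene; the colors, opacity, and dimensions are illustrative assumptions, and lighting and perspective are ignored. Because compositing depends only on the order of surfaces along the ray and not on their distances, the pixel color is the same whether the inner sphere sits in the front, center, or back of the outer sphere.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Surface:
    depth_mm: float                  # distance along the ray from the eye
    rgb: tuple[float, float, float]  # surface color, components in 0..1
    alpha: float                     # opacity: 0 = invisible, 1 = opaque

def composite(surfaces: list[Surface]) -> tuple[float, float, float]:
    """Front-to-back 'over' compositing of the surfaces one ray passes through."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light from farther surfaces still reaching the eye
    for s in sorted(surfaces, key=lambda s: s.depth_mm):
        weight = transmittance * s.alpha
        for i in range(3):
            color[i] += weight * s.rgb[i]
        transmittance *= 1.0 - s.alpha
        if transmittance == 0.0:
            break  # an opaque surface hides everything behind it
    return (color[0], color[1], color[2])

# Hypothetical scene: a semi-transparent outer sphere (radius 30 mm, center
# 500 mm away) and an opaque inner sphere (radius 5 mm). Only the inner
# sphere's depth changes between the three cases.
SHELL = (1.0, 0.5, 0.0)  # orange
INNER = (1.0, 1.0, 0.0)  # yellow
pixel = {}
for position, offset in (("front", -15.0), ("center", 0.0), ("back", 15.0)):
    pixel[position] = composite([
        Surface(470.0, SHELL, 0.3),                 # near wall of the outer sphere
        Surface(500.0 + offset - 5.0, INNER, 1.0),  # near side of the inner sphere
        Surface(530.0, SHELL, 0.3),                 # far wall, hidden by the inner sphere
    ])
print(pixel)
```

In a full perspective rendering the inner sphere’s silhouette would also shrink slightly as it moves back, but without knowing its true size the viewer cannot tell that change apart from a difference in size, which is the gap binocular disparity fills.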

The absence of significant differences between surgeons suggests that the difficulty in resolving size-distance ambiguity without stereoscopic vision is not particular to one surgeon. All surgeons showed similar improvements in accuracy with the addition of stereoscopic vision. This consistency is advantageous in pre-operative planning, where multiple surgeons participating in an operation need a shared perception of a 3D virtual scene to facilitate discussion and collaboration.

There are several limitations to our study design. First, this study did not assess the role of parallax in resolving size-distance ambiguity; parallax was excluded intentionally to isolate the effect of stereoscopic vision. Similarly, traditional monocular visual cues were removed, again to isolate the effect of stereoscopic vision on the subjects. Second, the cohort consisted of only three surgeons at a single institution, so the findings may not be generalizable to other types of surgeons or to surgeons at different institutions. Finally, there are other cues to distance in the operating room that are not captured by 3D modeling, including normal anatomic structures for comparison, familiar surgical instruments, and, of course, tactile feedback.13-16 However, the lack of these cues in the virtual space elevates the importance of stereoscopic vision during the planning process.

In conclusion, stereoscopic vision helps resolve ambiguity inherent to virtual scenes containing structures of unknown size and location. This ambiguity is typically encountered during virtual pre-operative planning, where pathology is displayed with limited normal structures to serve as a reference. The use of transparent rendering to reveal structures which otherwise would be occluded further diminishes the ability of monocular cues to resolve size-distance ambiguity, providing the ideal use case for stereoscopic vision.

A student author was supported by R25CA023944 from the National Cancer Institute.

The authors declare that there are no conflicts of interest.

©2025 Abramson, et al. This is an open access article distributed under the terms of the, which permits unrestricted use, distribution, and build upon your work non-commercially.