International Journal of

eISSN: 2574-8084

Research Article Volume 8 Issue 4

1PhD candidate, University of Tunis ElManar, Tunisia

2PhD, University of Tunis, Tunisia

3University of Tunis ElManar, Tunisia

4MD, Department of Nuclear Medicine, Salah Azaiez Institute, Tunisia

Correspondence: Hamida Romdhane, PhD candidate, University of Tunis ElManar, ISTMT, LR13ES07 LRBTM, Tunis, Tunisia

Received: August 29, 2021 | Published: October 12, 2021

Citation: Romdhane H, Cherni MA, Sellem DB. Early diagnosis of pulmonary embolism using deep learning and SPECT/CT Exams Fusion. Int J Radiol Radiat Ther. 2021;8(4):156-162. DOI: 10.15406/ijrrt.2021.08.00308

Background: Pulmonary embolism is a serious, potentially life-threatening disease whose detection and treatment are sometimes complicated. The two most commonly used imaging techniques are computed tomography pulmonary angiography and pulmonary scintigraphy. Currently, hybrid imaging combining single photon emission computerized tomography and computed tomography (SPECT/CT) plays an important role in the diagnosis of pulmonary embolism.

Objective: The aim of this analytical study is to detect pulmonary embolism. We propose a new method based on the fusion of SPECT and CT images by a deep Siamese Neural Network for the early detection of this potentially fatal disease.

Material and methods: The method consists of two main parts: fusion of SPECT and CT images, and detection of the pathological lobes. It starts with the segmentation of both the SPECT and CT images to obtain 3D binary images. Next, we identify the different pulmonary lobes in the CT images. Then, we fuse the SPECT and CT images using a deep Siamese Neural Network. Finally, the fused images are compared with the image in which the lobes are identified, in order to detect pulmonary embolism and identify the pathological lobes.

Results: At the lobe level, the validation achieved an accuracy of 90.5%, a sensitivity of 88.2% and a specificity of 91.5%.

Conclusion: The obtained results prove the effectiveness of the proposed method in the detection of pulmonary embolism.

Keywords: image fusion, image processing, deep siamese neural network, SPECT/CT, pulmonary lobes

2D, 2 dimensional; 3D, 3 dimensional; AG, average gradient; CNN, convolutional neural network; CF, column frequencies; CT, computed tomography; CTPA, computed tomography pulmonary angiography; DWT, discrete wavelet transform; FP, false-positive; FN, false-negative; GFS, weighted average fusion; MI, mutual information; MST, multi-scale transform; PE, pulmonary embolism; RF, row frequencies; SF, spatial frequency; SD, standard deviation; SPECT, single photon emission computerized tomography; TN, true-negative; TP, true-positive

Pulmonary embolism (PE) is one of the most dangerous diseases.1 It is the partial or complete blockage of one or more pulmonary arteries, which occurs when a blood clot (embolus) reaches the pulmonary arteries.2,3 The thrombus often forms in the deep veins of the lower extremities and then migrates through the venous system to the right heart chambers and, afterward, to the lungs.4 PE is a frequent cause of death: untreated, it can be fatal, with a high mortality rate that can be reduced by rapid detection. Its diagnosis, however, has well-recognized limitations.5 The symptoms and clinical signs of PE are nonspecific, which makes the diagnosis difficult, particularly in patients with comorbidities.6 It therefore requires additional tests, including imaging techniques. In recent years, there have been major developments in these diagnostic tests, opening new opportunities in the diagnosis of PE and implying new diagnostic strategies for acute PE. Currently, the two most commonly used imaging techniques are Computed Tomography Pulmonary Angiography (CTPA)7-9 and pulmonary scintigraphy.6,10

CTPA is a radiological imaging exam. An iodinated contrast agent (opaque to X-rays) is injected intravenously to individualize the blood vessels, which are then observed with an X-ray scanner. It provides cross-sectional images of sufficient resolution. In a normal CTPA scan, the contrast filling the pulmonary vessels appears bright white. In case of PE, the CTPA scan shows a filling defect: the thrombus appears dark in place of the contrast. This makes it possible to locate the embolized artery.11

Pulmonary scintigraphy is a nuclear medicine imaging technique. A radiopharmaceutical is administered to the patient; it combines a non-radioactive ("cold") molecule with pulmonary tropism and a gamma-emitting radioisotope tracer. A dedicated gamma camera then visualizes the distribution of this radiopharmaceutical. The standard protocol includes planar acquisitions. Depending on the radiopharmaceutical, there are two types of pulmonary scintigraphy. Pulmonary ventilation scintigraphy consists of inhaling a radioactive gas (krypton 81m or Technegas), which allows the visualization of ventilated regions. Pulmonary perfusion scintigraphy consists of injecting human albumin macroaggregates radiolabelled with 99mTc, which allows the visualization of perfused regions. For the diagnosis of PE, pulmonary perfusion scintigraphy has an excellent negative predictive value: a normal scan makes it possible to exclude this diagnosis. However, its specificity is weak and depends on the patient's cardiorespiratory history and, especially, on the presence or absence of abnormalities on the chest radiograph. Adding pulmonary ventilation scintigraphy improves this specificity: a perfusion defect with normal ventilation (mismatch) is the characteristic sign of PE.12-14 However, ventilation scintigraphy cannot always be performed: krypton 81m is not available in Tunisia, and most nuclear medicine departments do not have Technegas. In addition, pulmonary scintigraphy is a functional imaging technique that provides few anatomical landmarks.
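The ventilation/perfusion mismatch criterion described above can be written as a simple per-region rule. The sketch below is illustrative only: the boolean inputs `perfused` and `ventilated` and the region names are hypothetical, and real interpretation relies on graded defect size, shape and clinical context rather than a yes/no flag.

```python
def classify_region(perfused: bool, ventilated: bool) -> str:
    """Apply the V/Q mismatch rule to one lung region (illustrative only)."""
    if perfused:
        # No perfusion defect in this region.
        return "no perfusion defect"
    if ventilated:
        # Perfusion defect with normal ventilation: mismatch, the characteristic sign of PE.
        return "mismatch (suggests PE)"
    # Matched defect: perfusion and ventilation are both reduced.
    return "matched defect (non-specific)"


# Hypothetical regions: (perfused, ventilated)
regions = {"RUL": (True, True), "RML": (False, True), "LLL": (False, False)}
for name, (q, v) in regions.items():
    print(name, classify_region(q, v))
```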

Recently, hybrid machines have made it possible to combine the functional data from single photon emission computerized tomography (SPECT) with the anatomical data from low-dose Computed Tomography (CT) scans. They are widely used in daily practice and have improved diagnostic performance, especially the specificity of pulmonary scintigraphy, by identifying non-embolic abnormalities.15 However, only a few studies have addressed the utility of SPECT/CT in the diagnosis of PE, and scientific societies have not yet issued official recommendations regarding this method.

In this paper, we present a new method based on the fusion of SPECT/CT images by a Convolutional Neural Network (CNN) to diagnose PE and assess its extent. The rest of the manuscript is organized as follows: Section 2 presents the rationale for CNN-based fusion, Section 3 describes the proposed method, Section 4 reports and discusses the experimental results, and Section 5 provides the conclusions.

CNN model for image fusion

The goal of medical image fusion is to improve the accuracy of clinical diagnosis by producing a fused image that preserves the details and salient features of both source images. CT images provide high-resolution anatomical information, while SPECT images provide functional information, namely pulmonary perfusion in our work. Medical image fusion therefore combines the complementary information contained in the source images into a composite image that helps physicians make easier and better-informed decisions.

In recent years, a variety of medical image fusion methods have been proposed; they can be classified into two classes: transform domain methods and spatial domain methods. Because of differences in imaging principles, the intensities at the same location in different source images often vary significantly. Therefore, most fusion algorithms operate in a multi-scale way to obtain perceptually good results. These multi-scale transform (MST) based algorithms generally consist of three steps: decomposition, fusion and reconstruction. One of the most important problems in image fusion is computing a weight map that integrates the pixel activity information from the different sources. In most existing fusion methods, this is achieved in two steps known as activity level measurement and weight assignment. These steps are usually not very robust, owing to factors such as differences between source pixel intensities, misregistration and noise. To overcome the difficulty of designing robust activity level measurements and weight assignment strategies, a CNN is trained to encode a direct mapping from the source images to the weight map.16
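As a point of comparison for the CNN approach, the two hand-crafted steps named above (activity level measurement, then weight assignment) can be sketched in the spatial domain. This is an illustrative sketch, not the method of this paper or of reference 16: it assumes the absolute Laplacian, averaged over a 3×3 window, as the activity measure and a binary "choose-max" rule as the weight assignment, and it uses only NumPy.

```python
import numpy as np


def laplacian_abs(img: np.ndarray) -> np.ndarray:
    """High-pass activity: absolute 4-neighbour Laplacian (edge-padded)."""
    p = np.pad(img, 1, mode="edge")
    lap = (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]
           - 4.0 * p[1:-1, 1:-1])
    return np.abs(lap)


def box_mean(img: np.ndarray, r: int = 1) -> np.ndarray:
    """Local mean over a (2r+1)x(2r+1) window (edge-padded)."""
    p = np.pad(img, r, mode="edge")
    h, w = img.shape
    out = np.zeros_like(img, dtype=float)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out += p[dy:dy + h, dx:dx + w]
    return out / (2 * r + 1) ** 2


def fuse_handcrafted(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Activity level measurement followed by choose-max weight assignment."""
    act_a = box_mean(laplacian_abs(a))        # activity level of source A
    act_b = box_mean(laplacian_abs(b))        # activity level of source B
    weight = (act_a >= act_b).astype(float)   # binary weight map
    return weight * a + (1.0 - weight) * b    # pixel-wise weighted average
```

Each of these hand-designed choices (filter, window, decision rule) is what the CNN-based approach replaces with a learned mapping.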

CNN is a typical deep learning model that attempts to learn a hierarchical feature representation of image/signal data at different levels of abstraction. More concretely, a CNN is a trainable multi-stage feed-forward artificial neural network in which each layer contains a certain number of feature maps, each corresponding to a level of abstraction. Each coefficient in a feature map is called a neuron. Operations such as linear convolution, non-linear activation and spatial pooling, applied to neurons, connect the feature maps of successive layers.

The creation of a focus map in image fusion can be viewed as a classification problem. More precisely, activity level measurement plays the role of feature extraction, whereas the fusion rule plays the role of the classifier in a general classification task. It is therefore theoretically feasible to use CNNs for image fusion. The CNN architecture for visual classification is an end-to-end framework in which the input is an image and the output is a label vector giving the probability of each category. Between these two ends, the network consists of several convolutional layers (a non-linear layer such as ReLU always follows a convolutional layer, so we do not mention it explicitly later), max-pooling layers and fully-connected layers. The convolutional and max-pooling layers are generally viewed as the feature extraction part of the system, while the fully-connected layers at the output end are regarded as the classification part.

We further explain this point from the implementation viewpoint. In most existing fusion algorithms, whether in the spatial or the transform domain, activity level measurement is essentially implemented by designing local filters that extract high-frequency details. On the one hand, most transform domain fusion methods represent images or image patches using a set of predesigned bases, such as wavelets, or trained dictionary atoms. From an image processing viewpoint, this is generally equivalent to convolving the images with those bases; for example, the discrete wavelet transform is implemented precisely by filtering. On the other hand, for spatial domain fusion methods, the connection is even clearer, since many activity level measurements are based on high-pass spatial filtering. Furthermore, the fusion rule, usually interpreted as the strategy that assigns weights to the source images based on the computed activity level measures, can also be transformed into a filtering-based form. Since the basic operation in a CNN model is convolution (a fully-connected layer can be viewed as a convolution whose kernel size equals the spatial size of its input), it is practically feasible to apply CNNs to image fusion.
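The statement that a fully-connected layer is a convolution whose kernel covers the whole input can be checked numerically. This NumPy sketch uses random data and hypothetical sizes (a 4×4 input and 3 output units); it compares a fully-connected layer with a "valid" convolution whose kernels have the size of the input.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 4))        # input feature map (H = W = 4)
w = rng.standard_normal((3, 4, 4))     # 3 output units, each a 4x4 kernel

# Fully-connected layer: flatten the input and multiply by a weight matrix.
fc_out = w.reshape(3, -1) @ x.reshape(-1)


def conv2d_valid(img, kernel):
    """Single-channel 'valid' cross-correlation (the usual CNN convention)."""
    kh, kw = kernel.shape
    oh, ow = img.shape[0] - kh + 1, img.shape[1] - kw + 1
    out = np.empty((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(img[i:i + kh, j:j + kw] * kernel)
    return out


# A kernel as large as the input yields a 1x1 output per kernel.
conv_out = np.array([conv2d_valid(x, k)[0, 0] for k in w])

print(np.allclose(fc_out, conv_out))  # True
```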

We fused 3D lung SPECT functional images with the corresponding 3D CT images to provide anatomical information for patients suspected of PE. The methodology starts with a segmentation procedure that extracts the relevant information, i.e., the lungs, from both the CT and SPECT scans and produces a 3D binary image. This establishes a correspondence between the SPECT and CT images of the pulmonary lobes, in order to identify the lobes containing perfusion defects. The proposed method is described in Figure 1.

Pre-processing

CT images

The segmentation method is applied to each CT slice, and the 3D CT binary image is obtained by stacking all the segmented slices.
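The slice-wise processing and stacking can be sketched as follows. The paper does not specify its CT segmentation method, so `segment_slice` is a hypothetical placeholder that simply thresholds Hounsfield units at an assumed value of -400 HU; a real lung segmentation would also remove the air outside the body and the airways.

```python
import numpy as np

LUNG_HU_THRESHOLD = -400.0  # assumed threshold, not taken from the paper


def segment_slice(slice_hu: np.ndarray) -> np.ndarray:
    """Hypothetical 2D lung segmentation: keep air-like pixels below the threshold."""
    return slice_hu < LUNG_HU_THRESHOLD


def build_binary_volume(slices: list) -> np.ndarray:
    """Segment each CT slice, then stack the 2D masks into a 3D binary image."""
    masks = [segment_slice(s) for s in slices]
    return np.stack(masks, axis=0)  # shape: (n_slices, rows, cols)
```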

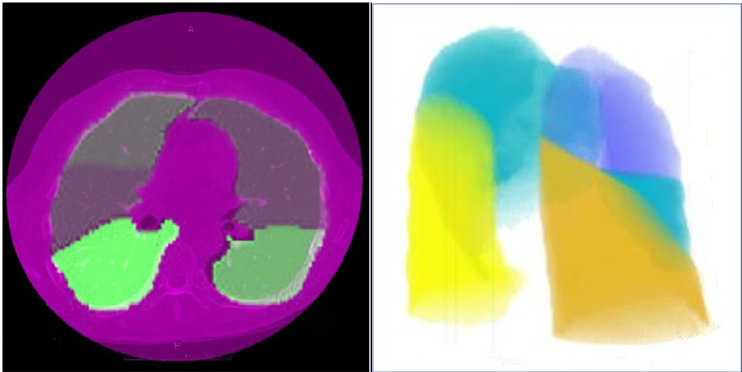

Figure 2 (a) Identification of the five lung lobes, (b) 3D surface rendering of the lung: right upper lobe (purple), right middle lobe (blue), right lower lobe (orange), left upper lobe (blue) and left lower lobe (yellow).

SPECT images

SPECT images were obtained from a hybrid machine combining an X-ray CT scanner and a dual-head SPECT camera, juxtaposed to enable systematic registration of the SPECT and X-ray CT images. Images were acquired in tomographic mode 20 minutes after the injection of 55.5 MBq of 99mTc-labelled macroaggregated human serum albumin.

To delineate the boundaries of the pulmonary region in the SPECT exam, the contour of the lungs is determined through a segmentation process.

Fusion

The fusion method used in our work is presented in Figure 3 and consists of four steps. In the first step, the two source images are fed to a pre-trained CNN model that outputs a score map, in which each coefficient indicates the focus property of a pair of corresponding patches from the two source images. A focus map of the same size as the source images is then obtained by averaging the overlapping patches from the score map. In the second step, the focus map is segmented into a binary map using a threshold of 0.5. In the third step, the binary map is refined with two popular consistency verification strategies, namely guided image filtering and small region removal, to produce the final decision map. Finally, the fused image is obtained from the final decision map using a pixel-wise weighted-average strategy.18
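Steps 1, 2 and 4 can be sketched in NumPy, assuming the score map has already been produced by the network for patches taken with a fixed size and stride. The default patch size of 16 and stride of 2 are assumptions (the text does not state them), and step 3 (guided image filtering and small region removal) is omitted, so this is a simplified illustration rather than the full method.

```python
import numpy as np


def focus_map_from_scores(scores, image_shape, patch=16, stride=2):
    """Step 1: average the scores of all patches that cover each pixel."""
    acc = np.zeros(image_shape)
    count = np.zeros(image_shape)
    for i in range(scores.shape[0]):
        for j in range(scores.shape[1]):
            r, c = i * stride, j * stride
            acc[r:r + patch, c:c + patch] += scores[i, j]
            count[r:r + patch, c:c + patch] += 1
    return acc / np.maximum(count, 1)  # uncovered pixels get 0


def fuse_with_decision_map(a, b, scores, patch=16, stride=2):
    focus = focus_map_from_scores(scores, a.shape, patch, stride)
    decision = (focus > 0.5).astype(float)       # step 2: threshold at 0.5
    # Step 3 (consistency verification) is omitted in this sketch.
    return decision * a + (1.0 - decision) * b   # step 4: pixel-wise weighted average
```

For an H×W image, the score map is expected to have ((H - patch) // stride + 1) × ((W - patch) // stride + 1) entries.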

In this paper, we adapted a Siamese neural network to fuse our SPECT/CT images.

Siamese neural network: A Siamese network (sometimes called a twin neural network) is an artificial neural network that uses the same weights while working in tandem on two different input vectors to compute comparable output vectors.19,20 The main idea behind Siamese networks is that they learn useful data descriptors that can then be used to compare the inputs of the respective subnetworks. The inputs can be numerical data (in which case the subnetworks are usually formed by fully-connected layers), image data (with CNNs as subnetworks) or even sequential data such as sentences or time signals (with RNNs as subnetworks). Table 1 shows the Siamese neural network model used in the fusion algorithm. Each branch of the network has three convolutional layers and one max-pooling layer. The kernel size and stride of each convolutional layer are set to 3×3 and 1, respectively, and those of the max-pooling layer to 2×2 and 2. The 256 feature maps obtained by each branch are concatenated and then fully connected to a 256-dimensional feature vector. The output of the network is a 2D vector that is fully connected to the 256-dimensional vector and fed to a 2-way softmax layer, which produces a probability distribution over two classes. The schematic diagram of the algorithm is shown in Figure 4.

| Layer | Layer name | Kernel*Unit | Other layer parameter |
|---|---|---|---|
| 0 | Input layer | 256x256 | - |
| 1 | Convolution | 3x3 | Stride=1 |
| 2 | Convolution | 3x3 | Stride=1 |
| 3 | Max pooling | 2x2 | Stride=2 |
| 4 | Convolution | 3x3 | Stride=1 |
| 5 | Final layers | 1 x (2 output) | Softmax |

Table 1 Used parameters for all layers of the CNN Model
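The shared-weight idea and the layer sequence of Table 1 can be illustrated with a small NumPy forward pass. This is a sketch under stated assumptions, not the authors' trained model: the weights are random (untrained), the input is a 16×16 patch rather than the 256×256 input of Table 1, each branch uses 4 feature maps instead of 256, and the hidden fully-connected layer has 8 units instead of 256; these sizes only keep the example fast.

```python
import numpy as np

rng = np.random.default_rng(0)


def conv3x3(x, w, b):
    """'Valid' 3x3 convolution, stride 1, then ReLU. x: (C_in, H, W), w: (C_out, C_in, 3, 3)."""
    h, wd = x.shape[1] - 2, x.shape[2] - 2
    out = np.empty((w.shape[0], h, wd))
    for i in range(h):
        for j in range(wd):
            patch = x[:, i:i + 3, j:j + 3]
            out[:, i, j] = np.tensordot(w, patch, axes=([1, 2, 3], [0, 1, 2])) + b
    return np.maximum(out, 0.0)  # ReLU follows every convolution


def maxpool2x2(x):
    """2x2 max pooling with stride 2 (drops an odd last row/column)."""
    c, h, w = x.shape
    x = x[:, : h // 2 * 2, : w // 2 * 2]
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))


def init_branch(channels=4):
    """Random weights for one branch; the same list is reused for both inputs."""
    shapes = [(channels, 1), (channels, channels), (channels, channels)]
    return [(rng.standard_normal((o, i, 3, 3)) * 0.1, np.zeros(o)) for o, i in shapes]


def branch(x, params):
    (w1, b1), (w2, b2), (w3, b3) = params
    x = conv3x3(x, w1, b1)   # layer 1: convolution
    x = conv3x3(x, w2, b2)   # layer 2: convolution
    x = maxpool2x2(x)        # layer 3: max pooling
    x = conv3x3(x, w3, b3)   # layer 4: convolution
    return x.reshape(-1)


def siamese_forward(patch_a, patch_b, shared, fc1, fc2):
    # The SAME parameters process both inputs: this is what makes the network Siamese.
    fa = branch(patch_a[None], shared)
    fb = branch(patch_b[None], shared)
    h = np.maximum(fc1 @ np.concatenate([fa, fb]), 0.0)  # hidden fully-connected layer
    logits = fc2 @ h                                     # 2 outputs
    e = np.exp(logits - logits.max())
    return e / e.sum()                                   # 2-way softmax


shared = init_branch(channels=4)
hidden = 8  # reduced from 256 for speed
fc1 = rng.standard_normal((hidden, 2 * 4 * 4 * 4)) * 0.1  # 16x16 input -> 4x4x4 per branch
fc2 = rng.standard_normal((2, hidden)) * 0.1
a, b = rng.random((16, 16)), rng.random((16, 16))
p = siamese_forward(a, b, shared, fc1, fc2)
print(p, p.sum())
```

With a 16×16 patch, each branch maps 16 → 14 → 12 → 6 → 4 pixels per side, so each branch contributes 4 × 4 × 4 = 64 features before concatenation.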

Registration: Before fusing the SPECT and CT images, a registration step is necessary to align them. The registration method used in this paper registers two 2-D or 3-D images by optimizing an intensity-based metric. It relies on an image similarity metric and an optimizer: the similarity metric takes two images and returns a scalar value that describes how similar they are, and the optimizer defines the method for minimizing or maximizing this metric.21
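The metric-plus-optimizer principle can be illustrated with a deliberately simple case. In this sketch (an assumption, not the registration tool used in the paper), the transform is restricted to integer 2D translations, the similarity metric is the mean squared difference, and the "optimizer" is an exhaustive search; `np.roll` wraps pixels around the border, which real resampling would not do. For multimodal SPECT/CT data, mutual information is usually a more appropriate metric than mean squared difference, because the two modalities do not share intensity values.

```python
import numpy as np


def mse(fixed, moving):
    """Similarity metric: mean squared intensity difference (lower is better)."""
    return float(np.mean((fixed - moving) ** 2))


def register_translation(fixed, moving, max_shift=5):
    """Exhaustive 'optimizer' over integer 2D translations.

    Returns the (dy, dx) shift that minimises the metric and the shifted image.
    """
    best = (0, 0)
    best_score = mse(fixed, moving)
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            candidate = np.roll(moving, (dy, dx), axis=(0, 1))
            score = mse(fixed, candidate)
            if score < best_score:
                best, best_score = (dy, dx), score
    return best, np.roll(moving, best, axis=(0, 1))
```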

Evaluation of the fusion method

The purpose of image fusion is to preserve all the useful information of the source images without introducing artifacts. Verifying the effectiveness of a given fusion algorithm therefore requires quantitative measures. Many fusion metrics have been proposed in the literature; one of the most recent is the Petrovic metric. The performance evaluation metrics are briefly described below. Consider an input image f(m,n) of size p×q.

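Spatial frequency (SF): This metric measures the overall information level (activity level) present in the regions of the fused image. It is given by the square root of the sum of the squares of the row frequency (RF) and the column frequency (CF):

$$SF = \sqrt{RF^2 + CF^2}, \qquad (1)$$

where

$$RF = \sqrt{\frac{1}{pq}\sum_{m=1}^{p}\sum_{n=2}^{q}\left[f(m,n) - f(m,n-1)\right]^2}, \qquad CF = \sqrt{\frac{1}{pq}\sum_{m=2}^{p}\sum_{n=1}^{q}\left[f(m,n) - f(m-1,n)\right]^2}.$$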
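The spatial frequency can be computed directly with array differences. A minimal NumPy sketch, assuming a 2D grayscale array and the usual 1/(pq) normalization for both RF and CF (some papers normalize by the number of differences instead, which changes the values slightly):

```python
import numpy as np


def spatial_frequency(f: np.ndarray) -> float:
    """SF = sqrt(RF^2 + CF^2), with RF and CF both normalised by p*q."""
    f = f.astype(float)
    p, q = f.shape
    rf = np.sqrt(np.sum((f[:, 1:] - f[:, :-1]) ** 2) / (p * q))  # row frequency
    cf = np.sqrt(np.sum((f[1:, :] - f[:-1, :]) ** 2) / (p * q))  # column frequency
    return float(np.sqrt(rf ** 2 + cf ** 2))
```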

In addition to the above metrics, an objective characterization of fusion performance based on a gradient information representation can be considered. This approach provides much deeper insight into the strengths and weaknesses of image fusion methods by estimating the information contribution of each source image and by measuring the fusion gain.

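Average gradient (AG): The degree of clarity and sharpness of the fused image is given by the average gradient:

$$AG = \frac{1}{(p-1)(q-1)}\sum_{m=1}^{p-1}\sum_{n=1}^{q-1}\sqrt{\frac{\left[f(m+1,n)-f(m,n)\right]^2 + \left[f(m,n+1)-f(m,n)\right]^2}{2}} \qquad (2)$$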
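A NumPy sketch of the average gradient, assuming the common definition with forward differences over the (p-1)×(q-1) interior grid:

```python
import numpy as np


def average_gradient(f: np.ndarray) -> float:
    """Mean of sqrt((dx^2 + dy^2) / 2) over the (p-1) x (q-1) grid."""
    f = f.astype(float)
    dx = f[:-1, 1:] - f[:-1, :-1]   # horizontal difference f(m, n+1) - f(m, n)
    dy = f[1:, :-1] - f[:-1, :-1]   # vertical difference f(m+1, n) - f(m, n)
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))
```

A horizontal ramp f(m, n) = n has unit horizontal differences and zero vertical ones, so its average gradient is sqrt(1/2).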

Mutual information (MI): It measures the overall information present in the fused image with respect to the source images and is given by

$$MI = MI_{XF} + MI_{YF}, \qquad (3)$$

where

$$MI_{XF} = \sum_{m}\sum_{n} p_{XF}(m,n)\,\log_2\frac{p_{XF}(m,n)}{p_X(m)\,p_F(n)}$$

is the mutual information between source image X and fused image F, $p_X(m)$ and $p_F(n)$ are the marginal probability density functions of X and F, respectively, and $p_{XF}(m,n)$ is the joint probability density function of source image X and fused image F. Similarly, $MI_{YF}$ is the mutual information between Y and F.

And, represents the correlation coefficient between source image Y and fused image F.
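Mutual information is usually estimated from a joint intensity histogram. A minimal NumPy sketch, assuming a histogram estimator with 256 bins by default and base-2 logarithms (the paper does not state which estimator or bin count it used):

```python
import numpy as np


def mutual_information(x, f, bins=256):
    """MI between source x and fused f, estimated from a joint histogram (bits)."""
    joint, _, _ = np.histogram2d(x.ravel(), f.ravel(), bins=bins)
    p_xf = joint / joint.sum()               # joint probability
    p_x = p_xf.sum(axis=1, keepdims=True)    # marginal of X, shape (bins, 1)
    p_f = p_xf.sum(axis=0, keepdims=True)    # marginal of F, shape (1, bins)
    nz = p_xf > 0                            # skip empty bins (0 * log 0 = 0)
    return float(np.sum(p_xf[nz] * np.log2(p_xf[nz] / (p_x @ p_f)[nz])))


def fusion_mi(x, y, f, bins=256):
    """Fusion metric MI = MI_XF + MI_YF."""
    return mutual_information(x, f, bins) + mutual_information(y, f, bins)
```

For an image compared with itself, MI equals its entropy; for example, an image with four equally frequent gray levels gives 2 bits.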

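Standard deviation (SD): It indicates the spread of the data, that is, the variation of pixel intensity values with respect to the mean pixel intensity of the fused image:

$$SD = \sqrt{\frac{1}{pq}\sum_{m=1}^{p}\sum_{n=1}^{q}\left[f(m,n) - \mu\right]^2}, \qquad (4)$$

where $\mu$ is the mean intensity of the fused image f.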

To analyze the proposed method quantitatively, we compared its four metrics (SF, AG, MI and SD) with those of two other fusion methods: weighted average fusion (GFS)22 and discrete wavelet transform (DWT) based fusion23 (Table 2). The GFS-based method has the lowest MI and SD values and the DWT-based method has the lowest SF and AG values, whereas the CNN-based method has the highest values for all four metrics. Our method therefore yields the best image contrast (highest SD), the best clarity and sharpness (highest AG), the most information transferred from the source images to the fused image (highest MI) and the highest overall activity level in the fused image (highest SF). This shows the superiority of the proposed method over the two other methods on these metrics.

| Metric | Weighted average fusion | Discrete wavelet transform | Convolutional Neural Networks |
|---|---|---|---|
| Spatial frequency | 0.66 | 0.21 | 0.72 |
| Mutual information | 3.263 | 4.371 | 6.447 |
| Average gradient | 9.926 | 7.041 | 10.756 |
| Standard deviation | 13.991 | 16.621 | 21.415 |

Table 2 Quantitative analysis of the different CT and SPECT image fusion algorithms

Evaluation of the proposed method

Our proposed method was applied to 21 pulmonary SPECT/CT exams, and its results were compared with those obtained by the reference method (gamma camera).

Our evaluation consisted of three stages. We first evaluated the effectiveness of our method in detecting emboli, by exam and then by lobe. Finally, we evaluated the identification of the pathological lobes.

First, we built the confusion matrix for the evaluation by exam (Table 3); second, we built the confusion matrix for the evaluation by lobe (Table 4). A correct interpretation of a SPECT/CT exam is either a true-negative (TN) response (i.e., the correct decision that there is no PE) or a true-positive (TP) response (i.e., the correct detection of PE). Conversely, an incorrect interpretation is either a false-negative (FN) response (i.e., the PE is missed) or a false-positive (FP) response (i.e., PE is suggested although it does not exist).

| Reference method (rows) / Proposed method (columns) | Pulmonary embolism | Not pulmonary embolism | Total |
|---|---|---|---|
| Pulmonary embolism | 6 | 1 | 7 |
| Not pulmonary embolism | 2 | 12 | 14 |
| Total | 8 | 13 | 21 |

Table 3 Confusion matrix for the evaluation by SPECT/CT exam

| Reference method (rows) / Proposed method (columns) | Pulmonary embolism | Not pulmonary embolism | Total |
|---|---|---|---|
| Pulmonary embolism | 30 | 4 | 34 |
| Not pulmonary embolism | 6 | 65 | 71 |
| Total | 36 | 69 | 105 |

Table 4 Confusion matrix for the evaluation by lobe

The confusion matrices are presented in Tables 3 and 4; from them, we calculated the metrics presented in Table 5.

| Metrics | Accuracy | Precision | Specificity | Sensitivity | AUC |
|---|---|---|---|---|---|
| Values (by exam) | 86% | 75% | 86% | 86% | 0.86 |
| Values (by lobe) | 90.5% | 83.3% | 91.5% | 88.2% | 0.88 |

Table 5 Evaluation parameters

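$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \qquad (5)$$

$$\text{Precision} = \frac{TP}{TP + FP} \qquad (6)$$

$$\text{Specificity} = \frac{TN}{TN + FP} \qquad (7)$$

$$\text{Sensitivity} = \frac{TP}{TP + FN} \qquad (8)$$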
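The standard definitions of accuracy, precision, specificity and sensitivity can be checked against the confusion matrices. This sketch assumes that the rows of Tables 3 and 4 correspond to the reference method and the columns to the proposed method, which is the reading consistent with the values in Table 5 (for example, TP = 6, FN = 1, FP = 2 and TN = 12 by exam).

```python
def metrics_from_confusion(tp, fn, fp, tn):
    """Accuracy, precision, specificity and sensitivity from confusion-matrix counts."""
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": tp / (tp + fp),
        "specificity": tn / (tn + fp),
        "sensitivity": tp / (tp + fn),
    }


# Rows of Tables 3 and 4 = reference method, columns = proposed method.
by_exam = metrics_from_confusion(tp=6, fn=1, fp=2, tn=12)
by_lobe = metrics_from_confusion(tp=30, fn=4, fp=6, tn=65)
for name, m in (("exam", by_exam), ("lobe", by_lobe)):
    print(name, {k: round(100 * v, 1) for k, v in m.items()})
```

Rounded to one decimal, the by-lobe values are 90.5, 83.3, 91.5 and 88.2, matching Table 5; the by-exam values (85.7, 75.0, 85.7, 85.7) round to the reported 86%, 75%, 86% and 86%.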

From Table 5, 86% of patients with PE are correctly detected (sensitivity by exam), and 90.5% of lobes are correctly classified (accuracy by lobe), with 88.2% of lobes showing at least one perfusion defect correctly detected (sensitivity by lobe). In addition, we were able to correctly identify the suspected pathological lobes from the SPECT/CT images obtained by our method. Figure 5 shows two examples: a PE in the right middle lobe (Figure 5b) and a PE in the left lower lobe (Figure 5c).

This study aims at the early detection of pulmonary embolism, a very dangerous disease that is difficult to detect. We proposed a new method based on a CNN algorithm. We first evaluated the CNN-based fusion method, which gave the best values of the four metrics MI, SD, SF and AG, showing its superiority over the compared methods. Then, we detected and identified the suspected pathological lobes. At the lobe level, the validation of this method achieved an accuracy of 90.5%, a sensitivity of 88.2% and a specificity of 91.5%.

In this paper, we presented a new method to detect PE based on the fusion of SPECT and CT images using a deep Siamese Neural Network. The main novelty of this method is learning a CNN model that achieves a direct mapping between the source images and the focus map. First, we evaluated the effectiveness of the fusion method by comparing it with two methods from the literature, GFS and DWT; the fusion method used gave the best SF, MI, AG and SD values. Second, we evaluated the effectiveness of the method in detecting emboli (evaluation by exam and by lobe) and in identifying the pathological lobes. Our results, compared with those obtained by the expert, were satisfactory.

Authors wish to thank the team of the department of nuclear medicine at Salah Azaiez Hospital, Tunis, Tunisia, for their permission to employ SPECT/CT lung data.

H.R., M.A.C., and D.B.S. conceived and designed the study. H.R. analyzed and interpreted the data and wrote the manuscript. The authors read and approved the final manuscript.

Not applicable.

All data generated or analyzed during this study are included in this published article and references.

There is no conflict of interest.

©2021 Romdhane, et al. This is an open access article distributed under the terms of the, which permits unrestricted use, distribution, and build upon your work non-commercially.