PMP Estimation and uncertainty due to frequency factor

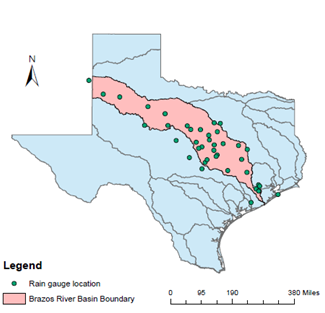

1-hour precipitation data for 39 raingauging stations was used from NCDC NOAA (https://www.ncdc.noaa.gov/cdo-web/) as shown in Figure 1. The stations had an average 30 years of record length. From the data of 1-hour duration the data for other durations 2, 3, 6, 12, and 24 hours were generated. Annual maximum precipitation series based on different durations were compiled for each station. The average mean precipitation values for different durations were 43 mm for 1 hour, 51 mm for 3 hour, 60 mm for 6 hour, 70.3 mm for 12 hour and 89.4 mm for 24 hour duration. Then, the basin-specific enveloping curve was constructed and uncertainty from its use was quantified. Frequency factor

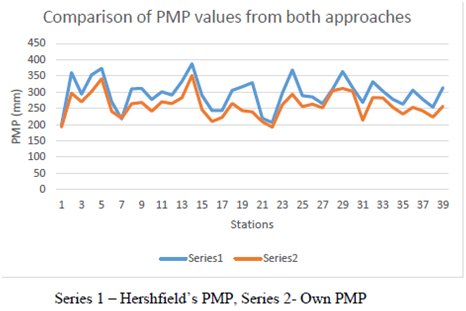

was calculated for 1, 2, 3, 6, 12, and 24 hour durations and for 39 gain gauging stations in Brazos River basin from the enveloping curve. Figure 2 shows the enveloping curve of km with mean of annual precipitation series. Figure 3 shows the enveloping curve of

based on 39 stations in the Brazos River basin and the enveloping curve provided by Hershfield for computing PMP on an hourly basis. From Figure 3, it is seen that both curves generally followed the same trends but did not match properly. Brazos River basin has a much smaller number of stations as compared with 2645 stations that Hershfield used, since the frequency factor markedly depends on the number of stations used. The enveloping curve specific for the Brazos River basin is lower than the Hershfield curve, which was constructed using some of the highest precipitation producing regions with long-term records. The Hershfield enveloping curve seems to give higher values of

as the mean increases. Hence, it is more conservative than the basic-specific one for one hour duration. The same can be said for other durations as the basin-specific curves followed the same trend and Hershfield’s

was higher for other durations than the basin-specific one. The same procedure was applied for 6-, and 24-hour durations and the same trend were observed. Using Hershfield’s curve rather than basin specific can increase

by 16% for 1 hour duration, 17.9% for 6 hour duration and 22.1% for 24 hour duration which corresponds to the uncertainty associated with the use of

values.

was also calculated by using the PMP values published in the HMR documents (HMR, 51). The range of PMP values varied from 863.6 mm (station at Pep) to 1198.8 mm (station at Houston Alife) for 24-hour duration. The value of

was calculated as:

(2)

Where

is the PMP values from the HMR documents. Using the PMP values and the mean and standard deviation of stations, the range of

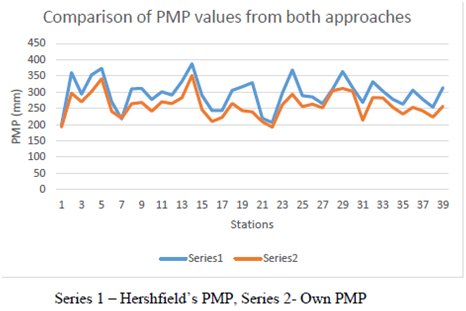

was from 22.2 to 26.6. It was too high with a narrow difference between the highest and lowest values. It is because the PMP values published in HMR are too high as compared to the average precipitation amount and the PMP estimated using basic-specific enveloping curve. This shows the significance of constructing the basin-specific enveloping curve for calculating PMP. PMP values were estimated for every duration and each station using both basin-specific curve and Hershfield’s curve. Figure 4 compares Hershfield’s PMP estimates against PMP estimates for Brazos River basin based on 1-hour duration. It was observed that for the 1-hour duration PMP values were 16.8% higher using Hershfield’s curve than using the basin-specific curve, 18.5% for 6-hour duration, and 23.4% for 24-hour duration which shows the uncertainty in the PMP estimates from the use of enveloping curve or using different values of it.

Figure 1 1-hour duration rain gauge station locations.

Figure 2 Enveloping curves of for different durations.Enveloping curves of for different durations.

Figure 3 Comparison of Hershfield’s enveloping curve of with Brazos River basin enveloping curve.

Series 1 – Basin-specific curve, Series 2- Hershfield curve

Figure 4 Comparison of Hershfield’s PMP estimates against PMP estimates for Brazos River basin based on 1-hour duration (mm).

Frequency analysis and Hazard rate

To quantify the uncertainty in PMP estimates, the best-fit probability distribution for the study area was determined. Using the same data of annual maximum precipitation, frequency analysis was performed using 24 alternative distributions that were Generalized Extreme Value distribution, Burr XII distribution, Dagum, Log-logistics (3 parameter), Pearson 5 (3 parameter), Generalized Gamma (4 parameter), Pearson 6 (4 parameter), Log-normal (3 parameter), Generalized Gamma (3 parameter), Burr (4 parameter), Fretchet (3 parameter), Pearson 6 (2 parameter), Generalized Beta of the second order (4 parameter), Gumbel max, Log-Pearson 3, Log Gamma, Johnson SB, Inverse Gaussian (3 parameter), Dagum (4 parameter), Inverse Gaussian (2 parameter), Log-logistics (2 parameter), Frechet (2 parameter), Pearson 5 (2 parameter), and Log-normal (2 parameter). Three goodness of fit (GOF) tests, Kolmogorov-Smirnov (K-S) test, Anderson-Darling (A-D) test, and Chi-square (C-S) test, were employed to check whether a hypothesized distribution function fitted the sample data (Chakravarti et al.,1967). The hypothesis for the GOF tests was: H0=The precipitation data followed the specific distribution; and H1=The precipitation data did not follow the specific distribution.

These tests were performed at the significance level (α=0.05) for choosing the best-fit probability distribution (Sharma and Singh, 2010). Q-Q plot and Root Mean Square Error (RMSE) were also used to find the best-fit probability distribution. Extreme precipitation data were fitted to all the distributions and parameters of the distributions were estimated by the maximum likelihood estimation and L-moments. The probability density functions (PDFs) were determined and plotted. Matlab and R-statistics were employed for fitting the probability distributions. A ranking system, based on the lowest test static value of GOF tests, was employed to determine the best-fit probability distribution. In the initial processing, all 24 common statistical distributions were used in this step. Some of the common distributions are shown in Table 1 along with their PDFs and CDFs. For each station and duration the test statistic values of Kolmogorov-Smirnov, Anderson Darling, and Chi-square were calculated for every distribution. For each of the three tests the distributions were ranked according to the lowest test statistic value. The distribution having the 1st rank was assigned a score of 24, 2nd rank distribution a score of 23, and so on. The total scores from the three tests of each distribution were added to see which distribution had the highest score, the second highest, and so on. At least 5 to 6 distributions were considered for further analysis. The stations were ranked according to the least RMSE value and best Q-Q plot (Here best means Q-Q plot is linear or the specified theoretical distribution is the correct model.). Sometimes the tests are also biased so it is helpful to see the PDFs of the distributions to see how well they fit the data at the right tail to make sure the results are consistent with the GOF tests or not. The PDFs of the selected 5 or 6 distributions were compared to see if our results were consistent with the PDF graph or not.

Distributions |

Probability density

function (PDF) |

Cumulative density function (CDF) |

Parameters |

Generalized Extreme Value (GEV) |

|

|

k = continuous shape parameter,

= continuous scale parameter (>),

= continuous location parameter,

|

Burr XII |

|

|

k = continuous shape parameter (>0),

= continuous shape parameter (>0),

= continuous scale parameter (>0) |

Dagum |

|

|

k = continuous shape parameter (>0),

= continuous shape parameter (>0),

= continuous scale parameter (>0) |

Log-Logistics (3parameter) |

|

|

= continuous shape parameter (>0),

= continuous scale parameter (>0)

= continuous shape parameter (>0), |

Pearson 5 (3 parameter) |

|

|

= continuous shape parameter (>0),

= continuous scale parameter (>0)

= continuous location parameter |

Generalized Gamma (4 parameter) |

|

|

= continuous shape parameter (>0),

= continuous scale parameter (>0)

= continuous location parameter

k = continuous shape parameter (>0) |

Log-normal (3 parameter) |

|

|

= continuous location parameter,

= continuous parameter (>0),

= continuous parameter |

Table 1 Probability density function and Cumulative density function of distributions along with parameters

In the last step, for those stations and durations for which the difference in the PDF graphs of selected distributions was not too much or there were contradicting results by observing the quantiles of the distributions with the observed values against the MSE and Q-Q plot results, the ranking system was used again. The top 5 or 6 distributions from step 1 were selected. The distributions were ranked according to the test statistic value from the K-S, A-D, C-S, RMSE tests and visually seeing Q-Q plots. A score of 5 or 6 was assigned to the best distribution for a particular test and so on. The distribution having the highest combined score from the 5 tests was regarded as the best distribution. After the best distribution was selected, it was analyzed to see which distribution fitted most of the stations and for different durations overall. The Burr XII distribution was chosen to be the best distribution for the Brazos River basin covering 30 to 40% of the stations for different durations. For other stations also, it was, in most of the cases, one of the top three best distributions. Hazard rate is defined as the instantaneous rate of failure for the survivors to time t during the next instant of time. In flood frequency analysis it can be defined as the probability of extreme flooding in an infinitesimally small time period between t and t+dt given that no flooding has occurred until time t or the rate of event occurrence per unit of time. It is not a density or probability but a measure of risk. As greater the hazard between times t and t1, greater is the risk of failure in this time interval. Hence, once the best-fit distribution was determined using different GOF tests it was seen how the hazard function varied with other distributions and durations to study more about the distribution, as it has more physical significance. As in case of agricultural lands, the hazard due to flooding will increase to a particular extent and then will become constant or can even decrease as the water will saturate to a certain limit and at that point no further damage would result, hence the hazard rate will tend to become constant. It was therefore decided to calculate the hazard rate for different distributions and durations to determine which distribution performed better. The hazard rate (h(t)) was calculated as22

(3)

where

is the probability density function or the probability that the value (failure or death) will fall in a specific interval and

is the cumulative distribution function.

can be defined as a survival function or the probability of surviving up to time t.

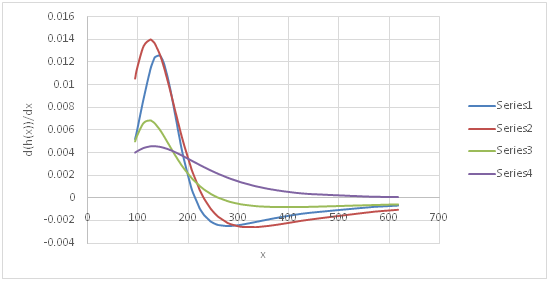

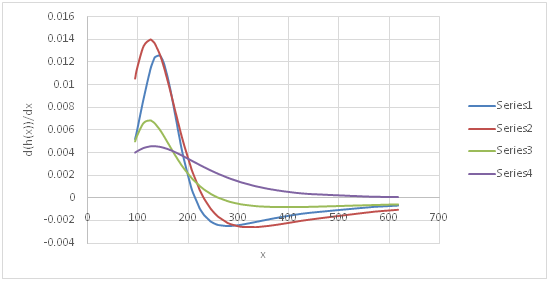

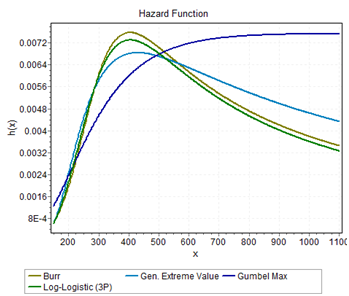

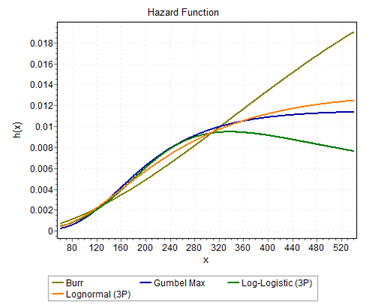

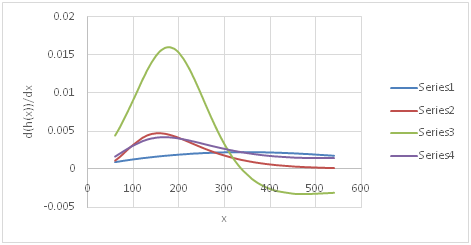

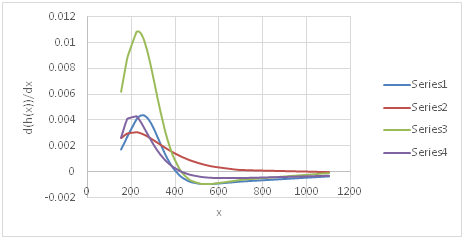

Based on equation (3), the hazard rate was calculated for different distributions and durations. For distributions with 2 parameters there was a general trend of increasing hazard rate. Figure 5 shows the hazard rate for 5 common distributions for 2-hour duration at station Flat. It is observed that Gumbel Max and Inverse Gaussian (2 parameter) had an increasing hazard rate which then tended to become constant, while the Burr XII and log-logistic (3 parameter) and GEV had an increasing hazard rate which then became constant and started decreasing. It makes sense physically, as station at Flat lies in Post Oak Belt in Texas where Prairie grasslands are scattered throughout the area, the damage rate due to flooding will increase to a certain extent and then will become constant and start decreasing. To determine how the hazard rate was changing, it was differentiated with respect to extreme precipitation x. Using the probability density function (PDF) and cumulative distribution function (CDF) of the distributions d(h(x))/dx was calculated. Figure 6 shows the rate of change of hazard rate for the same 2-hour duration at station Flat. The rate of change of hazard rate generally followed the same trend for all four distributions, except that the rate of change was non-negative for Gumbel distribution. It shows how quickly the hazard rate is increasing or decreasing with respect to extreme precipitation. To further study these characteristics it was also determined how hazard rate and rate of change of hazard rate varied in different climatic regions in Brazos River basin. There was no significant pattern, but it was observed that for stations near the Gulf there was an increasing hazard rate for most of the distributions. For stations away from the Gulf, the hazard rate followed the trend of increasing first, becoming constant and then decreasing. It can be due to the reason that the average annual rainfall decreases with increasing distance from the Gulf of Mexico (Singh and Hao, 2012).

Figure 5 Hazard rate for different distributions for 2-hour duration at station Flat.

Figure 6 Rate of change of hazard rate for different distributions for 2-hour duration at station Flat.

Series 1 – Burr, Series 2- Log logistics (3P), Series 3 – GEV, Series 4 – Gumbel max.

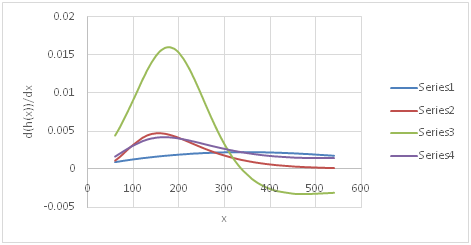

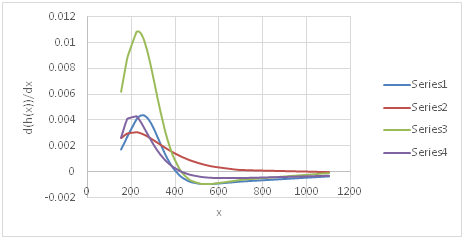

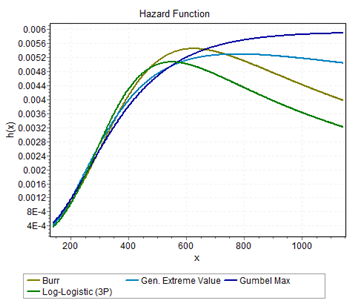

It may be noted that for stations close to the Gulf the histogram was smooth but had more variation. As the distance from the Gulf increased the histogram began to become sharp with less variation. Figure 7, Figure 8& Figure 9 show the hazard rate for different distributions at three stations, Lubbock (upstream), Coryell (middle), and Houston Addicts (downstream), for 24-hour precipitation. For higher durations of precipitation, the GEV distribution also began to reach a constant hazard rate. The rate of change of hazard rate was also calculated for the same distributions and durations. The log-logistic distribution consistently gave a higher rate of change and the Gumbel distribution gave a non-negative rate of change. Figure 10, Figure 11 & Figure 12 shows the rate of change of hazard rate for the same distributions and stations as above. The pattern varied across the study area but the Burr XII and GEV distributions performed better except in regions close to the High Plains division in which the maximum precipitation derives from thunderstorms during the summer season. Burr XII also performed better, based on different GOF tests as stated earlier.

Figure 7 Hazard rate for different distributions for 24-hour duration at station Coryell.

Figure 8 Hazard rate for different distributions for 24-hour duration at station Houston Alife.

Figure 9 Hazard rate for different distributions for 24-hour duration at station Lubbock.

Figure 10 Rate of change of hazard rate for different distributions for 4-hour duration at station Houston Addicts.

Series 1 – Burr, Series 2 - Gumbel max, Series 3 – - Log logistic (3P), Series 4 – GEV

Figure 11 Rate of change of hazard rate for different distributions for 24-hour duration at station Coryell.

Series 1 – Burr, Series 2 - Gumbel max, Series 3 – - Log logistic (3P), Series 4 – GEV.

Figure 12 Rate of change of hazard rate for different distributions for 24-hour duration at station Lubbock.

Series 1 – Burr, Series 2 - Gumbel max, Series 3 – - Log logistic (3P), Series 4 – GEV.

Uncertainty in PMP estimates due to sample mean and sample standard deviation

To quantify the uncertainty of PMP due to sample mean and sample standard deviation, a procedure developed by Salas et al.,23 was followed. To quantify the uncertainty required the calculation of variance (

) of PMP estimates which was calculated as16,24

(4)

Where

the variance of the mean is,

is the variance of standard deviation and

is the covariance of mean and standard deviation. The requirement was to calculate

and

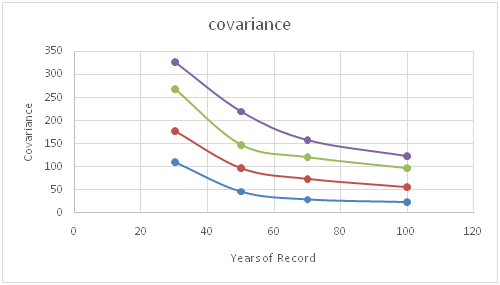

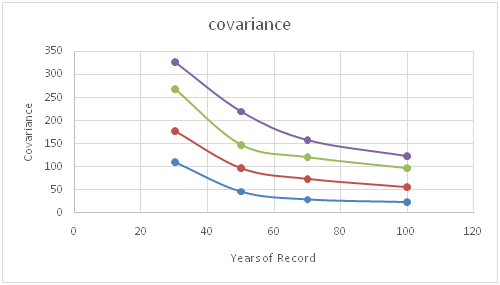

. To calculate it,16 used Monte Carlo simulation experiments assuming Gumbel distribution (GEV-Type I) as the underlying distribution of the process under construction. Then, for easy application, the variance and covariance terms were determined based on the normal approximation, and a correction factor used for obtaining them for the Gumbel distribution. Furthermore, this study was extended by assuming the log-Gumbel (GEV-Type II) distribution.23 However, for Brazos River basin using precipitation data the best fit distribution was Burr XII, hence, a different methodology was developed which would be suitable for the study area.

The values of first shape parameter, second shape parameter

and scale parameter

of the Burr XII distribution were used along with Monte Carlo analysis. The value of the first shape parameter was in the range of 3.5 to 6.5 and the value of the second shape parameter

was in the range of 0.5 to 1.5 with a few exceptions like for 24-hour extreme precipitation at Groesbeck the value was 3.16, and for 1-hour extreme precipitation at Jeweet the value was 3.33. But the value of scale parameter

varied readily from 150 to 400 and was even around 500 in a few cases. The value of

tended to increase for higher duration of extreme precipitation. Since the values of shape parameters had narrower ranges, the average values of

= 4.8 and

= 1 were used by taking the average of all the values. 1000 samples of different sizes n = 30, 50, 70, 100 and different scale parameter values of 150, 200, 300 and 400 were simulated and then the values of

and

, i = 1,...,1000, were computed.

and

were estimated, based on the pair of 1000 values, as shown in Table 2. It was observed that with increasing scale parameter

and

increased, but decreased with increasing record length (n). It can be expected because by increasing the scale parameter the spread increases, hence that leads to a larger variance.

Record length (n) |

|

|

|

30 |

150 |

250 |

110 |

50 |

150 |

140 |

45 |

70 |

150 |

70 |

28 |

100 |

150 |

45 |

22 |

30 |

200 |

400 |

178 |

50 |

200 |

290 |

97 |

70 |

200 |

150 |

74 |

100 |

200 |

108 |

56 |

30 |

300 |

510 |

269 |

50 |

300 |

373 |

147 |

70 |

300 |

243 |

120 |

100 |

300 |

170 |

96 |

30 |

400 |

580 |

327 |

50 |

400 |

440 |

219 |

70 |

400 |

325 |

157 |

100 |

400 |

218 |

122 |

Table 2 Values of

and

for different record lengths and scale parameters

To check whether the values of

= 4.8 and

= 1 would give reliable results, simulations were also run for the shape parameter values

= 5.3 and

= 0.8. These values were the average values for 24 hour duration extreme precipitation. The scale parameter value of 150 for the first simulation and 300 for the second simulation were used. It was because 150 was the least scale parameter value and 300 was the average value for 24 hour duration extreme precipitation series. The results for different record lengths of 30, 50, 70, and 100 years were not very different from the one using the values of

= 4.8 and

= 1, as shown in Table 3. Hence, it was decided to use the values of

= 4.8 and

= 1 as the average values for calculating

and

. Then, a nomograph of varying scale parameter

and varying record length was constructed, as shown in Figure 13 & Figure 14. From these figures the values of

and

were determined for all the stations with different durations having the Burr XII distribution as the best distribution. Then,

was determined based on equation (4).

Record length |

|

|

|

30 |

150 |

263 |

121 |

50 |

150 |

168 |

51 |

70 |

150 |

87 |

32 |

90 |

150 |

56 |

25 |

30 |

300 |

514 |

261 |

50 |

300 |

401 |

153 |

70 |

300 |

256 |

128 |

90 |

300 |

179 |

89 |

Table 3 Simulation results using

= 5.3,

= 0.8, changing record length and scale parameter

As in Salas et al.,16 the expected value of PMP estimate

was calculated as:

(5)

Where

is the expected value of the sample mean and

is the expected value of the sample standard deviation. It can be shown24 that:

(6)

and

(7)

Where

and

are the population mean and standard deviation,

is the incomplete gamma function with argument a, and n is the record length. Using equations (6) and (7) the expected value of PMP value was calculated as:

(8)

Where

and

are the population mean and standard deviation which were replaced by the corresponding sample estimates after appropriate adjustments for outliers as needed.

is the incomplete gamma function defined as:

(9)

Where a is the argument. Following Salas et al.,16 the design risk PMP value

was calculated as:

(10)

Where

is the standard deviation of PMP estimates, and c>1 is the parameter.

is the value of uncertain PMP value P whose distribution is not known but only the estimates of its mean

and standard deviation

are known. Table 4 compares 1-hour PMP values at Thompson, Texas, obtained using the statistical method and the method considering the Burr XII distribution for calculating

and

. At Thompson, for 1-hour duration of extreme rainfall the value of adjusted

and

was 50.28 mm and 22.18mm. The value of

obtained from the enveloping curve was 7.86. The best-fit distribution at the station was the Burr XII distribution with scale parameter

=165.43 and record length (n) = 52. From Figure 6 & Figure 7, the values of

and

were obtained as 190 and 50. Using equations (8) and (9) and

were 223.29 mm and 3218.1 mm, respectively, and

was calculated by taking the square root of

. For different values of c the design risk PMP

was calculated. With c = 1 and +sign

gave = 251.88mm. The value of c = 3 which was a more conservative estimate than using c=1 which gave

309.06mm. Using Hershfield’s original method along with basin-specific enveloping curve the PMP estimate P was 253.45mm after multiplying the PMP value with 1.13 for fixed observational time interval. The PMP values were also calculated using the Salas et al.,16 method. It gave a PMP value of 241.2mm and using normal distribution and it gave a PMP value of 231.65mm. Results showed some differences between PMP values using all the three methods. It can be concluded that a bigger value of PMP must be selected, considering the associated exceedance probabilities (risk). The analysis was performed for other durations and stations having the Burr XII distribution as the best distribution. Table 5 shows the 24-hour design risk values for different values of c. As in Salas et al.16 Chebyshev’s inequality25 was used to have a bound of the probability on PMP estimates based in equation (7):

(11)

Using the same data for 1-hour PMP values at Thompson, Texas, it can be shown that:

For c = 1

For c = 2

For c = 3

Hershfield PMP |

Design Risk PMP using

|

c= 1 |

c= 2 |

c = 3 |

253.45 |

251.88 |

280.4 |

309.06 |

Table 4 Comparison of Hershfield PMP and Design Risk PMP values at Thompson, TX, for 1-hour duration (mm)

Station |

Original PMP |

Design risk PMP |

|

c=1 |

c=2 |

c=3 |

Albine |

421.81 |

462.52 |

504.54 |

546.57 |

Cherroke |

401.33 |

457.07 |

515.71 |

574.34 |

Coryell |

420.5 |

470.26 |

522.05 |

573.85 |

Cranfills |

432.35 |

470.78 |

511.43 |

552.08 |

Cresson |

408.29 |

454.25 |

501.61 |

548.98 |

Evant |

450.06 |

510.11 |

571.94 |

633.76 |

Groesbeck |

364.26 |

410.8 |

459.53 |

508.25 |

Jayton |

470.2 |

507.18 |

545.68 |

584.19 |

Jeweet |

447.98 |

491.33 |

536.74 |

582.15 |

Kopperl |

400.79 |

441.78 |

484.05 |

526.33 |

Spicewood |

437.85 |

488.37 |

541.08 |

593.79 |

Stillhouse |

413.6 |

459.6 |

507.58 |

555.56 |

Waco |

362.77 |

413.82 |

466.53 |

519.24 |

Wheelock |

387.32 |

425.35 |

464.67 |

503.99 |

Bay City |

414.97 |

449.56 |

485.98 |

522.4 |

Houston Alife |

416.43 |

473.28 |

505.89 |

538.51 |

Santa Anna |

419.52 |

457.44 |

496.58 |

535.72 |

Table 5 24-hour Design risk PMP values (mm) values up to only two decimal places

Figure 13 N=57; Epidemiological distribution of the pathological fractures, traumatic fractures, and nonunion.

Series 1: = 150, Series 2: = 200, Series 3: = 300, Series 4: = 400

Figure 14 Covariance of mean and standard deviation as a function of record length.

Series 1: = 150, Series 2: = 200, Series 3: = 300, Series 4: = 400

It shows that there is less than 11% probability that the PMP estimate P is bigger than 309.06mm and smaller than 137.52 for c=3. The probability bound suggested that the value of 251.88 mm had a higher chance to be exceeded because of the uncertainty associated with the estimates of

,

and record length of 52. The value of 309.06 mm corresponded to a more conservative estimate that was less likely to be exceeded because of the uncertainty associated with

and

. It took into account the effect of uncertainty and the associated probability of exceedance.

Uncertainty in PMP estimates using first order second moment analysis

The first order second moment (FOSM) analysis was used to determine the uncertainty introduced in the PMP estimates due to various parameters. Let PMP be a function of mean, standard deviation and frequency factor. Then,

,

Then, in terms of PMP formula,

can be expressed as:

(12)

Where

=The mean of PMP estimates,

=The standard deviation of PMP estimates, and

=The frequency factor of PMP estimates.

From Taylor series expansion up to second orders about the mean it can be shown that:

(13)

Taking the first order approximation the expected value of PMP estimates were determined, based on26

where

and

is the mean value of mean of PMP estimates,

is the mean value of standard deviation, and

is the mean value of frequency factor. The variance of PMP due to each random component around the mean was calculated as:

(14)

Where, xi, i = 1…k, are random variables and

is the standard deviation of each component.

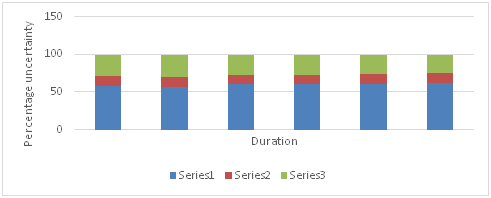

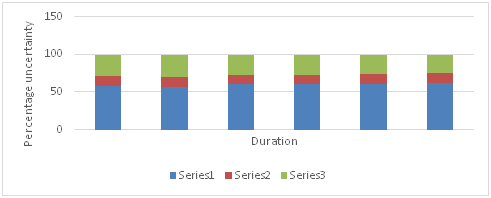

The expected value of PMP estimates for 1-hour duration was 276mm with the standard deviation of 32mm due to mean, 22mm due to frequency factor, and 16 mm due to standard deviation. For the 6-hour duration the expected value was 346mm with the standard deviation of 39mm due to mean, 26.2mm due to frequency factor, and 17.9mm due to standard deviation. For 24-hour duration the expected value was 423mm with the standard deviation of 45.3 due to mean, 29.4mm due to frequency factor, and 21.4mm due to standard deviation. To determine the relative contribution of each random component to the total uncertainty of PMP, the coefficient of variation was calculated as:

(15)

Where the term

of each random component was calculated for the total uncertainty of PMP estimates. It was observed that for 1-hour duration 58% uncertainty was introduced due to mean, 27% due to frequency factor and 14% due to standard deviation. For 6 hour duration the uncertainty was 60.4% due to mean, 27.2% due to frequency factor and 12.5% due to standard deviation. For 24-hour it was 60.95% due to mean, 25.5% due to frequency factor and 13.5% due to standard deviation. Figure 15 shows the relative contribution of each component to uncertainty for different durations of 1, 2, 3, 6, 12 and 24 hours. It can be observed that the relative contribution to uncertainty for different durations was almost the same with the maximum uncertainty introduced due to the mean of annual maximum precipitation series.

Figure 15 Relative uncertainty of each component to the total uncertainty in PMP estimates.

Series 1- Mean, Series 2 – Standard deviation, Series 3- Frequency factor

Data collection and manipulation for Risk analysis of extreme precipitation

The flood damage data for Harris County was requested from FEMA which maintains the National Flood Insurance Program (NFIP). The data comprised the flood event, amount of damage in dollars for a particular flood event on a particular area which was characterized by 5 digit zip codes, the date of the event and the amount paid by NFIP. The flood events were from the period of year 1978 to 2002. In the Harris County there were two rain gauges Houston Alife and Houston Addicts. For gathering precipitation events at the two stations associated with the flood event hourly precipitation data from NCDC NOAA was used. To account for the effect of wet catchment before a storm, antecedent precipitation index (API) was used. Wet catchment and heavy rain can lead to flooding. API was calculated as27

(16)

Where

is the Antecedent Precipitation Index for day n,k is the empirical decay factor less than one, and

is the precipitation for day n. The catchment wetness decline each day by the factor k. API goes up again by any rain. A value of 0.90 was used for k.4

Precipitation events associated with the flood date were selected. Point rainfall estimates (rainfall depth reading at the station) covers an area of 25×25km2 area and rainfall events which occurred at those stations were taken. There were other stations in the county but it was seen from the precipitation database and storm record that only those events which had storm center across the location were taken. It may be noted that two precipitation events were considered independent when there was a certain dry period in between without or with minor precipitation. Urban drainage systems have a lagged response to precipitation and need a certain time to restore the equilibrium state. The flood damage can be completely related to a particular precipitation event if systems are in equilibrium state before a precipitation event. A typical time for sewer systems to restore equilibrium state is between 10 and 20hours.20 hence, the precipitation events were selected that had at least 10- to 12-hour time difference between them. Also, the precipitation amount less than 1 mm/hour was treated as no precipitation. That prevented unrealistic long precipitation events to exist because of very small precipitation amounts between precipitation events. The damage amounts in (US) dollars for the flood events were arranged and adjusted for Consumer Price Index Inflation.28 The damage data was given for zip areas in Harris County; hence, for calculating the total damage due to a precipitation event the damages in all the zip areas up to which the event extended were added. As a point rainfall estimate is applicable to a 25×25km area, the zip areas which covered that area were also used for damage analysis. A weighted average function was developed, depending upon the amount of precipitation for the rain gauges, the duration of precipitation and the closeness of zip area to the rain gauge. The total damage values were distributed among the zip areas for precipitation events occurring at both the stations and were added to have the total damage due to a particular precipitation event.

Estimation of risk and assessment of damage

The exceedance probabilities were calculated for precipitation events associated with flood events from frequency analysis. The expected loss or risk associated with a precipitation event was calculated as (11):

(17)

Where Ei is the expected loss for a given event, pi is the probability of exceedance of a precipitation event, and Li is the associated loss.

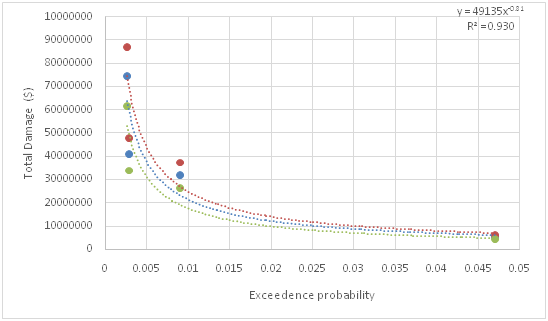

Table 6 shows the exceedance probabilities of precipitation events, total damage and the expected loss or the associated risk in a given year for Houston Alife station. As can be seen, the expected loss due to precipitation events was very high. Due to the limitation of data, there were not many precipitation events of different durations so that the probability of exceedance can be correlated with damage amount. The exceedance probability was plotted against the total damage for 12-hour flood events at Houston Addicts station and 6-hour flood events at Houston Alife station. Figure 16 & Figure 17 show the correlation curves for both stations along with the fitted regression equation. The exceedance probability of PMP was fitted to the equations for both stations. Table 7 shows the exceedance probability of PMP value and total damage. As can be seen, the total damage amount is very high. The damage due to a single PMP event of 12-hour duration can be as high as 2 billion dollars. It shows how much damage a single PMP event can cause. It may be noted that more events would have given better results.29‒32

Total damage (Dollars) |

Exceedance probability |

Expected loss (Dollars) |

5273021 |

0.047 |

247832 |

2786696 |

0.063 |

175561.8 |

74343336 |

0.0026 |

193292.7 |

16499400 |

0.02395 |

395160.6 |

7264661 |

0.02798 |

203265.2 |

27063964 |

0.00795 |

215158.5 |

1194753 |

0.1598 |

190921.5 |

1691062 |

0.07746 |

130989.6 |

31907133 |

0.009 |

287164.2 |

268499.5 |

0.0705 |

18929.2 |

9214725 |

0.00808 |

74454.9 |

5260444 |

0.0231 |

121516.3 |

40911349 |

0.0029 |

118642.9 |

45063692 |

0.00226 |

101843.9 |

6356198 |

0.01782 |

113267.5 |

12825956 |

0.01647 |

211243.5 |

9035821 |

0.02222 |

200775.9 |

1221863 |

0.16268 |

198772.7 |

20373836 |

0.0094 |

191541.1 |

Table 6 Expected loss due to precipitation events

Station |

Duration (hour) and exceedance probability of PMP event |

Expected damage (Dollars) |

Duration |

Probability |

Houston Alife |

12 |

0.00003 |

2.43E+09 |

Houston Addicts |

6 |

0.00064 |

2.03E+08 |

Table 7 Expected damage due to PMP events in both stations

Figure 16 Correlation curve between exceedance probability of PMP and total damage for 12 hour duration precipitation at Houston Alife.

Figure 17 Correlation curve between exceedance probability of PMP and total damage for 6 hour duration precipitation at Houston Addicts.