eISSN: 2576-4500

Research Article Volume 6 Issue 3

Department of Pure and Applied Sciences, University of Urbino Carlo Bo, Italy

Correspondence: Federico Sabbatini, University of Urbino, Via S.Chiara, Urbino, Italy

Received: July 15, 2022 | Published: July 26, 2022

Citation: Sabbatini F, Grimani C. Symbolic knowledge extraction from opaque predictors applied to cosmic-ray data gathered with LISA Pathfinder. Aeron Aero Open Access J. 2022;6(3):90-95. DOI: 10.15406/aaoaj.2022.06.00145

Machine learning models are nowadays ubiquitous in space missions, performing a wide variety of tasks ranging from the prediction of multivariate time series to the detection of specific patterns in the input data. The adopted models are usually deep neural networks or other complex machine learning algorithms whose predictions are opaque, i.e., human users cannot understand the rationale behind them. Several techniques in the literature combine the impressive predictive performance of opaque machine learning models with human-intelligible explanations of their predictions, for instance symbolic knowledge extraction procedures.

This paper reports the results of different knowledge extractors applied to an ensemble predictor capable of reproducing cosmic-ray data gathered on board the LISA Pathfinder space mission. The readability/fidelity trade-off of the extracted knowledge is also discussed.

Keywords: LISA Pathfinder, ensemble regressor, explainable AI, symbolic knowledge extraction

Abbreviations: GCR, galactic cosmic ray; LPF, LISA Pathfinder; SKE, symbolic knowledge extraction

Data gathered by space missions and ground experiments are increasingly used to build training data sets for machine learning algorithms. These tools serve different objectives, such as pattern recognition and variable prediction. Recent examples are the automatic detection of interplanetary coronal mass ejections,1 the prediction of the North-South component of the magnetic field embedded within interplanetary coronal mass ejections2 and the prediction of global solar radiation.3 Another powerful use of machine learning models is the prediction of data for space missions that are no longer active, using the data taken by an experiment during its lifetime as the training set. An example is the galactic cosmic-ray (GCR) data gathered on board the European Space Agency LISA Pathfinder (LPF) mission, which ended in 2017. The large amount of available data makes it possible to train machine learning models to reproduce the observed GCR flux variations on the basis of contemporaneous and preceding observations of interplanetary medium, magnetic field and plasma parameters. The resulting models may be even more valuable if human-explainable knowledge is provided together with the output predictions. This goal may be achieved by means of symbolic knowledge-extraction (SKE) techniques, explicitly designed to explain the behaviour of machine learning models in human-interpretable formats.

In this paper we report the results of two SKE techniques, namely CART and GridEx, applied to an ensemble predictor reproducing the GCR data gathered on board LPF. We focus in particular on the readability/fidelity trade-off, showing that high degrees of human intelligibility can be obtained for the predictions, but at the expense of predictive performance.

The LISA Pathfinder mission

LISA Pathfinder4 was a European Space Agency mission aimed at testing whether the technology for detecting gravitational waves in space with interferometers was mature. The mission achieved exceptional results, demonstrating the feasibility of placing two free-falling masses in space with a residual acceleration smaller than a millionth of a billionth of the gravitational acceleration. LPF was the precursor of the scientific mission LISA,5 whose goal will be the detection of supermassive black-hole coalescences. The LISA mission is scheduled to be launched in 2037.

LPF was launched at the end of 2015 from Kourou (French Guiana) on board a Vega rocket. It reached its final orbit around the Lagrangian point L1 in January 2016, and the mission ended in July 2017. We recall that the L1 point lies 1.5 million km from Earth in the Earth-Sun direction. The orbit was inclined by 45 degrees with respect to the ecliptic plane, and the satellite took approximately six months to complete it. The minor and major axes of the LPF orbit were about 0.5 and 0.8 million km, respectively. The spacecraft rotated around its own axis with a period of six months.

LPF was equipped with a particle detector to monitor the flux of GCRs and of solar particles energetic enough to traverse the spacecraft and reach the test masses. The test masses were cubes of platinum and gold, penetrated and charged by protons and ions with energies > 100 MeV n⁻¹. Test-mass charging induced spurious forces on the test masses.6 Monte Carlo simulations were used to study this process before the mission launch.7–9 The charge noise was periodically controlled by discharging the test masses with ultraviolet light beams.10 The LPF particle detector allowed the GCR integral flux to be observed with a nominal statistical uncertainty of 1% on hourly binned data.

GCR flux short-term variations observed with LISA Pathfinder

GCR flux short-term variations last less than the solar rotation period (27 days) and are associated with the passage of magnetic structures of solar or interplanetary origin. GCR flux is generally anti-correlated with increasing solar wind speed and interplanetary magnetic field amplitude. As a consequence, GCR flux, interplanetary magnetic field, solar wind plasma and geomagnetic activity indices show quasi-periodicities of 27, 13.5 and 9 days, corresponding to the solar rotation period and its higher harmonics.11,12 A quantitative assessment of the correlation between GCR flux depressions and increases in solar wind speed and/or interplanetary magnetic field intensity is still missing, since the evolution of each short-term depression is unique and may differ even under similar interplanetary conditions. The particle detector hosted by LPF made it possible to study GCR short-term flux variations during Bartels rotations 2490 through 2509 (from February 18th, 2016 through July 18th, 2017). We recall that Bartels rotations are 27-day periods defined as complete apparent rotations of the Sun viewed from Earth. Day 1 of rotation 1 is arbitrarily fixed to February 8, 1832.
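The rotation count defined above can be computed directly from a calendar date. The following Python sketch assumes whole-day resolution and exactly 27-day rotations starting on February 8, 1832; the function names are hypothetical. Applied to the first and last days of the LPF observation window, it returns the rotation range quoted above.

```python
from datetime import date, timedelta

# Day 1 of Bartels rotation 1, as stated in the text.
BARTELS_EPOCH = date(1832, 2, 8)
ROTATION_DAYS = 27


def bartels_rotation(day: date) -> int:
    """Return the Bartels rotation number containing the given calendar day."""
    elapsed_days = (day - BARTELS_EPOCH).days
    # Rotation 1 covers elapsed days 0..26, rotation 2 covers 27..53, and so on.
    return elapsed_days // ROTATION_DAYS + 1


def rotation_start(rotation: int) -> date:
    """Return the first day of the given Bartels rotation."""
    return BARTELS_EPOCH + timedelta(days=(rotation - 1) * ROTATION_DAYS)


# First and last days of the LPF observation window quoted in the text.
print(bartels_rotation(date(2016, 2, 18)))  # 2490
print(bartels_rotation(date(2017, 7, 18)))  # 2509
```

Note that the observation window starts partway through rotation 2490, so `rotation_start(2490)` falls before February 18th, 2016.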

For each Bartels rotation, GCR percent variations are compared with contemporaneous observations of interplanetary magnetic field intensity and solar wind plasma, in order to focus on recurrent periodicities consistent with the solar rotation period and its higher harmonics.

Symbolic knowledge extraction

Machine learning models are currently adopted for a wide variety of tasks, since they exhibit impressive predictive performance.13 These models usually require a prior training phase, during which they learn from training data some kind of generalised knowledge to be used to draw predictions. A large subset of machine learning predictors store the acquired knowledge in the form of internal parameters (i.e., sub-symbolically), making it difficult for human users to understand the process leading to the model predictions. These predictors are commonly defined as opaque, or black boxes.14

Some critical applications (e.g., those having great impact on human lives) may benefit from the adoption of decision support systems; however, these contexts require human awareness of the system's internal behaviour. For this reason, systems based on opaque models cannot be fully trusted, even if they provide accurate suggestions/predictions. Amongst the various solutions,15,16 the explainable artificial intelligence community proposes SKE methods aimed at explaining the internal functioning and/or the outputs of opaque models.17 One of the available techniques to achieve explainability is the creation of a surrogate model, that is, a non-opaque predictor able to mimic the opaque one. In this case, the opaque predictor is called the underlying model. Critical applications that benefit from SKE include, for instance, medical diagnosis,18,19 credit-risk evaluation20–22 and credit card screening.23
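The surrogate idea can be illustrated with a toy example: an opaque function is queried as an oracle to label random inputs, and a one-split regression tree (a decision stump) is fitted to those labels, so that its single human-readable rule mimics the black box. The black-box function, the sample sizes and all names below are hypothetical; fidelity is measured as the mean absolute error between surrogate and black-box outputs.

```python
import random
import statistics


def black_box(x: float) -> float:
    """Hypothetical opaque predictor: a step with a slight slope."""
    return (3.0 if x > 0.6 else 1.0) + 0.1 * x


def fit_stump(xs, ys):
    """Fit a depth-1 regression tree by minimising the sum of squared errors.

    Returns (threshold, left_value, right_value): predict left_value when
    x <= threshold, right_value otherwise.
    """
    pairs = sorted(zip(xs, ys))
    best = None
    for i in range(1, len(pairs)):
        left = [y for _, y in pairs[:i]]
        right = [y for _, y in pairs[i:]]
        mean_l, mean_r = statistics.fmean(left), statistics.fmean(right)
        sse = sum((y - mean_l) ** 2 for y in left) + sum((y - mean_r) ** 2 for y in right)
        if best is None or sse < best[0]:
            threshold = (pairs[i - 1][0] + pairs[i][0]) / 2
            best = (sse, threshold, mean_l, mean_r)
    return best[1], best[2], best[3]


rng = random.Random(0)
train_x = [rng.random() for _ in range(200)]
# Labels come from the black box used as an oracle, not from ground truth.
train_y = [black_box(x) for x in train_x]
threshold, low, high = fit_stump(train_x, train_y)


def surrogate(x: float) -> float:
    """Human-readable rule: if x <= threshold then low else high."""
    return low if x <= threshold else high


test_x = [rng.random() for _ in range(1000)]
fidelity_mae = statistics.fmean(abs(surrogate(x) - black_box(x)) for x in test_x)
baseline = statistics.fmean(train_y)  # a single constant, for comparison
baseline_mae = statistics.fmean(abs(baseline - black_box(x)) for x in test_x)
print(f"if x <= {threshold:.3f} then {low:.2f} else {high:.2f}")
print(f"fidelity MAE: {fidelity_mae:.3f} (constant baseline: {baseline_mae:.3f})")
```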

CART24 and GridEx25 are examples of algorithms applicable to black-box models. The former induces a decision tree whose internal nodes are conditions on the input variables and whose leaves are output predictions. Each path from the tree root to a leaf represents a human-intelligible rule mimicking the underlying model predictions. GridEx, on the other hand, performs a hyper-cubic partitioning of the input feature space in order to find subregions of instances whose associated output predictions are similar. Each hyper-cubic region is then described in terms of the input variables (i.e., hyper-cube sides correspond to variables whose values lie in specific intervals) and is associated with an output value. The output value for a hyper-cube is calculated by averaging the predictions provided by the underlying model for the training data belonging to the hyper-cube. Thus, the descriptions are human-understandable and may be used to draw interpretable predictions.
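A single, non-adaptive level of the hyper-cubic partitioning described above can be sketched in a few lines: each feature range is cut into equal-width intervals, and every non-empty cube becomes a rule whose output is the mean oracle prediction of the samples it contains. This is a simplified illustration, not the GridEx implementation; the oracle, the bounds and all names are hypothetical.

```python
import statistics


def grid_rules(samples, oracle, bounds, splits):
    """Partition the input space into a regular grid of hyper-cubes.

    Each non-empty cube becomes a rule: its per-feature intervals plus the
    mean oracle prediction of the samples inside it.
    bounds: [(low, high), ...] per feature; splits: intervals per feature.
    Returns (rules, cube_of): rules maps a cube index to
    (intervals, mean_prediction); cube_of locates the cube of an input.
    """
    def cube_of(x):
        index = []
        for value, (low, high) in zip(x, bounds):
            width = (high - low) / splits
            i = int((value - low) // width)
            index.append(min(max(i, 0), splits - 1))  # clamp values on the edges
        return tuple(index)

    groups = {}
    for x in samples:
        groups.setdefault(cube_of(x), []).append(oracle(x))

    rules = {}
    for index, predictions in groups.items():
        intervals = [
            (low + i * (high - low) / splits, low + (i + 1) * (high - low) / splits)
            for i, (low, high) in zip(index, bounds)
        ]
        rules[index] = (intervals, statistics.fmean(predictions))
    return rules, cube_of


def oracle(x):
    """Hypothetical underlying model."""
    return x[0] + x[1]


samples = [(0.25, 0.25), (0.75, 0.25), (0.25, 0.75), (0.75, 0.75), (0.1, 0.1)]
rules, cube_of = grid_rules(samples, oracle, bounds=[(0.0, 1.0), (0.0, 1.0)], splits=2)
for intervals, value in rules.values():
    print(intervals, round(value, 3))
# Interpretable prediction for a new input: look up the rule of its cube.
print(rules[cube_of((0.9, 0.6))][1])
```

In this sketch, an input falling in a cube that contained no samples is not covered by any rule.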

In this work we rely on the CART and GridEx implementations included in the PSyKE framework.26–28 PSyKE is a Python library providing several interchangeable SKE algorithms. Explainability in PSyKE is achieved via the extraction of rules in Prolog syntax.
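To illustrate what a rule in Prolog syntax can look like, the sketch below renders an interval rule as a Prolog clause. The predicate name, the variable list and the numeric bounds are hypothetical, and the format is only an approximation of what an extractor might output, not PSyKE's exact representation.

```python
def to_prolog(head, variables, conditions, output):
    """Render an interval rule as a Prolog clause.

    conditions maps a variable name to (low, high); None marks an open bound.
    The extra last argument Y carries the rule output.
    """
    body = []
    for var, (low, high) in conditions.items():
        if low is not None:
            body.append(f"{var} >= {low}")
        if high is not None:
            body.append(f"{var} < {high}")
    body.append(f"Y = {output}")
    args = ", ".join(list(variables) + ["Y"])
    return f"{head}({args}) :- {', '.join(body)}."


clause = to_prolog("gcr_decrease", ["GCR0", "V", "B1"],
                   {"GCR0": (None, 1.5), "V": (450.0, None)}, 0.8)
print(clause)
# gcr_decrease(GCR0, V, B1, Y) :- GCR0 < 1.5, V >= 450.0, Y = 0.8.
```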

Explaining an ensemble model for the LPF GCR data

Since the performance of knowledge extractors usually depends on the specific task at hand and there is no universally best choice, CART and GridEx have been compared, and the comparison is reported here. Both algorithms have been applied to the same ensemble model reproducing the LPF GCR data. Information about the training data set and the ensemble predictor is also reported in the following. The design choices for the data set creation and the predictor hyper-parameters have been optimised and are described in another work, not yet published.

Data set

A data set has been created to train the ensemble predictor and the extractors. Since GCR flux variations show a strong correlation with solar wind speed and interplanetary magnetic field intensity, these parameters have been used as data set input variables. In particular, GCR observations have been temporally aligned with those of magnetic field and solar wind, then for each time instant the following input variables have been selected:

The data set has been completed by adding the output variable, that is, the GCR flux decrease observed by LPF at the considered instant with respect to the flux value 9 days before. The data set encompasses all the available observations between the start and the end of LPF operations, for a total of about 11000 instances.
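A minimal sketch of how one data set instance could be assembled from temporally aligned hourly series, using the variable names adopted for the extracted rules (GCR0, V, Vi, B1). The number of 36-hour speed windows (`n_windows`) and the sign convention of the output (positive percent values for a flux decrease) are assumptions; the function name is hypothetical.

```python
import statistics

HOURS_9_DAYS = 9 * 24  # lag of the reference flux value, in hourly bins


def build_instance(t, flux, speed, field, n_windows=3):
    """Build one (features, target) pair at hourly index t.

    flux, speed and field are temporally aligned hourly series.
    n_windows (number of 36-hour speed windows) is an assumed value.
    """
    if t < max(HOURS_9_DAYS, 36 * n_windows, 24):
        raise ValueError("not enough history before index t")
    gcr0 = flux[t - HOURS_9_DAYS]  # GCR flux 9 days before t
    features = {"GCR0": gcr0, "V": speed[t]}
    for i in range(1, n_windows + 1):
        # i-th 36-hour window before t: hours [t - 36*i, t - 36*(i - 1))
        features[f"V{i}"] = statistics.fmean(speed[t - 36 * i : t - 36 * (i - 1)])
    features["B1"] = statistics.fmean(field[t - 24 : t])  # 24-hour mean field
    # Assumed convention: percent decrease w.r.t. the value 9 days before.
    target = 100.0 * (gcr0 - flux[t]) / gcr0
    return features, target


# Toy series: constant flux with a 5% drop at hour 250, linearly rising speed.
flux = [100.0] * 300
flux[250] = 95.0
speed = [float(h) for h in range(300)]
field = [5.0] * 300
features, target = build_instance(250, flux, speed, field)
print(features)  # {'GCR0': 100.0, 'V': 250.0, 'V1': 231.5, 'V2': 195.5, 'V3': 159.5, 'B1': 5.0}
print(target)    # 5.0
```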

Ensemble model reproducing LPF GCR data

The ensemble model29,30 adopted to reproduce the GCR data observed on board LPF consists of 10 support-vector machines.31,32 These base regressors have been aggregated into a bagging regressor whose output predictions are the average of the base model outputs. An ensemble approach has been preferred since combining multiple learning algorithms generally yields more robust and accurate predictions than single machine learning models. Support-vector machines are supervised learning algorithms requiring three hyper-parameters to be tuned: the kernel function, the regularization parameter and the maximum error ε.

In this work, radial basis function kernels have been chosen as kernel functions, i.e., non-linear generalised kernels commonly exploited to linearly separate data that are not linearly separable. This is achieved by mapping the input features into a new space of higher dimensionality. The regularization parameter, controlling the tolerance for outliers, has been fixed to 1. Finally, the maximum error ε has been set to 0.1, so that predictions with error < 0.1 are not penalised during the training phase of the models.
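The roles of the three hyper-parameters and of the bagging aggregation can be made concrete with plain-Python versions of the RBF kernel, the ε-insensitive loss, the primal SVR objective and the averaging step. These are illustrative helpers with hypothetical names, not a trainable SVM; the kernel width `gamma` is not specified in the text and is an assumption. With scikit-learn (version 1.2 or later), a comparable ensemble could be configured as `BaggingRegressor(estimator=SVR(kernel="rbf", C=1.0, epsilon=0.1), n_estimators=10)`, although the text does not state which library was used.

```python
import math
import random
import statistics


def rbf_kernel(x, z, gamma=1.0):
    """RBF kernel exp(-gamma * ||x - z||^2); gamma is an assumed value."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))


def epsilon_insensitive_loss(y_true, y_pred, epsilon=0.1):
    """Errors smaller than epsilon cost nothing during SVR training."""
    return max(0.0, abs(y_true - y_pred) - epsilon)


def svr_objective(weight_norm_sq, residuals, C=1.0, epsilon=0.1):
    """Primal SVR objective: 0.5 * ||w||^2 + C * sum of epsilon-insensitive losses.

    A larger C penalises large residuals (outliers) more strongly.
    """
    return 0.5 * weight_norm_sq + C * sum(
        epsilon_insensitive_loss(r, 0.0, epsilon) for r in residuals
    )


def bootstrap_sample(data, rng):
    """Draw len(data) items with replacement; each bagged model trains on one."""
    return [rng.choice(data) for _ in data]


def bagging_predict(models, x):
    """Bagging regressor output: the average of the base model outputs."""
    return statistics.fmean(model(x) for model in models)


print(epsilon_insensitive_loss(1.0, 1.05))  # 0.0: inside the epsilon tube
print(bagging_predict([lambda x: 1.0, lambda x: 3.0], x=None))  # 2.0
```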

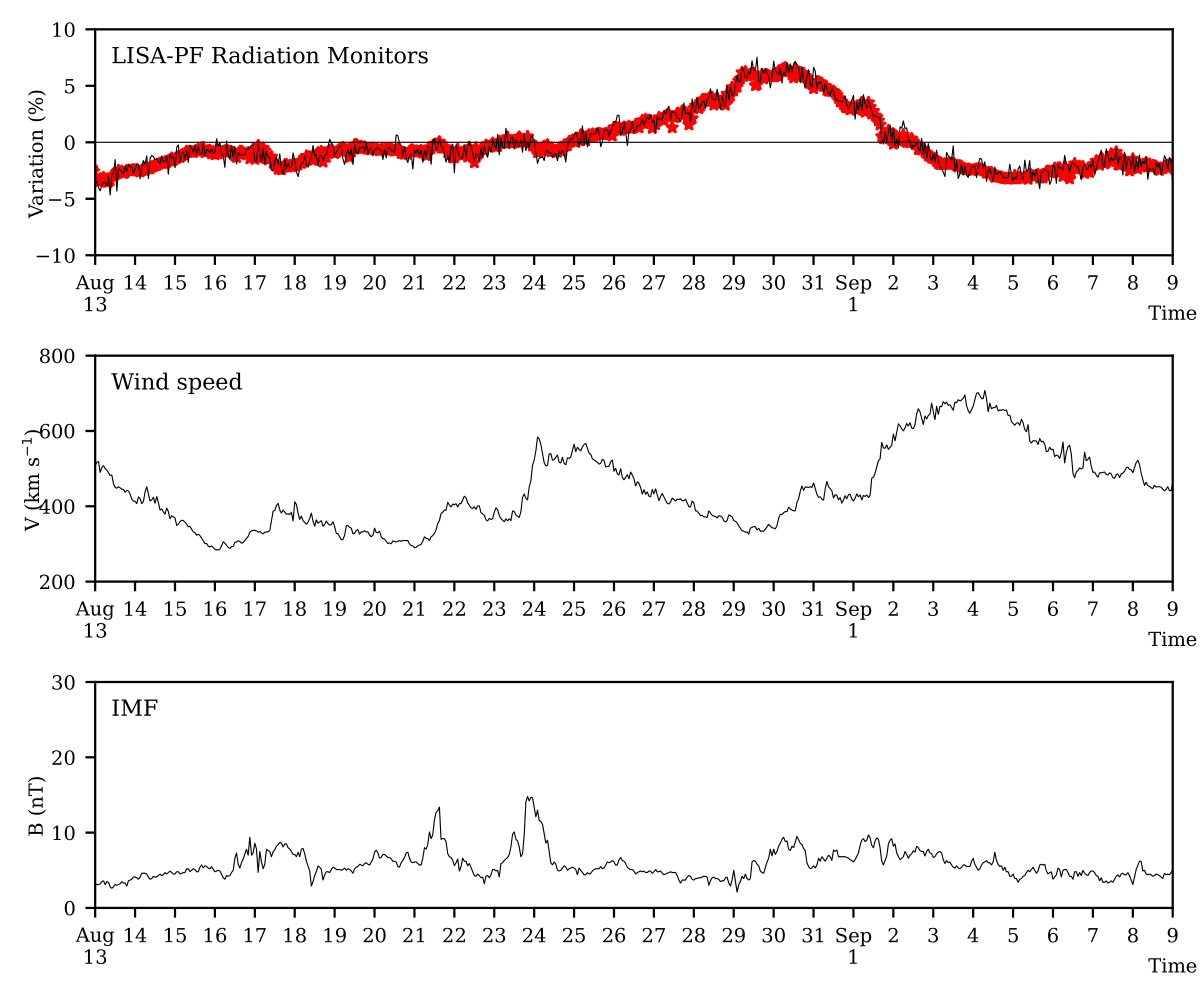

Since our goal is knowledge extraction from the ensemble model, and not the prediction of future data, all the available data have been used to train the model, without keeping apart a test set. This allowed us to obtain a predictor closely reproducing the GCR data gathered by LPF. Table 1 reports the mean absolute error of the ensemble model outputs, averaged per Bartels rotation, for the Bartels rotations during which LPF gathered data. As an example, model outputs for Bartels rotation 2497 are also shown in Figure 1.

| Bartels rotation | MAE (%) | Bartels rotation | MAE (%) |
|---|---|---|---|
| 2491 | 0.52±0.42 | 2500 | 0.48±0.38 |
| 2492 | 0.53±0.41 | 2501 | 0.49±0.37 |
| 2493 | 0.54±0.43 | 2502 | 0.47±0.35 |
| 2494 | 0.50±0.40 | 2503 | 0.46±0.36 |
| 2495 | 0.51±0.40 | 2504 | 0.45±0.34 |
| 2496 | 0.49±0.38 | 2505 | 0.43±0.31 |
| 2497 | 0.50±0.38 | 2506 | 0.51±0.42 |
| 2498 | 0.50±0.38 | 2507 | 0.49±0.39 |
| 2499 | 0.51±0.38 | 2508 | 0.46±0.37 |
| All | 0.49±0.38 | | |

Table 1 Mean absolute error (MAE) and standard deviation measured for the ensemble model outputs for each Bartels rotation during the LPF mission

Figure 1 LPF GCR flux data (top panel), solar wind speed (middle panel) and interplanetary magnetic field intensity (bottom panel) observed in L1 during Bartels rotation 2497. Ensemble model predictions (red stars in the top panel) are superimposed on the contemporaneous GCR data.
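The per-rotation statistics of Table 1 amount to grouping absolute errors by Bartels rotation. The sketch below assumes that each hourly instance is tagged with its rotation number and uses the population standard deviation, since the text does not say which estimator was adopted; the function name and the toy numbers are hypothetical.

```python
import statistics
from collections import defaultdict


def mae_by_rotation(rotations, targets, predictions):
    """Return {rotation: (mean, std) of absolute errors}, plus an "All" entry.

    Uses the population standard deviation (an assumed choice).
    """
    errors = defaultdict(list)
    for rotation, y, p in zip(rotations, targets, predictions):
        error = abs(y - p)
        errors[rotation].append(error)
        errors["All"].append(error)
    return {key: (statistics.fmean(v), statistics.pstdev(v)) for key, v in errors.items()}


# Toy example with two rotations and two hourly instances each.
stats = mae_by_rotation([2491, 2491, 2492, 2492],
                        [1.0, 2.0, 3.0, 4.0],
                        [1.5, 2.5, 3.0, 5.0])
for key, (mean, std) in stats.items():
    print(f"{key}: {mean:.2f}±{std:.2f}")
```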

Knowledge extraction

The trained ensemble model is used as underlying predictor for the CART and GridEx extractors. Knowledge extraction from the model is preferred over direct rule induction from the data set, since it enables the adoption of the underlying model as an oracle to augment the training set.
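Using the underlying model as an oracle means that new, synthetic inputs can be labelled without ground truth. The sketch below draws inputs uniformly within given per-feature bounds and labels them with a stand-in oracle; the uniform sampling, the bounds and all names are assumptions made for illustration.

```python
import random


def augment_with_oracle(samples, labels, oracle, bounds, n_new, rng=None):
    """Return copies of samples/labels extended with n_new oracle-labelled points.

    bounds is a list of (low, high) pairs, one per input feature.
    """
    rng = rng or random.Random()
    new_x = [tuple(rng.uniform(low, high) for low, high in bounds) for _ in range(n_new)]
    return samples + new_x, labels + [oracle(x) for x in new_x]


def oracle(x):
    """Hypothetical stand-in for the trained ensemble model."""
    return 0.01 * x[0] - 0.1 * x[1]


xs, ys = augment_with_oracle([(400.0, 5.0)], [oracle((400.0, 5.0))], oracle,
                             bounds=[(300.0, 800.0), (2.0, 20.0)], n_new=50,
                             rng=random.Random(42))
print(len(xs), len(ys))  # 51 51
```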

CART

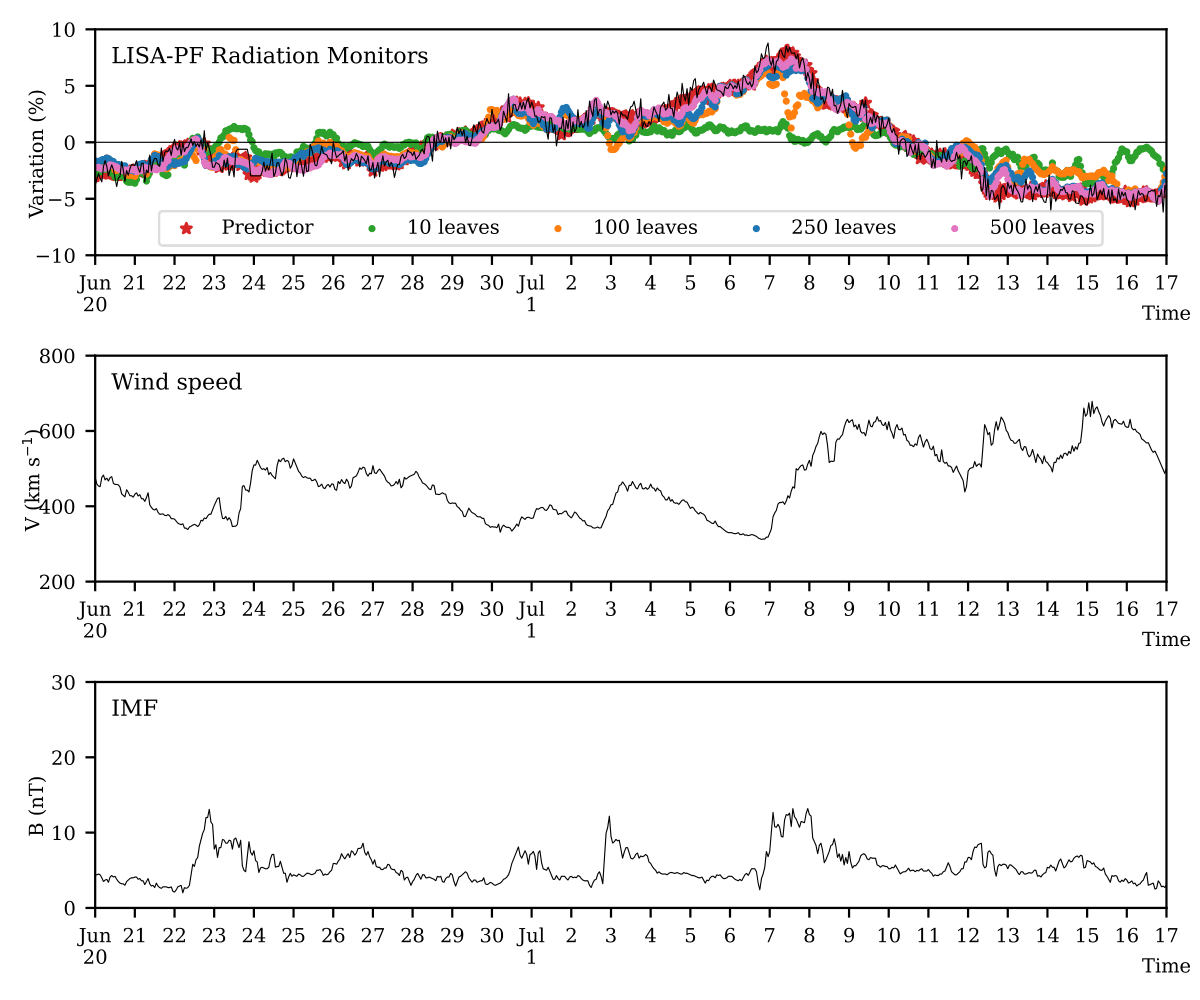

The predictive performance of CART strongly depends on the number of leaves of the induced decision tree. Indeed, each CART leaf provides a constant prediction, which introduces an undesired discretisation of the output. The effects of this discretisation are limited if the output variable to be predicted is discrete or if the tree has a large number of leaves. However, a large number of leaves, and thus of human-readable output rules, hinders the readability of the model.

We trained several instances of CART with different values of the maximum allowed number of leaves. The readability/fidelity trade-off is problematic in the present application, since a good fidelity (small predictive error with respect to the ensemble model predictions) can only be obtained with a very large number of leaves. In particular, a good agreement between LPF GCR data and CART predictions is reached only with more than 200 leaves. The readability of CART rules is also hindered by the number of antecedents per rule.
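Why so many leaves are needed can be seen from a toy calculation. Assume a single input uniformly distributed on [0, 1), a target equal to the input, and k equal-width leaves each predicting the midpoint of its interval. Within a leaf of width 1/k the average distance from the midpoint is a quarter of the width, so the MAE is 1/(4k): in this idealised setting, halving the error requires doubling the number of leaves. The snippet checks this numerically; it does not model the LPF data.

```python
def piecewise_constant_mae(k, n=20_000):
    """MAE of approximating y = x on [0, 1) with k equal-width constant leaves.

    Each leaf predicts the midpoint of its interval; the error is averaged
    over n evenly spaced evaluation points.
    """
    total = 0.0
    for j in range(n):
        x = (j + 0.5) / n
        leaf = min(int(x * k), k - 1)
        total += abs(x - (leaf + 0.5) / k)
    return total / n


for k in (10, 100, 250, 500):
    print(k, round(piecewise_constant_mae(k), 6), 1 / (4 * k))
```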

Whereas shallow leaves are translated into rules with few antecedents, deeper leaves may result in rules with too many conditions to still be considered human-readable. Since the number of antecedents in a rule equals the depth of the associated leaf, this drawback can be limited by setting a maximum depth for the tree induction. However, this in turn worsens the fidelity of the extracted rules.
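The correspondence between leaf depth and rule length can be shown by enumerating the root-to-leaf paths of a small tree. The tree is encoded as nested tuples (a hypothetical representation), and the thresholds below are invented for illustration only, not extracted from the LPF model.

```python
def tree_to_rules(node, path=()):
    """Enumerate root-to-leaf paths of a regression tree as rules.

    A node is either a leaf value or a tuple
    (variable, threshold, left_subtree, right_subtree), where the left
    branch holds variable <= threshold. Each rule is (antecedents, output);
    the number of antecedents equals the depth of the leaf.
    """
    if not isinstance(node, tuple):
        return [(list(path), node)]
    var, thr, left, right = node
    return (tree_to_rules(left, path + (f"{var} <= {thr}",))
            + tree_to_rules(right, path + (f"{var} > {thr}",)))


tree = ("GCR0", 2.0, 0.5,
        ("V", 500, 1.2,
         ("B1", 8, 2.0, 3.1)))
for antecedents, output in tree_to_rules(tree):
    print(" and ".join(antecedents), "->", output)
```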

Table 2 reports the predictive performance and the number of leaves of the tested CART instances. Predictive performance is expressed as mean absolute error with respect to both the data and the ensemble model predictions. A visual comparison of CART with 10, 100, 250 and 500 leaves is shown in Figure 2 for Bartels rotation 2495.

| Number of leaves | MAE w.r.t. data (%) | MAE w.r.t. model (%) |
|---|---|---|
| 10 | 1.70±1.32 | 1.57±1.24 |
| 25 | 1.52±1.20 | 1.39±1.11 |
| 50 | 1.37±1.07 | 1.23±0.98 |
| 100 | 1.18±0.94 | 1.03±0.85 |
| 150 | 1.07±0.85 | 0.91±0.75 |
| 250 | 0.91±0.74 | 0.74±0.62 |
| 500 | 0.73±0.58 | 0.51±0.43 |

Table 2 Number of leaves and mean absolute error measured for CART w.r.t. the data and the ensemble model predictions

Figure 2 CART output predictions for different values of the maximum number of leaves (10, 100, 250 and 500) (top panel) for Bartels rotation 2495. Panels are the same as in Figure 1.

Examples of CART rules are the following:

Variable GCR0 represents the GCR flux value 9 days before the considered instant. V is the solar wind speed at the same instant, while Vi is the average solar wind speed in the i-th time window before the considered instant. Wind speed is always expressed in km s⁻¹, and time windows are 36 hours long. Similarly, B1 represents the interplanetary magnetic field intensity averaged over the 24 hours before the considered instant. Magnetic field is expressed in nT. The first rule, with only 3 antecedents, is clearly more readable than the second, which has 7.

GridEx

To overcome the readability limitations of CART, the GridEx algorithm has also been applied to the ensemble predictor. Thanks to the adaptive splitting of GridEx, output rules can involve only the most relevant input features in their preconditions, resulting in better-controlled rule readability. GridEx requires the fine tuning of a set of parameters, namely the minimum number of samples per input space subregion, the depth of the partitioning, the error threshold and the splitting strategy.

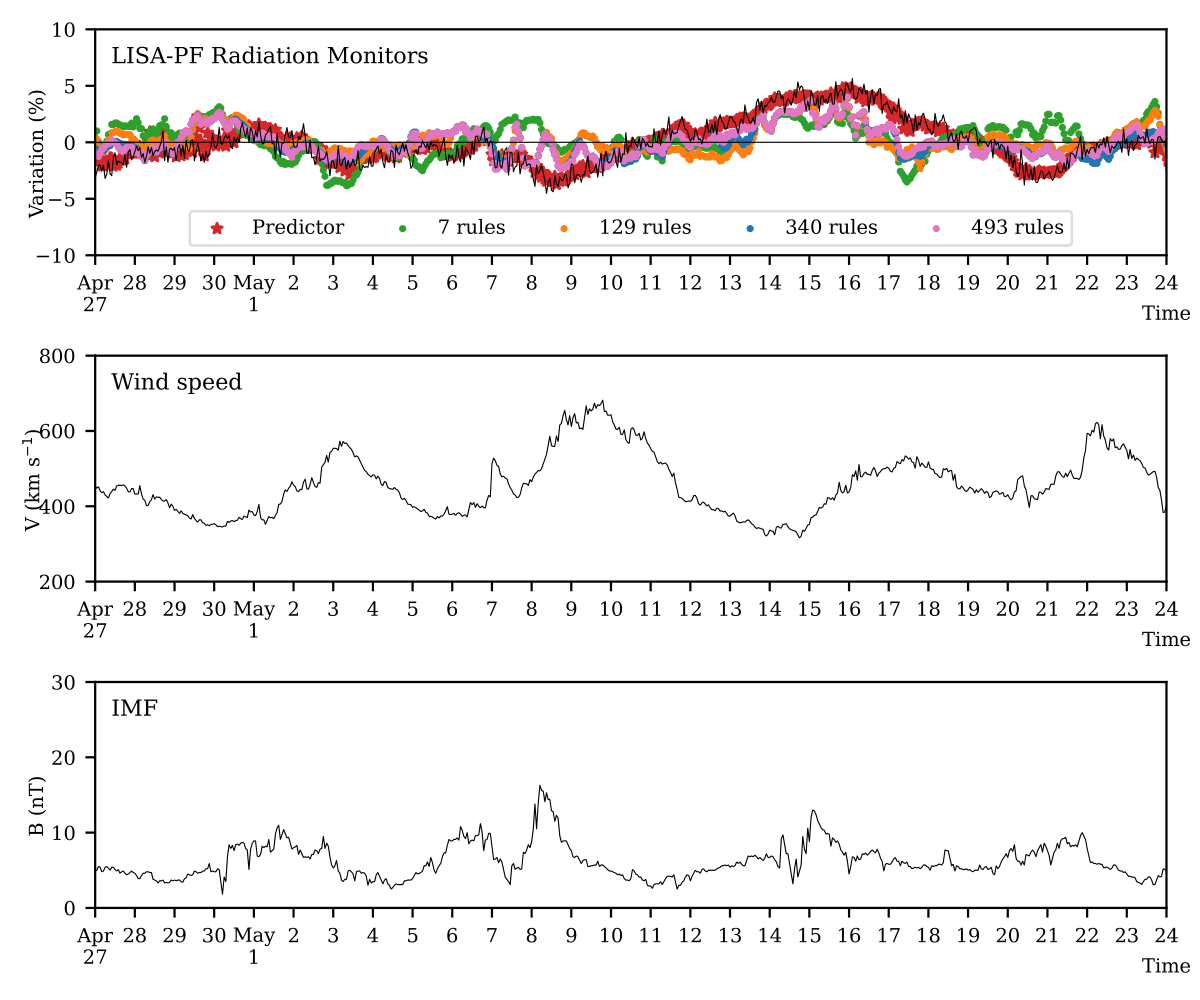

In all our experiments, the minimum number of samples has been fixed to 100. This means that if fewer than 100 training instances fall within an input space subregion, during the training phase of GridEx the data set is augmented by generating random input samples inside the region and predicting them with the underlying ensemble model used as an oracle. The depth of the partitioning controls how many times the input space subregions are split. Regions are split only if their predictive error is greater than the user-defined error threshold. Finally, adaptive strategies have been chosen for splitting. In particular, in all the experiments the less relevant input features have not been split, while the more relevant ones have been split into 2 partitions at each iteration. Features are considered more relevant if their relevance is greater than a relevance threshold, which varies across experiments. The parameter values adopted for the experiments are reported in Table 3. A visual comparison of different GridEx instances is shown in Figure 3 for Bartels rotation 2493.
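One iteration of the adaptive splitting strategy, together with the error-threshold test and the minimum-samples rule, can be sketched as follows. How GridEx computes feature relevance is not described here, so relevances are passed in as given numbers; all names and values are hypothetical. With three features above the relevance threshold, each split produces 2³ = 8 sub-cubes described by conditions on those three features only.

```python
from itertools import product


def adaptive_split(cube, relevances, relevance_threshold):
    """Halve features whose relevance exceeds the threshold; keep the others whole.

    cube is a list of (low, high) intervals, one per feature.
    Returns the list of resulting sub-cubes.
    """
    options = []
    for (low, high), relevance in zip(cube, relevances):
        if relevance > relevance_threshold:
            mid = (low + high) / 2
            options.append([(low, mid), (mid, high)])
        else:
            options.append([(low, high)])
    return [list(sub) for sub in product(*options)]


def should_split(region_error, error_threshold):
    """A region is split only if its predictive error exceeds the threshold."""
    return region_error > error_threshold


def samples_to_generate(n_inside, min_examples=100):
    """Synthetic oracle-labelled samples needed to reach min_examples in a region."""
    return max(0, min_examples - n_inside)


cube = [(0.0, 10.0), (300.0, 800.0), (2.0, 20.0), (0.0, 5.0)]
subcubes = adaptive_split(cube, relevances=[0.4, 0.02, 0.3, 0.2], relevance_threshold=0.1)
print(len(subcubes))            # 8
print(should_split(0.7, 0.6))   # True
print(samples_to_generate(40))  # 60
```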

| Depth | Error threshold | Relevance threshold | Number of rules | MAE w.r.t. data (%) | MAE w.r.t. model (%) |
|---|---|---|---|---|---|
| 1 | 0.6 | 0.1 | 7 | 2.05±1.54 | 1.95±1.47 |
| 2 | 0.6 | 0.1 | 24 | 1.86±1.40 | 1.74±1.33 |
| 3 | 0.6 | 0.1 | 49 | 1.74±1.32 | 1.62±1.24 |
| 3 | 0.6 | 0.05 | 129 | 1.70±1.30 | 1.58±1.23 |
| 3 | 0.55 | 0.05 | 219 | 1.58±1.23 | 1.45±1.15 |
| 3 | 0.5 | 0.05 | 340 | 1.45±1.16 | 1.31±1.08 |
| 3 | 0.45 | 0.05 | 414 | 1.40±1.14 | 1.26±1.06 |
| 3 | 0.4 | 0.05 | 493 | 1.36±1.12 | 1.21±1.04 |

Table 3 Parameters, number of extracted rules and mean absolute error measured for several instances of GridEx w.r.t. the data and the ensemble model predictions

Figure 3 GridEx output predictions for different values of its parameters, resulting in different numbers of output rules (top panel) for Bartels rotation 2493. Panels are the same as in Figure 1.

Examples of GridEx output rules are:

Variables follow the same convention as for the CART output rules. In this case all the extracted rules have the same readability, since the number of rule antecedents is fixed to 3 by tuning the splitting strategy parameters.

Comparison between CART and GridEx

By comparing the output models obtained via the CART and GridEx extractors, it is possible to notice that

Indeed, even when accepting larger numbers of output rules, GridEx is not able to provide predictions of sufficient quality. We believe that better results may be achieved by an algorithm able to approximate local predictions with non-constant outputs, for instance a linear combination of the input variables. At the moment, an extraction algorithm capable of doing so when applied to a complex underlying predictor (such as an ensemble model) is still missing in the literature. Figure 4 compares the mean absolute error measured for both CART and GridEx with respect to the data as well as to the ensemble model predictions. CART clearly performs better than GridEx, and the performance of the latter does not significantly improve as the number of extracted rules increases.
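The expected benefit of non-constant outputs can be illustrated on a single hypothetical region whose oracle predictions vary linearly with one input: a constant rule output (the region mean, as in GridEx) leaves a systematic error, while an ordinary least-squares line fitted to the same oracle predictions removes it. This is a toy illustration of the idea, not an existing extraction algorithm; the data and names are invented.

```python
import statistics


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b * x; returns (a, b)."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Oracle predictions inside one hypothetical input-space region.
xs = [i / 10 for i in range(11)]
ys = [1.0 + 2.0 * x for x in xs]

constant = statistics.fmean(ys)      # constant rule output
intercept, slope = fit_line(xs, ys)  # local linear rule output
constant_mae = statistics.fmean(abs(y - constant) for y in ys)
linear_mae = statistics.fmean(abs(y - (intercept + slope * x)) for x, y in zip(xs, ys))
print(f"constant output MAE: {constant_mae:.3f}")
print(f"linear output MAE:   {linear_mae:.3f}")
```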

In this work, SKE techniques have been applied to a machine learning ensemble model capable of reproducing the GCR data gathered by LPF with an error smaller than the nominal uncertainty of LPF GCR hourly binned data. Namely, CART and GridEx have been applied to the model in order to extract human-readable rules expressing the intensity of GCR flux variations. The adopted extractors provide good predictions only in limited regions of the input feature space. This is reasonable, since the peculiarities of the interplanetary structures and solar wind high-speed streams make a global approximation of the GCR flux variations impractical for all the possible values of solar wind speed and interplanetary magnetic field intensity.33 Thus, only local human-readable approximations are suitable substitutes for the opaque predictions of an ensemble model. In future work we plan to improve the extraction of knowledge from machine learning models reproducing the LPF GCR data by obtaining fewer rules with smaller predictive error, i.e., higher degrees of both readability and fidelity. This goal can be achieved by replacing the constant output values of the extracted rules with local linear combinations (or other kinds of functions) of the input variables.

None.

The authors declare that there is no conflict of interest.

©2022 Sabbatini, et al. This is an open access article distributed under the terms of the, which permits unrestricted use, distribution, and build upon your work non-commercially.