eISSN: 2574-8092

Short Communication Volume 5 Issue 3

Department of Architecture, University of New Mexico, United States of America

Correspondence: Alexander Webb, Department of Architecture, University of New Mexico, United States of America, Tel 5053124748

Received: May 15, 2019 | Published: May 24, 2019

Citation: Webb A. The heuristics of agency: on computation, big data & urban design. Int Rob Auto J. 2019;5(3):121-126. DOI: 10.15406/iratj.2019.05.00183

Big Data and urban design have found themselves in a contentious relationship. At the time of writing, more authors have published books writing against so-called Smart Cities than for them, despite the obvious opportunities for material and resource savings that data-driven design offers.

This article questions this tension, suggesting that alternative strategies of integration could prove more fruitful and demonstrate significant resource savings. Citing Stafford Beer, Brian Eno, Rodney Brooks and describing projects by Sarah Williams and NASA, this article argues for a heuristic strategy with increased robotic agency for urban scale artificial intelligence.

Keywords: urban design, Microsoft, Internet of Things, project’s, robotic agency, designers

Abbreviations: AI, artificial intelligence; NASA, National Aeronautics and Space Administration; IoT, Internet of Things; VSM, viable system model

Big Data and urban design have found themselves in a contentious relationship. At the time of writing, more authors have published books writing against so-called Smart Cities than for them, despite the obvious opportunities for material and resource savings that data-driven design offers. The seemingly sole advocates for an urban strategy of ubiquitous sensors are multinational corporations like Cisco and Microsoft, as they manufacture urban conditions oriented around ideas of efficiency and resource use while appearing to ignore privacy and social engagement. As the Internet of Things (IoT) is heralded as “The next big thing”, the emergence of IoT on an urban scale and its requisite Big Data collection has been met with controversy.1

Big Data collection is still in its nascent stages, and so far its inception has been fraught with challenges. In 2011, an under-observed default setting allowed users of the activity tracking service Fitbit to have their sexual behavior published without their knowledge.2,3 A study conducted in 2014 by security tech company Symantec showed that one in five wearable devices transferred user credentials (user names, passwords, email addresses) insecurely, and over half of the devices’ parent companies did not have privacy policies.4 In 2015, Ancestry.com’s DNA database was used to link the offspring of a participant in a Mormon genealogy project to a murder case in Idaho from twenty years earlier.5

One could argue that the issue around data collection is not the collection itself, but the loss of ownership of the information, the attachment of identity to the information, and the dissemination of that information. This assertion is supported when placed in the realm of urban data collection: few are disconcerted when a stop light senses they are waiting for it to turn green, as the information is neither intimate, identifiable nor profited upon. Yet if a Smart City like Masdar can identify its occupants’ location, health, finances and other personal information, there is concern. Much of the published criticism of Big Data focuses on the large datasets that can be used to determine the most intimate details of one’s life, combined with the potential for distribution of those details; it could be argued there is little controversy regarding the collection of data or the agency of data itself.

The argument here is that the decoupling of the process of data collection from the invasions of privacy that are associated with it is a critical step for planners, designers and architects. This article will assume that ethical data collection through technological, legislative or other means is a viable possibility. The further argument is that the potential shift in contemporary architectural production implied by data collection is too significant to abstain from the discussion until critically fundamental issues are resolved, particularly the issues outside the scope of architectural and urban design. While these issues are significant and should not be ignored by the design community, the integration of urban behavioral datasets into a design process has the potential to fundamentally reframe the operating mechanisms that govern architectural and urban design.

The potential of Big Data integration is not to simply augment established computational design processes, but to engender more directly actionable mechanisms of production. This article will primarily focus on the potential relationships between Big Data and urban design, with the assumption that similar strategies will prove productive for architectural design as well. The suggestion of this article is that, despite the issues presented by data collection, data may in fact serve to provide significant agency for the humans that produce it.

In 1995, WIRED editor Kevin Kelly interviewed the legendary electronic musician, producer and artist Brian Eno in an article entitled Gossip is Philosophy. Like much of Eno’s music, the interview was a composite- a series of phone, email and direct conversations, all filled with provocative statements, an appropriate documentation of one of the most provocatively enduring electronic musicians at the time and to date. Among Eno’s insightful musings was, “The problem with computers is that there is not enough Africa in them.”6 These words occur in the text immediately after a description of the intricate and social nature of African music, how sounds within a single composition are independent and untethered to the overall rhythms and tempos of the piece. In African music, as Eno described, the composition defines its own structure in real time.

By comparison, Western music, particularly orchestral Western music and by extension contemporary computer-generated Western music, is frequently locked into a structural arrangement before creation. The tempo of electronic music is typically determined before any sounds are made, and any adjustments to the tempo after sounds are programmed are either totalitarian and affect everything created before them, or segmented into other movements within the piece. This ultimately creates a rigid hierarchy within the music: the tempo reigns supreme and all other components follow suit.

The lack of dynamic flexibility is not the product of a technical limitation of generating music through a computer, but a result of the computer being driven by a cultural expectation of musical production. If Western music were founded upon ideas of interplay, collaboration, and social flexibility, as African music is, is it not likely that Western music software and hardware would engender these values? Compared to a computer driving a car, enabling a dynamic musical tempo would appear to be an easy task.

Parametric design as deployed by architecture and urban design has similar hierarchies, traditionally operating through a process of simulation, and then responding to it with a prescriptive model, which then is deployed in the context as a design. This abstracted simulation operates in a similar fashion to a music track’s rhythm: it can be updated and altered, but updates are singular or cumbersome. The simulation not only abstracts the context but it dates it, freezing it in stasis within the moment when it was created.

Many design disciplines can afford framing a design-related simulation around a singular consideration. An architectural design can address solar irradiation in isolation, for example, due to the fact that the sun’s rays are in flux but are roughly predictable for the lifespan of a building. The simulation of a metropolis is a completely different condition: a city is composed of numerous variables, many of which oscillate rapidly compared to the lifespan of a project. The constant shifting and repositioning of an urban environment resists static representation, and currently the interaction of people in urban space defies simulation. If one takes an ecological perspective on an urban condition, approaching the city as a set of relationships, the perspective suggests that the simulation of a city should be conducted in its entirety, lest one eliminate a critical relationship in the dynamic.

The problem of urban simulation is significant, and perhaps the paradoxical nature of the dilemma suggests an alternative approach. The work of cybernetician Stafford Beer demonstrates a capacity for deploying computation and data in a direct application, rather than through abstraction. Throughout his career Beer argued that cybernetics and computation should drive action, not create mathematical models.7 Those using computation at the time were not “solving problems, they are writing bloody Ph.D. theses about solving problems.”8 The direct application of computational methods would remain a central focus for all of Beer’s career, with his last book Beyond Dispute focusing on methods of solving political issues through geometrically complex social structures.

Beer’s Project Cybersyn was a manifestation of these attitudes: a deployment of a neural network synthetic to an existing condition. The project, which ran from 1971 to 1973, was an attempt to centralize the Chilean economy during the brief presidency of socialist Salvador Allende. Beer worked with a team of British and Chilean cyberneticians to network every factory in Chile to one central computer. The network was driven by 500 Telex machines, a kind of proto-fax machine that sent text messages to other Telex terminals.

Wanting to avoid the Soviet totalitarian model, the team also acknowledged the need for a centralized decision-making process.9 To address this, Beer developed an intricate series of checks and balances, largely based on his Viable System Model (VSM), that allowed facile adaptation while empowering factories and individuals on all levels of the system. Local issues were addressed locally; issues that could not be resolved locally were escalated through a five-tiered system, with the most significant presented in the Opsroom, a room where a select group of seven workers and bureaucrats debated and voted on issues of national scale.

While Gui Bonsiepe’s deliciously stylish design of the Opsroom has served as the icon for the project, the project’s connection to data through its structural organization is what is particularly relevant. Beer’s experience deploying computation in corporate management served to create an intricate system of checks and balances throughout the project that brought agency to individuals and the factories themselves, while empowering a collective expertise. As Andrew Pickering describes, Project Cybersyn’s architecture, the VSM, was essentially a work of both ontology and epistemology, an investigation of both being and becoming simultaneously.10

Project Cybersyn’s organizational structure is empowered by the extensive data collection system. Though crude by today’s standards, the Telex machines provided contextual and timely information, providing enough support to make actionable decisions. However, the project was cancelled before it became fully operational, and in deployment there were computational lags of as much as several days.11 Despite these lags, the decisions regarding a large, complex system could be deployed in (effectively) real time, integrating design decisions into the context itself directly instead of through a process of abstraction. This was demonstrated during a labor strike, when the system was able to help maintain production by identifying which roads were open and where resources were located.12

Here, Big Data provides Beer with an alternative to the issue of simulation. The data itself provides agency to the computational system without the abstraction of creating models. According to Pickering, Beer did not “trust” representational models and was “suspicious of articulated knowledge and representation.”13 “(For Beer) A world of exceedingly complex systems, for which any representation can only be provisional, performance is what we need to care about. The important thing is that the firm adapts to its ever-changing environment, not that we find the right representation of either entity.”14 This distinction, in which representation is a means to produce performance but not the only means, runs counter to contemporary architectural and urban design production.

Project Cybersyn can be seen as an implementation of Big Data as a mechanism for empowerment, rather than the oppressive, privacy-invading tools deployed by wearables manufacturers, multinational corporations and governments. By providing information directly to a network, efficiencies are discovered while empowerment is enabled. Though the contemporary use of Big Data can be alarming, the concern lies in how the data is utilized, collected and managed, not in the process of harvesting the data itself.

If the potential and unregulated abuses of Big Data can be viewed as separate, resolvable issues divorced from the potential agency of data itself, it is Big Data that can facilitate Eno’s desire to put more Africa into the computer. If African music is social, adaptable, and fluctuating, then these are conditions that are facilitated through direct participation and agency of a population. The participatory nature of Project Cybersyn is grounded within the collection and dissemination of data, implemented with an organization that provides agency to all participants.

It is not coincidental that reading Beer’s Brain of the Firm has been cited as a turning point in Brian Eno’s career.15 Eno and Beer were robustly connected, as Eno considered Beer a mentor and Beer considered Eno a protégé.16 When describing Beer’s work, Eno will discuss the organization and dynamism of the work most articulately and directly, but it is the incorporation of data that enables these capacities. Without the translation of corporate organizations and national economies into zeros and ones, there is no capacity for the computer to synthesize with physical space and enable the empowering dynamism that Beer sought and Eno appreciated. While Eno’s critique of the computer is that of an overly-rigid structural system, it is far more rigid when it is divorced from and unresponsive to real time information.

During the interview with Kelly, Eno described Beer’s use of heuristics. “...Beer had a great phrase that I lived by for years: Instead of trying to specify the system in full detail, specify it only somewhat. You then ride on the dynamics of the system in the direction you want to go. He was talking about heuristics, as opposed to algorithms. Algorithms are a precise set of instructions, such as take the first on the left, walk 121 yards, take the second on the right, da da da da. A heuristic, on the other hand, is a general and vague set of instructions. What I'm looking for is to make heuristic machines that you can ride on.”6

Part of the distinction between heuristics and algorithms can be understood as the level of precision of the instructions, but perhaps the more relevant distinction here is the level of precision of the contextual simulation. An algorithm, as methodology, relies upon a degree of certainty of what the system is, where the specific instructions are only beneficial within a specific context. A heuristic, relative to computer science, is a far faster method to produce an approximation of a solution. In computer science, it is frequently computational power that limits producing a complex solution directly; deploying a heuristic approach provides an approximation in far less time. Relative to urban design, a heuristic strategy provides two opportunities: it frees the process from requiring an absolute simulation and it prototypes solutions faster.
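The computer-science sense of the distinction can be illustrated with a small routing problem. The sketch below is only an illustration under stated assumptions: the stop coordinates and the function names (`exact_tour`, `nearest_neighbour_tour`) are hypothetical and do not come from any project discussed here. It compares an exhaustive search, which guarantees the shortest closed route but checks every ordering, with a nearest-neighbour rule, which is a common heuristic that returns an approximate route after far fewer steps.

```python
from itertools import permutations
from math import dist

# Hypothetical stops; the coordinates are illustrative only.
STOPS = [(0, 0), (2, 1), (5, 0), (1, 4), (4, 3), (3, 5), (0, 2)]


def tour_length(order, points):
    """Total length of a closed tour that visits the points in the given order."""
    return sum(
        dist(points[order[i]], points[order[(i + 1) % len(order)]])
        for i in range(len(order))
    )


def exact_tour(points):
    """Exhaustive search: tries every ordering, so the work grows factorially."""
    rest = range(1, len(points))
    best = min(
        ((0,) + p for p in permutations(rest)),
        key=lambda order: tour_length(order, points),
    )
    return list(best)


def nearest_neighbour_tour(points):
    """Heuristic: from the current stop, always move to the closest unvisited stop."""
    order = [0]
    unvisited = set(range(1, len(points)))
    while unvisited:
        here = points[order[-1]]
        nxt = min(unvisited, key=lambda i: dist(here, points[i]))
        order.append(nxt)
        unvisited.remove(nxt)
    return order


exact = exact_tour(STOPS)
approx = nearest_neighbour_tour(STOPS)
print("exact length:    ", round(tour_length(exact, STOPS), 2))
print("heuristic length:", round(tour_length(approx, STOPS), 2))
```

With seven stops the exhaustive search already evaluates 720 orderings, while the heuristic makes six local choices. The heuristic route is never shorter than the exact one, which is the trade the paragraph above describes: an approximation obtained in far less time.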

The capacity to prototype solutions rapidly is a critical advantage, as it allows cities to respond to users quickly and test various strategies within the context. Rather than developing a “perfect” prescriptive model, dependent on a “perfect” simulation, imperfection is accepted and embraced. Instead of optimization, strategies of variation are deployed- while maintaining a feedback loop of evaluation to determine success. Less planning, more heuristic permutations and attempts.

Roboticist Rodney Brooks’ paper Fast, Cheap, and Out of Control: A Robot Invasion of the Solar System, co-written with electrical engineer Anita Flynn, summarizes the advantages of less planning. Brooks and Flynn observe that complex missions and complex systems are inherently expensive, and that their failures have prompted an increase in planning and investment for future missions, leading towards inherently more expensive solutions with more expensive failures.17 The solution, suggest Brooks and Flynn, is not to increase planning but to decrease it, creating cheaper solutions that follow heuristics rather than instructions, and to iterate between prototypes quickly. The title of the paper would later inspire the title of the Errol Morris documentary, which featured Brooks as a central subject (Figure 1).

The difference between Brooks’ techniques and a traditional method is articulated by the difference in approach between NASA’s standard Mars Rover and their prototype Tumbleweed Rover. A typical Mars rover is 2000 pounds, and needs significant logistical and resource support to travel to Mars and then to explore specific areas of the planet. The Tumbleweed is an alternative approach, a large inflatable ball that could be deployed to Mars en masse. The lightweight structure of the Tumbleweed inflates upon the Martian surface, and is blown by the wind until it reaches a destination of interest. The ball deflates, records the data it is interested in, and re-inflates until it reaches another area of interest.18

By eliminating the precision of the rover, the Tumbleweed removes much of the cost and planning duration. Rather than following the precise algorithms instructed to the standard rover, a set of heuristics is embedded into the material choices of the Tumbleweed. Inflatable spheres are light, have mass, and enjoy motion. They do not need the complexity required to move mechanical wheels, arms and optic sensors. They respond to the virtue of their form, only resisting at times when necessary to record information.

When applied to urban design, the difference between a heuristic strategy and an algorithmic one is demonstrated through the comparison between traditional transit services and transit network provider Uber. A Western city might provide transit services through bus lines, a series of fixed routes that buses travel at designated times. The transit needs of the city are predetermined, potentially through modeling or simulation, and an algorithmic set of instructions is deployed. A specific bus line will travel from a specific location to another specific location at a specified time. There may be variations in schedules from weekdays to weekends, but changes to routes and times are the accumulation of months, if not years, of gathered intelligence, deployed in another rigid, fixed set of routes and schedules.

By comparison, networked transit services such as Uber are far more dynamic. The system responds to ridership need directly through networked communication: riders communicate to the system via a mobile app and the system connects them with an available and local driver. Uber’s driver organization follows a heuristic: loose, vague instructions that allow the capacity for leveraging driver intuition as well as centralized expertise. Uber provides their drivers with heatmaps of demand, maps of intelligence showing where riders and potential riders are and could be. The drivers determine whether to engage with the heatmaps, but the information has the potential to be leveraged.
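The direct response to ridership described above can be sketched in a few lines. This is a minimal illustration, not Uber’s dispatch logic, which is not described in this article: the `Driver` class, the `match_rider` function and the coordinates are all hypothetical, and the rule assumed here (pick the closest driver who is currently free) is simply the plainest reading of “an available and local driver.”

```python
from dataclasses import dataclass
from math import dist


@dataclass
class Driver:
    name: str
    position: tuple
    available: bool = True


def match_rider(rider_position, drivers):
    """Return the closest available driver and mark them busy, or None if nobody is free."""
    free = [d for d in drivers if d.available]
    if not free:
        return None
    chosen = min(free, key=lambda d: dist(d.position, rider_position))
    chosen.available = False
    return chosen


# Hypothetical fleet: driver "c" is the closest but is already occupied.
drivers = [
    Driver("a", (0.0, 0.0)),
    Driver("b", (5.0, 5.0)),
    Driver("c", (1.0, 1.0), available=False),
]

first = match_rider((1.0, 1.2), drivers)   # closest free driver
second = match_rider((1.0, 1.2), drivers)  # next closest, since the first is now busy
third = match_rider((1.0, 1.2), drivers)   # nobody left
print(first.name, second.name, third)
```

Nothing in the sketch relies on a timetable or a model of the city. Each request is answered from the current state of the fleet, which is the contrast with the fixed bus line drawn in the preceding paragraphs.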

The advantage of a heuristic approach is clear: traditional transit strategies have a significant delay in response to need. A networked system is inherently more dynamic; drivers not only directly respond to need, but the system has the capacity to become predictive and position drivers in locations before need is identified. When considering the material and resource use of these systems, an approach that more directly responds to user need has significant advantages.

If the comparison between a networked cab service and a generic bus system seems imbalanced or misplaced, consider the municipalities that are deploying networked strategies for their public transit. Helsinki has developed a similar app to Uber, Kutsuplus, which collectively pools passengers on shared minibuses. Boston, Kansas City and Washington D.C. have launched similar microtransit programs with Bridj, a minibus provider that operates on similar technology. San Francisco-based Chariot provides a similar service as well- running fixed routes, but updating the routes based on demand.

While many of these projects have been met with varied levels of success, they do demonstrate a capacity for direct engagement with ridership as a proof of concept. The abstracted simulation of a city is not necessary, the transit system responds directly to ridership. The tempo of a bus schedule is replaced with the fluctuating rhythms of rider demand and need. Efficiencies of deploying only the vehicles necessary are intuitively immense- energy use is no longer a constant, but fluctuates and oscillates relative to what is required (Figure 2).

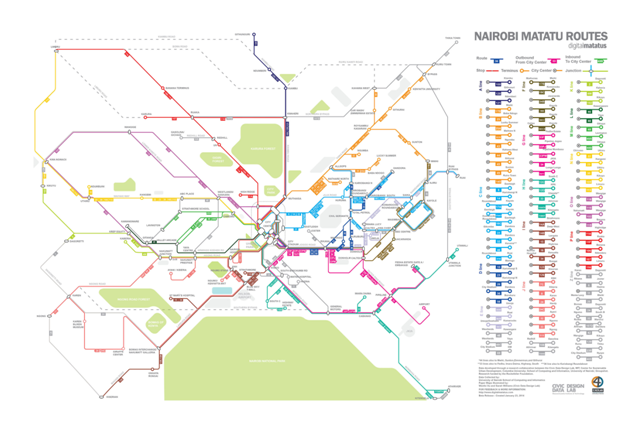

Figure 2 Digital Matatus, Civic Data Design Lab at MIT, Center for Sustainable Urban Development at Columbia University, C4D Lab at the University of Nairobi, and Groupshot.

MIT’s Civic Data Design Lab worked with the University of Nairobi to map their informal transit system, the matatus, to provide a real time map of the ever-shifting matatu routes by recording locations through cell phones.19 The transit aspects of urban design are the most easily addressed through heuristic strategies. Buses, minibuses, cars and other vehicles are already mobile, their behaviors most strongly dictated by abstracted models. Rather than predict possibilities if and when cities are dominated by robotic architecture, transportation design suggests an approach where the dynamism of motorized vehicles can be guided through computation (Figure 3).

When one considers that most spaces in most urban areas are empty for the majority of a 24 hour period, there certainly are opportunities for an increased efficiency of spatial use. The project Open Source City proposed a flexible spatial strategy, where spaces would accommodate different programs during the day.20 A proposal by University of New Mexico architecture student Stefan Johnson, these hyper-flex spaces would radically adapt to co-programming strategies. An early-morning yoga studio morphs into an office during the day and a gallery at night, a coffee shop adapts into a bar or lounge. Suggesting that architecture could behave similarly to an Arduino microcontroller or Raspberry Pi, the assertion here is that the built environment could become spatially and programmatically reconfigurable.

While Open Source City is born out of an interest in material, resource, and energy efficiency, the dynamic condition of the program has the potential to be spatially arresting. Program and its associated architecture and signage fluctuate diurnally, but through other cycles as well. Open Source City identifies that some program is better suited for the weekend, as well as potentially for certain seasons or for certain years; this capacity to vibrate between programs has the potential to create a dynamic and socially active space.

A critical component of this proposal is the participatory nature of the process. Users have the capacity to determine which program exists when, a capacity enabled by the collection of user data. In this scenario, the data gives users a voice, enabling a dynamic, amorphous urban condition, rather than a static hierarchical one. As Jane Jacobs wrote, “Cities have the capability for providing something for everybody, only because, and only when, they are created by everybody.”21

While the controversy of data harvesting persists, the use of data has the potential to provide significant agency to a populace. The development of data-responsive technologies and the success of Uber suggest that if the privacy issues of data collection are mitigated, the information collected can enable more responsive systems. Uber is a particularly useful example, as the corporation’s rampant growth has been unhindered by the scandal around their “God View” and “Creepy Stalker View” technology, software that allowed employees to view the specific locations of Uber’s users at any time.22 The relative lack of controversy around a clear violation of user privacy, combined with rampant user growth, should not be understood as an endorsement of flawed practices, but rather as a blend of ignorance, apathy, and a deployment of Rosalind Williams’ suggestion of the social behaviors of Large Technological Systems.

Williams describes systems of connection as mechanisms of voluntary control. “The systems of connection described in this essay are quite different in quality from a panopticon. They are primarily routes, not buildings- systems, not devices. However, these spatial arrangements too permit a high degree of social control. This is not the control of surveillance, and no one is forced into the systems. Instead, people “choose” to use the systems because they are the fastest, most efficient, most “rational” way of circulating ideas and goods.”23 Driving along a highway is faster and more efficient than driving off-road, therefore more people choose to relinquish a degree of freedom and only take the exits the highway system provides. For most motorists, the convenience of the system is worth the cost.

If the digital applications and sensors that collect Big Data can be thought of as mechanisms for circulating ideas, goods, and providing other efficiencies, then similar dynamics will persist. Products will compete with each other to provide more efficiency and convenience, and in doing so will use their advantages to collect information about their users. The relatively short history of digital technology has already shown that users will gladly give their information away in return for better products- Google’s Gmail is a prime example, with its over 1 billion users who are seemingly unconcerned with the platform’s lack of privacy.24 As Williams describes, ultimately users sacrifice a degree of autonomy for convenience and efficiency.

This dynamic is convenient for urban resource use: assuming that data collection standards will be developed and adhered to, this collection of data ultimately gives a population agency within the system. Data has the capacity to provide a voice to a populace, to ultimately enable the participatory design architects and urban designers have struggled to develop. Data enables a more directly actionable mode of engagement, and ultimately provides a more dynamic relationship between design and context.

If Beer’s approach towards computation as a design tool can be understood as deployed by Uber and similar providers, the dynamism of his heuristic approach is apparent. If we can see overlaps in building typology, the urban fabric becomes far more efficient: one building could potentially suffice for two or three. But beyond the efficiencies provided through this approach, ultimately this methodology allows the capacity for our constructions to respond, repurpose and reflect us in real time. Through iterative computation, the urban condition could become the rhythms and sounds that the populace interacts with, adapting the urban fabric to be as disparate and cohesive as a Senegalese quilt. Perhaps the opportunity is not just to put more Africa in the computer, but to put more Africa in the Smart City.

The author would like to thank Sanford Kwinter, Benjamin Bratton, Neil Leach, Keller Easterling, Brian Goldstein, Sarah Williams, John Quale, Amber Dodson, and Stefan Johnson.

The author declares there is no conflict of interest.

©2019 Webb. This is an open access article distributed under the terms of the, which permits unrestricted use, distribution, and build upon your work non-commercially.