Research Article Volume 2 Issue 4

Saliency detection via boundary prior and center prior

Linhua Jiang, Hui Zhong, Xiao Lin


School of Optical-Electrical and Computer Engineering, University of Shanghai for Science and Technology, China

Correspondence: Xiao Lin, College of Information, Mechanical and Electrical Engineering, Shanghai Normal University, China, Tel 8618801907006

Received: May 13, 2017 | Published: June 14, 2017

Citation: Jiang L, Zhong H, Lin X. Saliency detection via boundary prior and center prior. Int Rob Auto J. 2017;2(4):134-139. DOI: 10.15406/iratj.2017.02.00027


Abstract

In this paper, we present a salient object detection algorithm based on the boundary prior and the center prior. First, following the boundary prior, we construct a Boundary–based map from colour distinctions. Second, we use colour and location cues to obtain a Centre–based map under the center prior hypothesis. Then, we derive a Centre–Bayes map within a Bayesian framework. Finally, we integrate the Boundary–based map and the Centre–Bayes map into a final saliency map that combines the features and advantages of both. Compared with sixteen methods on seven datasets, our algorithm achieves favourable results in terms of precision, recall, F–measure and MAE.

Keywords: saliency map; boundary prior; center prior

Introduction

Image processing has recently received considerable attention as an important field of computer vision, and abundant effort has been devoted to finding effective ways to express image content by extracting useful information from images. In this context, saliency detection has emerged: a saliency model finds the prominent object in an image, which facilitates subsequent image processing operations. Saliency detection can be applied to many modern computer vision tasks, for instance, image classification,1 image compression,2 object localization3 and image segmentation.4 In terms of how information is processed, saliency detection algorithms can be divided into top–down and bottom–up methods. Top–down approaches5–8 are always related to a particular task or target: before finding the salient objects, they need to obtain the basic properties of the target. In this case, top–down algorithms can find the salient target in an image quickly and effectively, but they require supervised learning. By contrast, bottom–up approaches4,9–12 use low–level visual information without cues about a specific target, which makes them more widely applicable than top–down algorithms. One of the most widely used bottom–up approaches measures the contrast between a pixel or region and its neighbourhood.13 Because the size of the object is not known in advance, such centre–surround contrast methods often compute saliency in a multi–scale space, which may increase the computational complexity to some extent. In addition, some researchers adopt the boundary prior,12 assuming that the image boundary is more likely to be background.
Admittedly, the boundary has a high probability of being background, but not every region on the boundary is background. Once the salient object touches the image boundary, this assumption may lead to poor results.

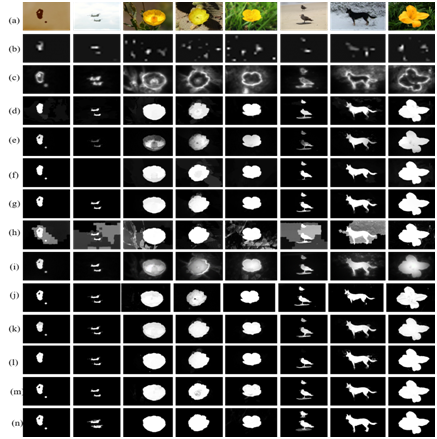

In this paper, we propose an effective algorithm to address these problems (Figure 1). First, we assume that only one side of the boundary is background and compute the contrast using the global colour distinction, which yields a primary saliency map called the Boundary–based map. This map finds the salient target comprehensively, but the contrast between foreground and background may not be significant. We therefore construct a Centre–based map based on the center prior assumption.14 The Centre–Bayes map is then obtained by feeding the Centre–based map into a Bayesian framework.15,16 Since the Centre–based map compensates well for the weakness of the Boundary–based map, we integrate the Boundary–based map and the Centre–Bayes map into the final saliency map.

Figure 1 Saliency maps generated by the proposed algorithm. Brighter pixels indicate higher saliency values.

(a) Input image, (b) Ground truth, (c) Boundary–based map, (d) Centre–Bayes map, (e) Saliency map

Related work

Recently, an increasing number of algorithms treat the image boundary as background. Wei et al.17 construct a feature matrix by contrasting with the boundary to obtain the final saliency map. Qin et al.13 compute the global colour distinction with respect to boundary seeds and then obtain the saliency map through the iteration of cellular automata. Yang et al.18 calculate the relevance between the boundary and every region of the image and regard this relevance as the saliency of each region. Zhu et al.12 use boundary connectivity as the criterion for evaluating saliency. Other efficient algorithms adopt the center prior assumption. Tong et al.19 build a weak saliency map based on the center prior and use it to label training samples for a strong model. Cheng et al.20 find the salient object with the help of soft image abstraction. Some researchers have also turned to the Bayesian framework. Rahtu et al.4 use Bayesian theory to optimize the saliency map, and Xie et al.15,16 compute the prior and likelihood probabilities of the map and obtain the posterior probability with the Bayes formula. Since these algorithms perform differently and each has unique advantages, we aim to combine their strengths. Consequently, we propose an algorithm based on the boundary prior and the center prior, which obtains satisfying results.

Proposed algorithm

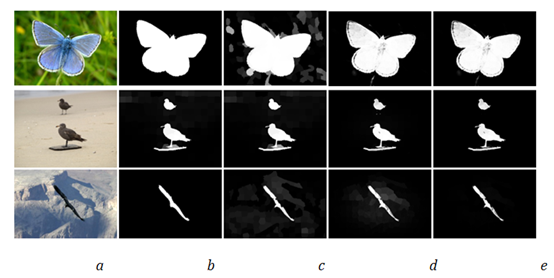

Figure 2 shows the detailed steps of our salient object detection algorithm. First, we consider only one side of the image as background at a time, and on this basis we construct four global colour distinction maps, which we integrate into the Boundary–based map. Using colour and position cues, we then build the Centre–based map under the center prior assumption. After that, we generate the Centre–Bayes map by feeding the Centre–based map into a Bayesian framework based on a convex hull. Finally, we construct the final saliency map by integrating the Boundary–based map and the Centre–Bayes map.

Figure 2 The process of our algorithm.

Image features

To capture the structural information of the image and preserve the boundaries of the salient object well, we use the simple linear iterative clustering (SLIC)21 algorithm to partition the original image into N small superpixels. SLIC also improves the efficiency of the whole algorithm. We then describe each superpixel by the mean colour features and mean coordinates of its pixels. Three features, RGB, LAB and Location,12 are used to represent each superpixel. Our experimental results show that the two colour features play complementary roles in salient object detection. In addition, the Location feature can compensate for the weakness of the colour features in some cases, which makes the algorithm more effective in complex scenes.
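The superpixel description above can be sketched as follows. This is a minimal NumPy illustration, not the authors' code: it assumes a SLIC label map with contiguous labels 0..N-1 is already available together with the image in RGB and CIELAB, and it scales the mean coordinates to [0, 1], which is an assumption since the scaling is not stated. The function name `superpixel_features` is hypothetical.

```python
import numpy as np


def superpixel_features(labels, rgb, lab):
    """Hypothetical helper: mean RGB, mean CIELAB and mean location per superpixel.

    labels: (H, W) int array with contiguous values 0..N-1 (e.g. a SLIC output).
    rgb, lab: (H, W, 3) float arrays holding the same image in RGB and CIELAB.
    """
    h, w = labels.shape
    flat = labels.ravel()
    n = flat.max() + 1
    counts = np.bincount(flat, minlength=n).astype(float)

    def mean_of(channel):
        # average of one per-pixel quantity inside each superpixel
        return np.bincount(flat, weights=channel.ravel(), minlength=n) / counts

    rows, cols = np.mgrid[0:h, 0:w]
    rgb_mean = np.stack([mean_of(rgb[..., c]) for c in range(3)], axis=1)
    lab_mean = np.stack([mean_of(lab[..., c]) for c in range(3)], axis=1)
    # (row, col) centroids scaled to [0, 1] so they do not depend on image size
    loc_mean = np.stack([mean_of(rows / max(h - 1, 1)),
                         mean_of(cols / max(w - 1, 1))], axis=1)
    return rgb_mean, lab_mean, loc_mean
```

With scikit-image, the inputs could come from `skimage.segmentation.slic(img, n_segments=200, start_label=0)` and `skimage.color.rgb2lab(img)`; the segment count there is an arbitrary choice, not a value taken from the paper.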

Boundary prior

Many algorithms assume that the image boundary is background,12,13,17,18 yet some salient objects may touch the image boundary, which degrades the quality of the saliency map to some extent. To minimize this influence, we consider one side of the image boundary as background at a time. On this basis, we construct four boundary prior maps from colour distinctions. The saliency value of superpixel i with respect to the t–th side of the image boundary is computed in CIELAB colour space as follows:

(1)

where the distance term is the Euclidean distance between superpixels i and j in CIELAB colour space, the count term (t = 1, 2, 3, 4) is the number of superpixels belonging to the t–th side of the image boundary, and a and b are balance weights. As shown in Figure 2, we obtain four boundary prior maps, each based on a different edge of the image. These maps are not perfect, but they reduce the impact of assuming that the entire image boundary is background. Moreover, each prior map has its own advantages; that is, each contains some regions of high accuracy. Consequently, we integrate the four boundary prior maps into the Boundary–based map:

(2)
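The exact forms of Eqs. (1) and (2) are not given in the text above, so the sketch below assumes one plausible form, modelled loosely on global colour distinction maps such as that of Qin et al.:13 the contribution of side t to superpixel i is the average of 1 / (exp(-d_ij / (2a²)) + b) over the superpixels j on that side, which grows with the CIELAB distance d_ij, and the four side maps are merged by an element-wise product. The defaults a = 0.2 and b = 10, the distance scaling and the function names are all hypothetical.

```python
import numpy as np


def side_sets(labels):
    """Superpixel indices touching the top, bottom, left and right image borders."""
    return [np.unique(labels[0, :]), np.unique(labels[-1, :]),
            np.unique(labels[:, 0]), np.unique(labels[:, -1])]


def boundary_based_map(lab_mean, labels, a=0.2, b=10.0):
    # ASSUMED Eq. (1): s_t(i) = mean over j in side t of 1 / (exp(-d_ij / (2 a^2)) + b)
    # ASSUMED Eq. (2): element-wise product of the four normalized side maps
    dist = np.linalg.norm(lab_mean[:, None, :] - lab_mean[None, :, :], axis=2)
    dist = dist / max(dist.max(), 1e-12)  # scale CIELAB distances to [0, 1]
    side_maps = []
    for side in side_sets(labels):
        s = (1.0 / (np.exp(-dist[:, side] / (2 * a ** 2)) + b)).mean(axis=1)
        s = (s - s.min()) / max(s.max() - s.min(), 1e-12)  # min-max to [0, 1]
        side_maps.append(s)
    return np.prod(side_maps, axis=0), side_maps
```

Multiplying the side maps means a superpixel must contrast with all four borders to stay salient, so an object touching one border is suppressed only in that side's map before the product; a sum would be a softer alternative.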

Center prior

The center prior also plays an important role in recent detection algorithms.19,20 Based on this consideration, we first build a location center prior model, which makes the image center more salient and suppresses saliency at the boundary. Then we construct three correlation matrices from the different colour features and the Location feature for all adjacent superpixel pairs (i, j), and compute their relevance12 as the Euclidean distance between superpixels:

(3)

where N is the number of superpixels. Empirically, a superpixel is defined to have no relevance to itself, so its self-relevance is set to zero. We then generate the Centre–based map by combining the three correlation matrices with the location center prior model:

(4)

Here the three feature spaces are RGB, CIE Lab and Location, and the remaining count is the number of boundary superpixels. Note that the terms of Eq. (4) must be normalized into [0, 1]. As expected, the Centre–based map gives higher weights to superpixels closer to the image center. Although most objects are located near the center of an image, this assumption makes the prior map sensitive to the object distribution. To reduce this effect, we feed the Centre–based map into a Bayesian framework, in which the likelihood probability is calculated with the help of a convex hull.15,16 On the one hand, the framework compensates for the shortcomings of the center prior model; on the other hand, it further strengthens the saliency of the object. The result is a Centre–Bayes map that differs markedly from the Boundary–based map.
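The Centre–based and Centre–Bayes steps can be illustrated with the sketch below. Several parts are assumptions rather than the authors' formulas: the location prior is a Gaussian around the image centre with a hypothetical width `sigma = 0.25`; Eq. (4) is read as the centre prior multiplied by the sum, over the feature spaces, of each superpixel's mean distance to the boundary superpixels (computed over all pairs rather than only adjacent ones, for brevity); and the likelihoods are per-channel colour histograms inside and outside a convex hull that is assumed to be computed elsewhere and passed in as the boolean mask `in_hull`. The posterior applies Bayes' rule: prior × p(v | salient) / (prior × p(v | salient) + (1 − prior) × p(v | background)).

```python
import numpy as np


def normalize(x):
    # min-max scaling to [0, 1]; a constant input maps to 0
    return (x - x.min()) / max(x.max() - x.min(), 1e-12)


def center_prior(loc_mean, sigma=0.25):
    # Gaussian fall-off from the image centre (0.5, 0.5); loc_mean holds
    # per-superpixel mean coordinates scaled to [0, 1]. sigma is assumed.
    d2 = np.sum((loc_mean - 0.5) ** 2, axis=1)
    return np.exp(-d2 / (2 * sigma ** 2))


def centre_based_map(features, boundary_idx, loc_mean):
    # features: list of (N, d) arrays, e.g. [rgb_mean, lab_mean, loc_mean].
    # HYPOTHETICAL reading of Eq. (4): centre prior x summed (normalized)
    # mean distance to the boundary superpixels in each feature space.
    total = np.zeros(len(loc_mean))
    for f in features:
        w = np.linalg.norm(f[:, None, :] - f[None, :, :], axis=2)
        np.fill_diagonal(w, 0.0)  # a superpixel has no relevance to itself
        total += normalize(w[:, boundary_idx].mean(axis=1))
    return normalize(center_prior(loc_mean) * total)


def bayes_posterior(prior, lab_mean, in_hull, bins=8):
    # prior: (N,) map in [0, 1]; in_hull: (N,) bool mask of superpixels
    # inside the convex hull (assumed to be computed elsewhere).
    lo, hi = lab_mean.min(axis=0), lab_mean.max(axis=0)
    scaled = (lab_mean - lo) / np.maximum(hi - lo, 1e-12)
    q = np.floor(scaled * (bins - 1e-9)).astype(int)  # bin index 0..bins-1
    like_in = np.ones(len(prior))
    like_out = np.ones(len(prior))
    for c in range(lab_mean.shape[1]):  # colour channels treated as independent
        h_in = np.bincount(q[in_hull, c], minlength=bins) / max(in_hull.sum(), 1)
        h_out = np.bincount(q[~in_hull, c], minlength=bins) / max((~in_hull).sum(), 1)
        like_in *= h_in[q[:, c]]
        like_out *= h_out[q[:, c]]
    num = prior * like_in
    return num / np.maximum(num + (1.0 - prior) * like_out, 1e-12)
```

A typical call chain would be `bayes_posterior(centre_based_map(...), lab_mean, in_hull)`, where the bin count of 8 per channel is an arbitrary choice.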

Integration

At this point we have two rough saliency maps with complementary properties. The Boundary–based map not only reveals the global colour distinction well but also preserves the whole salient object. However, it may have low contrast between foreground and background, which reduces the accuracy of the algorithm. By contrast, the Centre–Bayes map highlights salient regions well, but it is sometimes affected by the object distribution and cannot emphasize the whole object. We therefore exploit the advantages of each map and integrate them into the final saliency map:

(5)

where N is the number of superpixels; the equation combines the Boundary–based map and the Centre–Bayes map into the final saliency map of our algorithm.
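Eq. (5) is not spelled out in the text, so the sketch below uses a hypothetical fusion: a convex combination of the two min–max normalized superpixel maps with an assumed weight of 0.5, renormalized to [0, 1], followed by projecting the superpixel values back to pixels through the SLIC label map. The function names and the weight are illustrative only.

```python
import numpy as np


def normalize(x):
    # min-max scaling to [0, 1]; a constant input maps to 0
    return (x - x.min()) / max(x.max() - x.min(), 1e-12)


def fuse_maps(s_boundary, s_centre_bayes, weight=0.5):
    # HYPOTHETICAL fusion: weighted sum of the two normalized superpixel maps
    fused = weight * normalize(s_boundary) + (1.0 - weight) * normalize(s_centre_bayes)
    return normalize(fused)


def to_pixels(superpixel_values, labels):
    # broadcast one value per superpixel back to an (H, W) saliency image
    return superpixel_values[labels]
```

A product, `normalize(s_boundary * s_centre_bayes)`, would be a stricter alternative that keeps only regions on which both maps agree; which choice behaves better is an empirical question.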

Experimental results

We evaluate the algorithms on seven datasets widely used in the saliency detection field: ASD,22 MSRA–5000,23 THUS,24 ECSSD,11 THUR,25 PASCAL26 and SED2.27 ASD contains 1000 images, most of which are relatively simple. MSRA5000 contains 5000 images with accurate masks. THUS contains 10000 images labelled with pixel–wise ground truth masks. ECSSD contains 1000 semantically meaningful but structurally complex images. THUR contains 6232 images with complex backgrounds. PASCAL contains 1500 images with complex scenes. SED2 contains 100 images, each with two targets. We compare our algorithm with sixteen advanced or classic algorithms: IT98,28 FT09,22 CA10,29 RC11,30 SVO11,31 XL11,15 GS12,17 SF12,32 XL13,16 PCA13,33 HS13,11 LMLC13,20 GC13,17 GMR13,18 wCO14,12 LPS15.34

Evaluation metrics

We use standard precision–recall (P–R) curves to evaluate all compared algorithms. The P–R curves are drawn by threshold segmentation: each saliency map is first normalized to the range [0, 255] and then thresholded at every 5 grey levels, which gives a series of binary maps. Comparing each binary map with the ground truth yields its precision and recall, from which the P–R curves are drawn. An effective algorithm needs both high precision and high recall, so we use the F–measure as a comprehensive evaluation criterion:

$$F_\beta = \frac{(1+\beta^2)\,\mathrm{Precision}\times\mathrm{Recall}}{\beta^2\,\mathrm{Precision}+\mathrm{Recall}} \qquad (6)$$

where, following previous work,13 we set $\beta^2$ to 0.3 to weight precision more heavily than recall. In addition to the P–R curves, we compute the mean absolute error (MAE),22 which measures the average difference between the saliency map $S$ and the ground truth $GT$:

$$\mathrm{MAE} = \frac{1}{W\times H}\sum_{x=1}^{W}\sum_{y=1}^{H}\left|S(x,y)-GT(x,y)\right| \qquad (7)$$

where $W$ and $H$ are the width and height of the image, and both $S$ and $GT$ are normalized to [0, 1]. This measure is mainly used in image segmentation and reflects the similarity between the saliency map and the ground truth.
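The evaluation protocol above can be sketched as follows: the saliency map is rescaled to [0, 255], binarized at thresholds 0, 5, 10, ..., 255 and compared with the ground truth; the F–measure uses β² = 0.3, and the MAE compares the [0, 1]-normalized map with the binary ground truth. Counting a pixel as salient when its value is greater than or equal to the threshold is an assumption, as are the function names.

```python
import numpy as np


def _minmax(x):
    # min-max scaling to [0, 1]; a constant map becomes all zeros
    x = x.astype(float)
    return (x - x.min()) / max(x.max() - x.min(), 1e-12)


def pr_curve(saliency, gt, step=5):
    # rescale to [0, 255], binarize at 0, step, ..., 255 and compare with GT;
    # a pixel counts as salient when its value is >= the threshold (assumption)
    s = 255.0 * _minmax(saliency)
    gt = gt > 0
    precisions, recalls = [], []
    for t in range(0, 256, step):
        pred = s >= t
        tp = np.logical_and(pred, gt).sum()
        precisions.append(tp / max(pred.sum(), 1))
        recalls.append(tp / max(gt.sum(), 1))
    return np.array(precisions), np.array(recalls)


def f_measure(precision, recall, beta2=0.3):
    # Eq. (6) with beta^2 = 0.3 by default; works on scalars or arrays
    return (1 + beta2) * precision * recall / np.maximum(beta2 * precision + recall, 1e-12)


def mae(saliency, gt):
    # Eq. (7): mean absolute difference between the [0, 1] map and binary GT
    return np.abs(_minmax(saliency) - (gt > 0).astype(float)).mean()
```

For example, `f_measure(*pr_curve(sal, gt))` yields one F–measure value per threshold.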

Validation of the proposed algorithm

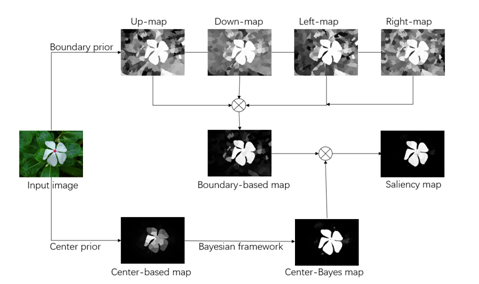

To show the effectiveness of our method, we test our algorithm on seven datasets. The P–R curves and F–measure histograms in Figure 3 and the MAE values in Table 1 show that (1) our method generates favourable results, and (2) our method adapts to different image backgrounds. Figure 4 compares the saliency maps on different datasets.

Figure 4 Comparison of saliency maps on different datasets.

(a) Input images, (b) IT, (c) CA, (d) RC, (e) SF, (f) HS, (g) GMR, (h) LMLC, (i) PCA, (j) GC, (k) wCO, (l) LPS, (m) Ours, (n) GT

| Dataset | Ours | LPS | wCO | GMR | GC | LMLC | HS | PCA | SF | GS | SVO | RC | CA | FT | IT |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| ASD | 0.09 | 0.07 | 0.064 | 0.074 | 0.101 | 0.135 | 0.114 | 0.156 | 0.13 | 0.13 | 0.337 | 0.238 | 0.233 | 0.205 | 0.194 |
| MSRA5000 | 0.133 | 0.127 | 0.11 | 0.129 | 0.101 | 0.135 | 0.162 | 0.189 | 0.17 | 0.144 | 0.358 | 0.269 | 0.25 | 0.24 | 0.254 |
| PASCAL | 0.168 | 0.19 | 0.154 | 0.184 | - | - | 0.223 | 0.209 | 0.216 | 0.181 | 0.368 | 0.295 | 0.264 | 0.261 | 0.26 |
| ECSSD | 0.243 | 0.237 | 0.225 | 0.236 | 0.256 | 0.296 | 0.269 | 0.29 | 0.274 | 0.255 | 0.42 | 0.235 | 0.343 | 0.327 | 0.313 |
| THUR | 0.132 | 0.15 | 0.15 | 0.18 | 0.192 | 0.246 | 0.218 | 0.198 | 0.184 | - | 0.382 | 0.168 | 0.248 | 0.241 | 0.199 |
| SED2 | 0.165 | 0.14 | 0.127 | 0.163 | 0.185 | 0.269 | 0.157 | 0.2 | 0.18 | - | 0.348 | 0.148 | 0.23 | 0.206 | 0.245 |
| THUS | 0.136 | 0.124 | 0.108 | 0.126 | 0.139 | - | 0.149 | 0.185 | 0.175 | 0.139 | 0.331 | 0.137 | 0.237 | 0.234 | 0.213 |

Table 1 The MAEs of different methods

Conclusion

In this paper, we proposed a bottom–up method that generates a saliency map based on the boundary prior and the center prior. Following the boundary prior, we consider the differences and similarities between the boundary and the background to build the Boundary–based map. Meanwhile, following the center prior, we construct a Centre–based map with high precision and feed it into a Bayesian framework to obtain a further improved map, the Centre–Bayes map. By integrating the two maps into the final saliency map, our algorithm inherits their advantages and compensates for their weaknesses, achieving higher precision and recall. Experiments show that our algorithm achieves favourable results on different datasets.

Acknowledgments

The research was supported by the National Science Foundation of China (No. 61502220, No. U1304616 and No. 61472245).

Conflict of interest

The authors declare that there are no conflicts of interest.

References

- Siagian C, Itti L. Rapid Biologically–Inspired Scene Classification Using Features Shared with Visual Attention. IEEE Trans Pattern Anal Mach Intell. 2007;29(2):300–312.

- Itti L. Automatic foveation for video compression using a neurobiological model of visual attention. IEEE Trans Image Process. 2004;13(10):1304–1318.

- Gao D, Han S, Vasconcelos N. Discriminant saliency, the detection of suspicious coincidences, and applications to visual recognition. IEEE Transactions on Pattern Analysis & Machine Intelligence. 2009;31(6):989–1005.

- Rahtu E, Kannala J, Salo M, Heikkilä J. Segmenting Salient Objects from Images and Videos. European Conference on Computer Vision. 2010. p. 366–379.

- Alexe B, Deselaers T, Ferrari V. What is an object? IEEE Conference on Computer Vision and Pattern Recognition. 2010. p. 73–80.

- Jiang H, Wang J, Yuan Z, et al. Salient Object Detection: A Discriminative Regional Feature Integration Approach. IEEE Conference on Computer Vision and Pattern Recognition. 2013. p. 2083–2090.

- Ng AY, Jordan MI, Weiss Y. On Spectral Clustering: Analysis and an algorithm. Proceedings of Advances in Neural Information Processing Systems. 2002. p. 849–856.

- Yang J. Top–down visual saliency via joint CRF and dictionary learning. IEEE Trans Pattern Anal Mach Intell. 2017;39(3):576–588.

- Hou X, Zhang L. Saliency Detection: A Spectral Residual Approach. IEEE Conference on Computer Vision and Pattern Recognition. 2007. p. 1–8.

- Klein DA, Frintrop S. Center–surround divergence of feature statistics for salient object detection. IEEE International Conference on Computer Vision. 2011. p. 2214–2219.

- Yan Q, Xu L, Shi J, et al. Hierarchical saliency detection. IEEE Conference on Computer Vision and Pattern Recognition. 2013. p. 1155–1162.

- Zhu W, Liang S, Wei Y, et al. Saliency Optimization from Robust Background Detection. IEEE Conference on Computer Vision and Pattern Recognition. 2014. p. 2814–2821.

- Qin Y, Lu H, Xu Y, et al. Saliency Detection via Cellular Automata. IEEE Conference on Computer Vision and Pattern Recognition. 2015. p. 110–119.

- Liu T, Yuan Z, Sun J, et al. Learning to detect a salient object. IEEE Transactions on Pattern Analysis & Machine Intelligence. 2011;33(2):353–367.

- Xie Y, Lu H. Visual Saliency Detection Based on Bayesian Model. IEEE International Conference on Image Processing. 2011. p. 645–648.

- Xie Y, Lu H, Yang MH. Bayesian saliency via low and mid level cues. IEEE Trans Image Process. 2013;22(5):1689–1698.

- Wei Y, Wen F, Zhu W, et al. Geodesic Saliency Using Background Priors. European Conference on Computer Vision. 2012. p. 29–42.

- Yang C, Zhang L, Lu H, et al. Saliency Detection via Graph–Based Manifold Ranking. IEEE Conference on Computer Vision and Pattern Recognition. 2013. p. 3166–3173.

- Tong N, Lu H, Xiang R, et al. Salient object detection via bootstrap learning. IEEE Conference on Computer Vision and Pattern Recognition. 2015. p. 1884–1892.

- Cheng MM, Warrell J, Lin WY, et al. Efficient Salient Region Detection with Soft Image Abstraction. IEEE ICCV. 2013. p. 1529–1536.

- Achanta R, Shaji A, Smith K, et al. SLIC superpixels. EPFL Technical Report. 2010. p. 1–15.

- Achanta R, Hemami S, Estrada F, et al. Frequency–tuned salient region detection. IEEE Conference on Computer Vision and Pattern Recognition. 2009. p. 1597–1604.

- Liu T, Yuan Z, Sun J, et al. Learning to detect a salient object. IEEE Transactions on Pattern Analysis & Machine Intelligence. 2011;33(2):353–367.

- Cheng MM, Mitra NJ, Huang X. Salient Object Detection and Segmentation. IEEE Transactions on Pattern Analysis & Machine Intelligence. 2011;37(3):1–14.

- Cheng MM, Mitra NJ, Huang X, et al. Salient Shape: group saliency in image collections. Visual Computer International Journal of Computer Graphics. 2014;30(4):443–453.

- Everingham M, Gool LV, Williams CKI, et al. The Pascal, Visual Object Classes (VOC) Challenge. International Journal of Computer Vision. 2010;88(2):303–338.

- Alpert S, Galun M, Basri R, et al. Image Segmentation by Probabilistic Bottom–Up Aggregation and Cue Integration. IEEE Transactions on Pattern Analysis & Machine Intelligence. 2007;34(2):1–8.

- Itti L, Koch C, Niebur E. A Model of Saliency–Based Visual Attention for Rapid Scene Analysis. IEEE Transactions on Pattern Analysis & Machine Intelligence. 1998;20(11):1254–1259.

- Goferman S, Zelnik–Manor L, Tal A. Context–aware saliency detection. IEEE Trans Pattern Anal Mach Intell. 2012;34(10):1915–1926.

- Cheng M, Zhang G, Mitra NJ, et al. Global contrast based salient region detection. IEEE Conference. 2011;37(3):569–582.

- Chang KY, Liu TL, Chen HT, et al. Fusing generic objectness and visual saliency for salient object detection. IEEE International Conference on Computer Vision. 2011. p. 914–921.

- Perazzi F, Krähenbühl P, Pritch Y, Hornung A. Saliency filters: Contrast based filtering for salient region detection. IEEE Conference on Computer Vision and Pattern Recognition. 2012. p. 733–740.

- Margolin R, Tal A, Zelnik–Manor L. What makes a patch distinct? Computer Vision and Pattern Recognition (CVPR). 2013. p. 1139–1146.

- Li H, Lu H, Lin Z, et al. Inner and inter label propagation: salient object detection in the wild. IEEE Transactions on Image Processing. 2015. p. 3176–3186.

©2017 Jiang, et al. This is an open access article which permits unrestricted use, distribution, and building upon the work non-commercially.