eISSN: 2574-8092

Research Article Volume 4 Issue 2

1Engineering Research Center of Fujian Provincial Academics for Industrially Intelligent Technology and System, Engineering College, Hua Qiao University, China

2Department of Information Management, National Taichung University of Technology, Taiwan

Correspondence: Li-Hong Juang, Engineering Research Center of Fujian Provincial Academics for Industrially Intelligent Technology and System, Engineering College, Hua Qiao University, China, Tel 86-15875474613

Received: April 01, 2018 | Published: April 17, 2018

Citation: Juang L, Wu M, Lin C. The 3D school guide system with affective computing of posture emotion expression. Int Rob Auto J. 2018;4(2):138-141. DOI: 10.15406/iratj.2018.04.00110

In recent years, a growing number of studies have treated human emotions and behaviors as key elements in making human-computer interaction systems more user-friendly, and have tried to introduce affective computing into a variety of application systems. Human emotions are expressed not only through language and facial expression; body actions also reveal a person's emotional state. The purpose of this study is therefore to combine the identification of emotion expressed through body action and posture with a 3D guide system. The system judges the user's posture to identify the emotion being expressed, so that it can give appropriate feedback and help the user interact with the guide system in a 3D campus setting.

Keywords: human-computer interaction, affective computing, posture, emotion expression, guide system

Human emotional expression is not limited to spoken language; facial expression also reveals a person's underlying mood.1–5 Six basic emotion types have been proposed, each with corresponding facial features: surprise, fear, anger, happiness, sadness, and disgust. These facial features reveal a person's real inner emotional reaction. Other studies have suggested that, besides facial expressions, speech recognition and physiological measurement can also serve as clues to inner emotional state.6–10 Whether posture reflects inner emotional state has been controversial, but a growing body of recent research argues that body language is associated with emotion and that recognizing it can serve as an important indicator of a person's inner emotions.11–16 This research holds that emotion is not only a tool for person-to-person communication,6 but can also be another means for humans to interact with computers. If we want computers to become smart, they need to have emotions of their own, as well as the ability to identify emotions and to respond to them. Therefore, to make human-computer interaction (HCI) mechanisms more human and more natural,17–20 emotion has been treated as a key factor in HCI in recent years, and attempts to incorporate affective computing into a wide range of applied research have increased.21,22 Currently, much affective computing research relies on facial emotion features or on physiological measurement to give appropriate feedback.
However, applications that use posture for emotion identification are less common, especially in guide systems. Although many applications use body actions as an operating tool for browsing 3D virtual environments, few analyze body language for emotion and add emotional feedback to the interaction. We therefore combine body-based emotion identification and affective computing in a 3D body-sensing guide system. The system analyzes the user's posture during operation to judge the emotion implied by the user's current body action. Besides planning movement in the 3D environment according to action instructions, it also judges the emotion conveyed by posture and gives appropriate feedback, with the aim of improving the usability22 of the 3D campus guide system and the interaction within the integrated system.

The development of the 3D campus guide system with affective computing can be roughly divided into three parts: building the 3D campus navigation environment, using body-sensing identification for posture judgment, and combining affective computing with body-based emotional feedback, as shown in Figure 1. Each part is explained below.

Campus tour

Unity is a free, multi-platform game engine with a user-friendly operating interface and support for varied interactive content. It is openly supported and offers a choice of three scripting languages: JavaScript, C#, and Boo. This study therefore selected Unity for system development and construction, and used JavaScript and C# to program the 3D campus guide system shown in Figure 2. The system is a case study covering the school library and the information management department. Users can freely view the exterior and interior of each floor, which are built from a real 3D model of the campus environment, and the system displays information about the corresponding area in real time, so that users can learn in more depth about the school's equipment and resources.

Body sense

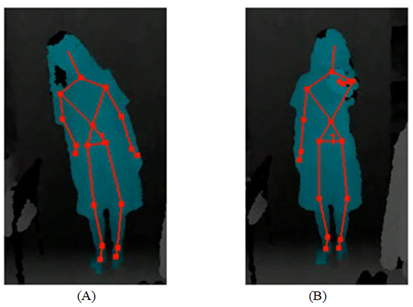

For body-sensing identification, this research uses a Kinect camera to recognize body movement. Because recognition is based on the positions of the user's body skeleton, the camera is set up about two meters from the user so that it can capture and identify the whole body. The user's physical actions are identified and used in the navigation system for touring the environment, for movement judgment, and as the physical basis for emotion identification.

Affective computing

Body actions are also combined with affective computing for emotion identification, so that the system can judge the interaction between the user and the system in real time from the user's emotional state and give feedback corresponding to the specified emotion type. This makes the interaction between the user and the system more natural and makes the system easier to operate. For example, when the system detects that a user viewing information shows interest, it displays more detailed information for that user. This avoids displaying too much information at once during the tour, which would cause confusion and visual fatigue.

Systematic development

As shown in Figure 3, the Kinect camera captures the user's depth information and skeleton to detect posture for identification, and the user interacts with the 3D campus guide system through it. The interactive content can be divided into general directive (movement) control, physical emotion recognition, and feedback.

Mobile posture control: The user performs the basic operations directly, and body movements control the movement and viewpoint of the virtual character. When the character is near a designated area, the system retrieves the corresponding content from the database and displays it on screen as a brief introduction to that area.

Physical emotion recognition: In this part, the study applies affective computing to identify emotion from the body. Three emotion categories were selected: anger, anxiety, and interest. The system judges them through posture detection and then makes the appropriate response and feedback.

Feedback information: The feedback information consists of the operating instruction description, the full regional environment map, and the complete detailed information content. The content rendered in response to each emotion follows the process in Figure 3, and the body movement instruction set is described in detail in the next section.
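The area lookup described under mobile posture control can be sketched as a simple proximity test. The Python below is illustrative only: the study's system is built in Unity with JavaScript and C#, and the `AREAS` records, the `nearby_area` function, the coordinates, and the trigger radii are hypothetical names and values that do not come from the study.

```python
import math

# Hypothetical area records standing in for the system's database content.
# Names, floor-plan coordinates (meters) and trigger radii are illustrative.
AREAS = [
    {"name": "Library lobby", "center": (0.0, 0.0), "radius": 3.0,
     "intro": "Brief introduction to the library lobby."},
    {"name": "Reading room", "center": (10.0, 0.0), "radius": 2.0,
     "intro": "Brief introduction to the reading room."},
]


def nearby_area(position, areas):
    """Return the closest area whose trigger radius contains position, or None."""
    best, best_dist = None, float("inf")
    for area in areas:
        dist = math.dist(position, area["center"])
        if dist <= area["radius"] and dist < best_dist:
            best, best_dist = area, dist
    return best


area = nearby_area((1.0, 1.0), AREAS)
if area is not None:
    print(area["intro"])
```

Returning `None` when no area is in range lets the caller leave the screen uncluttered, which matches the stated goal of not showing too much information at once.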

Position identification and emotional feedback

This section describes the instruction categories used in the system. The study proposes two main types: movement control and affective feedback information.

Movement control: Character movement uses the first-person controller in the navigation system built in Unity. Unlike a third-person controller, where the camera is mounted behind the avatar and follows it, a first-person controller does not show the avatar; the user sees only the view captured from the character's position. Character movement is controlled by identifying postures from the depth image information captured by the body-sensing camera, as shown in Figure 4. Table 1 lists the basic movement control instructions established for the system, so that the whole system can be operated through body posture identification.

| Action classes | Action behaviors | Interactive display contents |
|---|---|---|
| Right hand straight forward | Right hand extended straight forward by more than 30 cm | Role walks forward |
| Left hand straight forward | Left hand extended straight forward by more than 30 cm | Role walks backward |
| Body right tilt | Body tilts right by more than 15° | Role turns right |
| Body left tilt | Body tilts left by more than 15° | Role turns left |
| Jump | Both feet 10 cm above the ground | Role jumps |

Table 1 Posture and movement control instructions

Figure 4 Depth images from the body-sensing camera. (A) Body left tilt. (B) Right hand straight forward.
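The rules in Table 1 amount to a small threshold classifier. The following Python sketch shows one possible reading of them; it is not the study's Unity implementation. The `Posture` fields, the command names, and the order in which the rules are checked are assumptions made for illustration, and the measurements are assumed to have been derived already from the Kinect skeleton.

```python
from dataclasses import dataclass


@dataclass
class Posture:
    """Hypothetical per-frame measurements derived from the tracked skeleton."""
    right_hand_forward_cm: float  # how far the right hand is extended forward
    left_hand_forward_cm: float   # how far the left hand is extended forward
    lateral_tilt_deg: float       # positive = leaning right, negative = leaning left
    feet_height_cm: float         # height of the lower foot above the ground


def movement_command(p: Posture) -> str:
    """Map a posture to a Table 1 command; the rule priority is an assumption."""
    if p.feet_height_cm >= 10:
        return "jump"
    if p.right_hand_forward_cm > 30:
        return "walk_forward"
    if p.left_hand_forward_cm > 30:
        return "walk_backward"
    if p.lateral_tilt_deg > 15:
        return "turn_right"
    if p.lateral_tilt_deg < -15:
        return "turn_left"
    return "idle"


print(movement_command(Posture(35.0, 0.0, 0.0, 0.0)))
```

Checking the jump first and returning a single command per frame keeps the sketch simple; a real controller might instead allow walking and turning at the same time.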

Affective feedback information: Researchers4,7 have noted that body emotion can be roughly divided into positive and negative types. In positive emotion the upper body (head and chest) is more upright and may even lean backward, whereas in negative emotion it mostly appears bent. This study also draws on the body angles that researchers4 defined for emotional behavior as the basis for identifying body tilt angle, slightly amended to suit the system's requirements, as shown in Table 2. In addition, researchers3 proposed 12 body behavior modes, with posture descriptions and the related emotion types. These studies also show that in positive and negative emotions with larger fluctuations, such as interest and anger, the range of hand movement is also larger, whereas for low-fluctuation emotions, such as pleasure, anxiety, and worry, it is smaller. Based on the above, this study first distinguishes positive from negative emotion and then uses hand movement to identify the emotion expressed and to respond with the related content, as shown in Table 3.

| Emotion classes | Emotion types | Body emotion behavior |
|---|---|---|
| Positive emotion | Interest | Body straight up or backward tilt above 10° |
| Negative emotion | Anger, Anxiety | Body forward tilt above 30° |

Table 2 Positive and negative affection and body behavior
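To illustrate how the tilt thresholds in Table 2 could be applied, the Python sketch below estimates a forward tilt angle from two skeleton joints and classifies it. Several things here are assumptions rather than details from the study: the joints are taken as `(x, y, z)` tuples in meters with `y` pointing up and `z` measuring distance from the camera, the user faces the camera, and an upright torso is treated as anything within `UPRIGHT_TOLERANCE_DEG` of vertical. Because Table 2 counts both an upright and a backward-leaning torso as positive, the sketch folds them into a single test.

```python
import math

UPRIGHT_TOLERANCE_DEG = 5.0   # assumed tolerance for "straight up"
NEGATIVE_FORWARD_DEG = 30.0   # forward tilt threshold from Table 2


def forward_tilt_deg(hip, shoulder):
    """Torso tilt in degrees: positive leans toward the camera, negative away."""
    dy = shoulder[1] - hip[1]   # vertical extent of the torso
    dz = hip[2] - shoulder[2]   # shoulders closer to the camera => forward lean
    return math.degrees(math.atan2(dz, dy))


def emotion_class(tilt_deg):
    """Classify a tilt angle as 'positive', 'negative' or 'neutral'."""
    if tilt_deg > NEGATIVE_FORWARD_DEG:
        return "negative"
    if tilt_deg <= UPRIGHT_TOLERANCE_DEG:
        return "positive"   # upright, or leaning backward (including above 10 degrees)
    return "neutral"        # mild forward lean that Table 2 does not cover


tilt = forward_tilt_deg(hip=(0.0, 0.0, 2.0), shoulder=(0.0, 0.5, 1.5))
print(round(tilt, 1), emotion_class(tilt))
```

The `neutral` band is an addition of this sketch: Table 2 does not say how a forward lean below 30° should be treated.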

| Emotion class | Body emotion behavior | Feedback display contents |
|---|---|---|
| Anger | Body forward tilt, hands straight up with large movement | Display operation instruction |
| Anxiety | Body forward tilt, hands straight up or interlaced | Display local environment map |
| Interest | Body backward tilt or straight up, hands straight up with large movement | Display detail information contents |

Table 3 Emotion and body combination and affective feedback Information
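Table 3 can be read as a two-step lookup: the positive or negative class from body tilt, refined by hand movement, selects the feedback to display. The Python sketch below shows that reading under stated assumptions. Hand behavior is reduced to a single boolean, `large_hand_motion`, following the observation that anger and interest involve a larger range of hand movement than anxiety; the function names and the feedback identifiers are hypothetical.

```python
# Feedback per emotion, following the third column of Table 3.
FEEDBACK = {
    "anger": "show_operating_instructions",
    "anxiety": "show_local_environment_map",
    "interest": "show_detailed_information",
}


def identify_emotion(emotion_class, large_hand_motion):
    """Refine a 'positive'/'negative' class into one of the three emotions."""
    if emotion_class == "negative":
        return "anger" if large_hand_motion else "anxiety"
    if emotion_class == "positive" and large_hand_motion:
        return "interest"
    return None  # no emotion recognized, so no extra feedback


def feedback_for(emotion_class, large_hand_motion):
    """Return the feedback identifier to display, or None."""
    return FEEDBACK.get(identify_emotion(emotion_class, large_hand_motion))


print(feedback_for("negative", large_hand_motion=False))
```

Returning `None` for an unrecognized combination means the guide only adds feedback when one of the three selected emotions is detected.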

Users who have never used a 3D guide system are less comfortable operating one than experienced users. Although the system presents a description of the posture operations at the start, many studies have found that a user's familiarity with a system affects how the user feels about using it, so this research develops the interactive mechanism from the user's point of view. When a user encounters operational difficulties and feels unhappy or even angry, the system responds promptly to the user's action according to the description of the angry emotion. The interaction is also meant to offer diversified and personalized responses. When users are lost in the system environment, do not know where they are, and are anxious to find some place, the system responds with the complete map of the displayed floor and marks the current location, to help them quickly find where they are. In addition, when a user is interested in the environment information currently displayed, the system displays more related information so that the user can understand it further.

This system used the library and the department building as the case for the 3D campus guide system. The basic screen displays the floor region and a small map at the upper right, as shown in Figure 5, so that the user knows the current location and environment and can tour the environment through the basic movement control instructions. When the user moves the virtual character close to a specified area, the screen renders a brief introduction to the environment. If the user shows interest through body action while viewing the brief introduction, the system displays more detailed information according to the emotion judgment. This automatic rendering method, which combines affective computing with the interactive mechanism, is shown in Figure 6. Figure 6A shows the information displayed when the character approaches a content area; in Figure 6B, the system has determined from the user's posture that the user is interested, and it displays a more detailed description in the same area. Likewise, when the negative emotions of anxiety and anger are detected, the system provides real-time assistance based on the emotional feedback, as shown in Figure 7. In Figure 7A, the user shows an anxious body reaction, which the system interprets as a loss of direction in the environment, and it immediately displays the full floor map. In Figure 7B, the user shows an angry reaction on encountering a difficulty in operation, perhaps having forgotten the action instructions or not remembering a posture clearly, so the system displays the operating instruction description on the screen once again.

In the past, most research on affective computing used facial emotion, semantic identification, or physiological identification, for example using brain waves and pulse as features for emotion identification. However, body actions also reveal human emotional state, and in recent years more and more research has supported this view. In this research, therefore, a body-sensing method was used for human-machine interaction, with emotion expressed through body action driving further interaction and feedback. Moreover, the approach achieves the following advantages:

The representative of the authors' institute, the Engineering College of Hua Qiao University, is fully aware of this submission.

The author declares there is no conflict of interest.

©2018 Juang, et al. This is an open access article distributed under the terms of the, which permits unrestricted use, distribution, and build upon your work non-commercially.