eISSN: 2378-315X

Proceeding Volume 5 Issue 3

FSP Head, GCE Solutions Inc, India

Correspondence: Anant Avasthi, FSP Head, GCE Solutions Inc, India

Received: March 02, 2017 | Published: March 15, 2017

Citation: Avasthi A. What a man can think- machine can do! Biom Biostat Int J. 2017;5(3):105-106. DOI: 10.15406/bbij.2017.05.00135

Machine learning and artificial intelligence are next-generation technologies that will help us move beyond conventional ways of exploring data, especially in the field of clinical research. The research and development programmes run by the large pharmaceutical companies can be optimized, and the time taken to screen for a blockbuster molecule can be considerably reduced.

The concept presented in this abstract will help us develop novel ways of designing and optimizing clinical research. A data-driven approach will guide how we strategize drug development and will be a stepping stone in driving research. Current research focuses on reviewing and analyzing data in silos: the genomic scientist looks at the genomic data, the clinical data manager mostly looks at the clinical data, the toxicology expert looks at the preclinical data, and so forth. Rapid advances in information technology and in our statistical ways of modelling data give us an opportunity to integrate data from different sources and identify key links that may not be visible when looking at a single source of data.
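As a rough illustration of this kind of integration, the sketch below merges records from two siloed sources on a shared subject identifier and then asks a question that neither source could answer alone. Everything in it is hypothetical: the field names (`subject_id`, `variant_x`, `responder`), the toy records, and the helper functions are assumptions made for the example, not part of any real dataset or of the approach proposed here.

```python
from collections import defaultdict

# Hypothetical toy records; a shared subject_id is assumed to exist in every source.
genomic = [
    {"subject_id": "S01", "variant_x": True},
    {"subject_id": "S02", "variant_x": False},
    {"subject_id": "S03", "variant_x": True},
    {"subject_id": "S04", "variant_x": False},
]
clinical = [
    {"subject_id": "S01", "responder": True},
    {"subject_id": "S02", "responder": False},
    {"subject_id": "S03", "responder": True},
    {"subject_id": "S04", "responder": True},
]


def integrate(**sources):
    """Merge records from named sources into one dict of fields per subject.

    Field names are prefixed with the source name so that their origin stays visible.
    """
    merged = defaultdict(dict)
    for name, records in sources.items():
        for record in records:
            subject = record["subject_id"]
            for field, value in record.items():
                if field != "subject_id":
                    merged[subject][f"{name}.{field}"] = value
    return dict(merged)


def response_rate_by(merged, flag_field, outcome_field):
    """Outcome rate among subjects with and without a flag.

    Only subjects that have both fields are counted.
    """
    counts = {True: [0, 0], False: [0, 0]}  # flag -> [positive outcomes, total]
    for fields in merged.values():
        if flag_field in fields and outcome_field in fields:
            flag = bool(fields[flag_field])
            counts[flag][0] += int(bool(fields[outcome_field]))
            counts[flag][1] += 1
    return {flag: (pos / n if n else None) for flag, (pos, n) in counts.items()}


merged = integrate(genomic=genomic, clinical=clinical)
rates = response_rate_by(merged, "genomic.variant_x", "clinical.responder")
```

With these toy records, the response rate differs between carriers and non-carriers of the hypothetical variant, a link that only appears once the genomic and clinical records sit side by side. Real integration would also have to handle identifier mismatches, missing data, and far larger sample sizes before any such difference could be called a signal.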

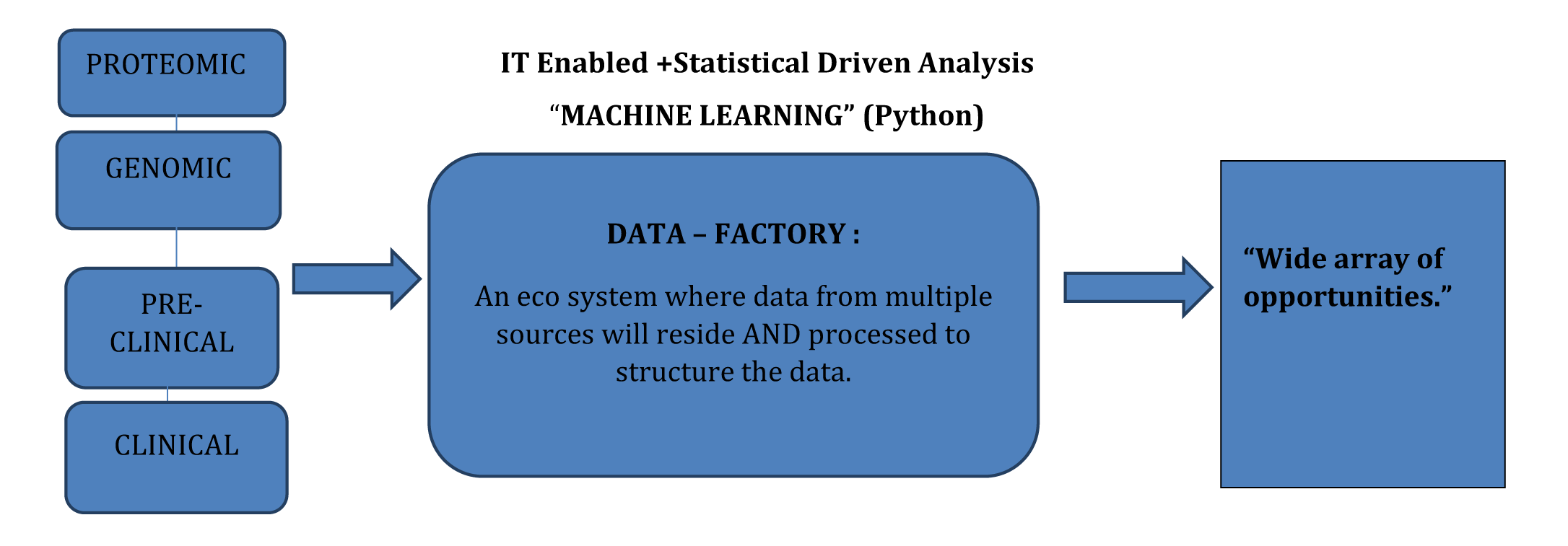

The idea is to develop an ecosystem in which data from multiple sources can sit and communicate with each other. This will provide a holistic view of the data across multiple, different sources. Once the data can communicate with each other, data mining can be performed to identify signals that can lead us to adaptive clinical trial designs and to the key elements in clinical research. Figure 1 summarizes the entire flow of data as conceptualized. When selecting between proteomic and genomic sources of data, preference should be given to proteomic data, because proteomics is the study of proteins, which are the functional molecules in the cell and represent its actual condition.

So, when drawing statistical inferences from the data, a higher score should be given to the proteomic database. Data standardization is another key factor that needs to be aligned, so that data from multiple sources can communicate and we can extract crucial information from the Data Factory. Data standards such as CDASH and SDTM (clinical) and SEND (nonclinical) will all come under a single umbrella, giving a uniform standard for understanding integrated data from the Data Factory. The next, crucial stage will be developing key algorithms that can familiarize themselves with the integrated data and help simulate a critical clinical research model. The future is exciting; the question is how quickly we can embrace this change.
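The higher score for proteomic evidence mentioned at the start of this paragraph could, for instance, be expressed as a weighted mean of per-source evidence scores. The sketch below is one possible reading only: the weights 0.6 and 0.4, the assumption that each source yields a score between 0 and 1, and the function name `weighted_score` are illustrative choices, since no specific values or scoring scheme are given here.

```python
# Illustrative weights only (an assumption): proteomic evidence counts for more
# than genomic evidence, but the actual values would need to be justified.
SOURCE_WEIGHTS = {"proteomic": 0.6, "genomic": 0.4}


def weighted_score(evidence, weights=SOURCE_WEIGHTS):
    """Weighted mean of per-source evidence scores, each assumed to lie in [0, 1].

    Sources absent from `evidence` are skipped and the remaining weights are
    renormalised, so a single available source returns its own score.
    """
    total = 0.0
    total_weight = 0.0
    for source, score in evidence.items():
        if source not in weights:
            raise KeyError(f"no weight defined for source {source!r}")
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score for {source!r} must be in [0, 1]")
        total += weights[source] * score
        total_weight += weights[source]
    if total_weight == 0.0:
        raise ValueError("no evidence supplied")
    return total / total_weight


# When the two sources disagree, the proteomic score pulls the result further.
proteomic_led = weighted_score({"proteomic": 1.0, "genomic": 0.0})
genomic_led = weighted_score({"proteomic": 0.0, "genomic": 1.0})
```

Under these assumed weights, a candidate supported only by proteomic evidence ends up with a higher combined score than one supported only by genomic evidence, which is the behaviour the preference for proteomic data implies.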

With such a deluge of data, cloud-based technologies and data science can provide deep insight and bring the data alive. Let’s step forward and unlock these mysteries.


©2017 Avasthi. This is an open access article distributed under the terms of the, which permits unrestricted use, distribution, and build upon your work non-commercially.
