eISSN: 2576-4543

Mini Review Volume 1 Issue 6

M Nodia Institute of Geophysics, I Javakhishvili Tbilisi State University, Georgia

Correspondence: Tamaz Chelidze, M Nodia Institute of Geophysics, I Javakhishvili Tbilisi State University, Aleksidze Street-0160, Tbilisi, Georgia

Received: August 23, 2019 | Published: December 19, 2017

Citation: Chelidze T. Complexity of seismic process: a mini–review. Phys Astron Int J. 2017;1(6):197-203. DOI: 10.15406/paij.2017.01.00035

At present, there are two major concepts, adopted for description of seismic process. The simplest of them, namely, Poissonian model, now dominant, is a basis of widely accepted modern methods of Probabilistic Seismic Hazard Assessment. According to this model, the seismic events are independent of each other, i.e. the long-term correlations are absent in seismic process, which means that it can be described in terms of classic Boltzman-Gibbs (B-G) thermodynamics. Last decades, application of modern methods of complexity analysis revealed undeniable arguments in favour of existence of long-term correlations in temporal, spatial and energy distributions of seismic events, leading to power-law distributions in all three domains. As a result, nonlinear (hidden) structures were discovered in seismic data sets and their characteristics were calculated: it turned out that they vary with time, which is in contradiction with memoryless purely Poissonian approach. There is a hope that the analysis of temporal variations of complexity (seismic) measures offer a challenge of more well founded forecasting strong earthquakes.

Keywords: thermodynamics, poissonian model, solids, seismic process, seismic hazard

EQ, earthquake; CS, complex system; AE, acoustic emission; ETAS, epidemic-type aftershock sequence; B-G, boltzman-gibbs; PSHA, probabilistic seismic hazard analysis; SHA, seismic hazard assessment; SOC, self-organized criticality; NESM, non-extensive statistical mechanics; IFS, iterated function systems; RP, recurrent plots; RQA, recurrent quantification analysis; SSA, singular spectral analysis; DFA, detrended fluctuation analysis; PSP, phase space plots.

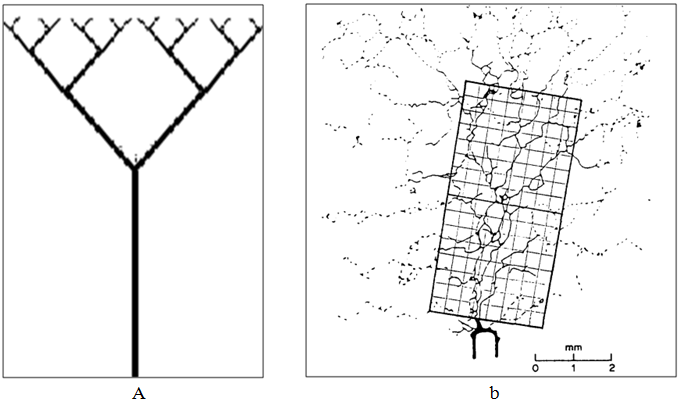

Several decades ago, geophysical objects and processes in Earth sciences were mainly considered as either random or deterministic. Complexity analysis reveals the enormous domain of structures and processes, located between completely random (white noise) and deterministic (Newton) extreme patterns and allows treating them in a quantitative manner. In this meso-scale domain, processes, though seem to be random, manifest certain nonlinear (hidden) temporal-spatial structures (Figure 1), which are invisible for routine statistical analysis, but what we can reveal by application of modern tools of nonlinear dynamics.1–6

There are many definitions of complexity. Almost all of them stress the following main properties of complex system (CS):

The fracture model of seismic process developed first as the application of fracture mechanics approach (one-crack-growth Griffiths model, damage mechanics model, kinetic theory of fracture) and later on-as the branch of fractal (percolation) theory,7 modeling fracture and elasticity of damaged (“dilute”) solids.8–12

Otsuka et al.,13 was probably the first, who got an idea on the possible application of percolation theory to description of seismic fracture process, but did not formulate it in the quantitative way. In14 suggested the percolation tree (branching) model, which considers fracture as evolution (propagation) of a single ramified crack till the critical state (Figure 2A). Chelidze et al.,8,15 suggested the percolation model of “diffuse” fracture process of solids, from the appearance of rare isolated cracks to formation of their clusters (due to interaction of close enough cracks) till the appearance of the so-called infinite cluster, which can be interpreted as a main fracture (Figure 2B). This critical point approach was further developed to describe fractal fracture energy of disordered solids,16 modeling acoustic and seismic emissions’ conditional amplitude sequence during fracture,17 role of anisotropy,18 etc. Chelidze et al.,7 shows that the conditional amplitude of elementary energy emission depends on the increment of the existing clusters’ size after addition of a single defect (“broken” site or bond). In turn this allow predicting power law distribution of amplitudes and appearance of such predictive signs of a critical state, as strong increase in the scatter of conditional amplitudes, increase of relative portion of strong events and, as a result, decrease of the magnitude-frequency relation slope or b-value. The same approach was used later by Sammis C et al.,19 and they obtained the similar expressions for . Allegre et al.,20 also developed scaling rule of rock fracture and suggested using it for earthquake prediction. The fractal models of elasticity and fracture were widely considered later on in many publications.12,21,22

Figure 2 A) Theoretical model of branched fracture; (B) Experimental network of cracks in the loaded thin slice of marble:95 even with a pre-executed groove (lower part of Figure, in the middle) at the initial stages of fracturing is developed the diffuse pattern of interacting cracks. Only on the final stage of fracture emerges the main crack, connecting several micro-cracks. The lattice, covering fracture network was used for calculation of two-dimensional (box) fractal dimension d=1.6.

Kinetic theory of fracture Zhurkov et al.,23 demonstrates that the “strength” of solid is just a limiting “engineering parameter” and the fundamental concept of fracture is the durability or the “lifetime’ of the solid under constant loading. The kinetic approach is founded on the idea that the decisive role in the destruction belongs to thermal fluctuations, which break the molecular bonds in the solid.23 If fracture mechanics was mainly aimed to finding the “limiting state” of the solid, such as critical stress, percolation and kinetic models describe the fracture as a developing process, which finishes by attainment of critical concentration of cracks or the end of the lifetime. Still, these models lack dynamical aspect: for example, the percolation theory of fracture is a good tool for description of the networks of interacting cracks and tectonic fractures24 and their clustering, but is not able to predict temporal behavior of fracturing system.

Seismicity is without doubt an example of complex system, as it demonstrates almost all characteristic features of complexity (section 1). Nonlinear approach swept into seismology from several directions: i. nonlinear effects in seismic waves’ propagation due to nonlinear elasticity of media; ii. nonlinearity in the earth material fracture/friction processes under stress on all scales from laboratory to earthquakes. The pioneering investigations in the first direction belong to AV Nikolaev and his colleagues.25 Later development is collected in the works of Guyer R et al.26 The second direction–analysis of complexity of earthquake (EQ) generation process, reflected in the seismic data sets–is in the focus of the contemporary seismology. The first evidence of seismic complexity revealed.27 Omori studied temporal correlation in the distribution of aftershocks’ number after the main shock: he obtained the first empirical power law for aftershock rate decay in time. After this, follows formulation of Gutenberg et al.,28 empirical law for earthquake frequency-magnitudes’ distribution. As a result, it was ascertained that the magnitude-frequency distribution of earthquakes (EQs) also obey simple scaling (power) law. That time the Gutenberg et al.,28 law was considered just as an empirical statistical rule. Only after introduction of the concept of fractal geometry of nature,29 the Gutenberg-Richter and Omori laws were interpreted as power-law scaling, characteristic for the complex fractal system.30–32 Fractal dimensions of EQs’ spatial and temporal (waiting times) distributions were first assessed correspondingly in.33–36 A comprehensive complexity analysis of seismic process is presented in.4,37,38

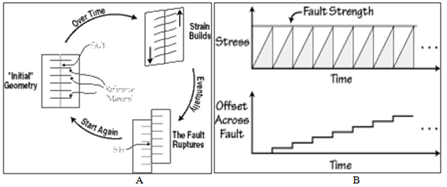

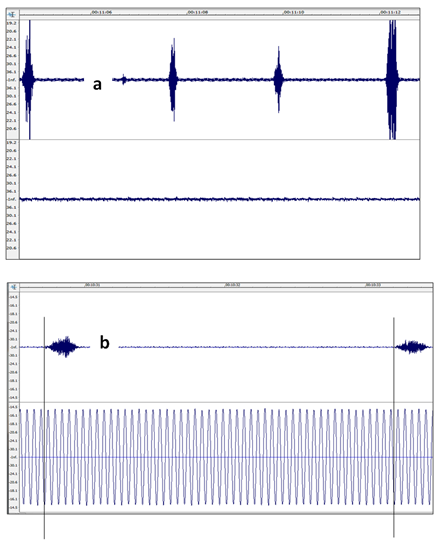

One of the main deficiencies of mechanical fracture models (section 2) is a lack of recurrence property, characteristic for the seismic fracture process (Figure 3). These fracture models work well for representation of just a single act of destruction, namely, they predicts some precursory effects of the final rupture of sample under stress or geological fault under tectonic force, as a result of approaching the critical state on the basis of analysis of sequence of events disregarding their dynamics in the time domain. Since a seminal paper of39 and several main succeeding works40–42 the stick-slip (Figure 4) is considered as the main mechanism, explaining the seismic process and recurrence of earthquakes. The temporal complexity of seismic process was revealed by dynamical friction instability (stick-slip) analysis, which leads to nonlinear Rate-and-State friction law formulation.42 Note that stick-slip is a typical example of very general and ubiquitous in nature integrate-and-fire complex physical systems with two time scales, where the slow nucleation (integrate, stick, accumulation) phase terminates necessarily, after approaching a threshold value, by a short slip (fire, stress drop) phase.43 Here we have to note that a half-century before Brace et al.,39,44 formulated the conceptual integrate-and-fire model of seismicity, so called elastic-rebound theory. Reid considered seismic cycles as the sequence of recurrent (sudden) releases of elastic strain energy, which accumulated slowly in the preceding period. Figure 4A presents screenshot of acoustic emission (AE) record during natural quasi-cyclic stick-slip process, observed on laboratory slider-spring system at driving a basalt block on the fixed basalt plate (upper trace) in the absence of forcing. It is evident, that the unforced stick-slip is a quasiperiodic process with a wide distribution of inter-event (waiting) times. Figure 4B shows stick-slip of the same slider-spring system, forced by weak periodical mechanical vibrations. This forcing (though much weaker than the pulling force of a spring) invokes the effect of phase synchronization: acoustic busts (slips) occur at definite phase of periodical forcing. As in all nonlinear integrate-and-fire systems, the stick-slip process is highly sensitive to a weak external forcing, which result triggering and synchronization phenomena.

Figure 3 The model of integrate-and-fire seismic cycle: (A) two blocks under stress are kept by friction from sliding (integrate stage). As the strain builds, eventually, the strain overcomes the frictional "strength" of the fault, producing earthquake, which releases the stored elastic strain (fire stage) after which situation returns to initial stage; (B) the process cycles periodically with some recurrence time. (http://eqseis.geosc.psu.edu).

Figure 4 (A) The screenshot of acoustic emission record, generated by slips during natural laboratory stick-slip process (upper channel). The lower channel shows that forcing is absent; (B) The screenshot AE record during stick-slip (upper channel), forced by periodical mechanical vibrations (lower channel). Periodical forcing is much weaker than the pulling force. Note the effect of phase synchronization: acoustic busts’ (slips’) onsets occur at a definite phase (vertical lines) of periodical forcing.45

The examples of natural integrate-and-fire systems are: i. laboratory experiments on triggering and synchronization of stick-slip process by weak mechanical or electrical forcing;44 ii. seismic activity, triggered by fluid injection in the wells;46,47 iii. reservoir triggered seismicity;48–51 iv. dynamical non-volcanic tremors, excited by and synchronized with teleseismic wave trains from the strong remote earthquakes;52 v. correspondence between global seismicity and Earth’s angular acceleration, found recently by Bendick et al.53 They believe that variations in the speed of Earth’s rotation, causing very small additional strains on the tectonic faults, could trigger seismic activity. The process is explained as the triggered seismic activity in the frame of integrate-and-fire model.

Note, that like generally in geophysics, there are direct and inverse problems in the earthquake time series analysis. Direct methods imply compilation of synthetic EQ sequences (catalogs) using some mathematical model, like stochastic approximation54 or epidemic pattern.34 The validation of such models is carried out by some statistical method, e.g. by maximal likelihood assessment, but is the close similarity of synthetic and natural catalogs enough for proving correctness of the adopted preliminary approximation? For example, we cannot differentiate between time series for random process and that of nonlinear system - logistic map - by their Fourier spectra, as they are similar. At the same time, they have quite different phase space plots (Poincare sections). The inverse approach presumes direct complexity analysis of experimental data (here EQ catalogs), without accepting any preliminary model, just looking for (hidden) structures, imprinted into time series by the natural processes, analyzing their fundamental characteristics and after this selecting the most appropriate physical model.

We can distinguish two major concepts, adopted for description of seismic hazard. The Poissonian model, suggested by Cornell 50 years ago55 is still dominant at present and is a basis of modern methods of Probabilistic Seismic Hazard Assessment or PSHA.56–59 According to this model, the seismic events are independent of each other and the statistical properties of seismic process are time-independent, i.e. the long-term correlations are absent in seismic process and it can be described in terms of classic Boltzman-Gibbs (B-G) thermodynamics. The Epidemic-Type Aftershock Sequence (ETAS) model, suggested by Ogata et al.,60 the best developed example of Poissonian methodology, also neglects the existence of memory and clustering in seismic sequences. Possibly, that is why PSHA maps fail in prediction of the main risk factor, the Peak Ground Acceleration in many areas of the world (Table 1). It is evident that the observed PGA values are much larger than predicted by the PSHA. These failures motivate the question “Why is the Probabilistic Seismic Hazard Analysis (PSHA) still used?”61 The matter is that despite of mentioned flaws, PSHA at present cannot be just “scraped”, as suggested Mulargia et al.,61 statistics shows that in developed countries the number of EQ victims is orders less than in developing ones, thanks to application of PSHA in former ones. The matter is that PSHA estimates are based on too short catalogs of strong EQs, decision process includes many expert assessments, founded on insufficient knowledge of EQ sources and their activity and, the most important, excludes time-space clustering of seismic events in a scale-free manner.

Expected (PSHA with Probability of Exceedance of 10% in 50 Years) and Observed PGA (g) for Recent Strong Earthquakes |

|||

EQ Location |

Date |

PGA, g |

PGA, g |

Kobe |

17.01. 1995 |

0.40–0.48 |

0.7–0.8 |

Gujarat |

26.01. 2011 |

0.16– 0.24 |

0.5–0.6 |

Boumerdes |

25.05.2003 |

0.08–0.16 |

0.3–0.4 |

Bam |

26.12.2003 |

0.16–0.24 |

0.7–0.8 |

E–Sichuan |

12.05.2008 |

0.16–0.24 |

0.6–0.8 |

Haiti |

12.01. 2010 |

0.08–0.16 |

0.3–0.6 |

Japan |

11.03. 2011 |

0.32–0.40 |

1.0–2.9 |

Table 1 Expected (PSHA with probability of exceedance of 10% in 50 years) and observed PGA (g) for recent strong earthquakes

From the above reasoning, it is evident that the fundamental property of complexity of seismic process should be taken into account in future seismic hazard assessment (SHA). This inevitably leads to need of developing time-dependent SHA, which is now in the embryonic stage. A big first step in development of complex model of seismic process was the concept of self-organized criticality–SOC.62 This model manifests all characteristic signatures of complexity: system consists of many components, which interact by exchange of energy and, due to these collective interactions, gain novel self-organized structure (phenomenon of emergency). The SOC belongs to the general class of self-sustained (relaxation, integrate-and-fire) oscillators with two time scales within each cycle: slow and fast (avalanche) intervals. It should be noted that the initial version of SOC does not demonstrate power law pattern for avalanches and excludes possibility of prediction of strong events; these deficiencies were eliminated in the later SOC versions.63

Sometimes the complexity analysis of seismic (earthquake) time series is considered as a branch of pure statistics. The statistical methods in complexity analysis are necessary to assess the robustness of computations of complexity parameters, as the existence of instabilities and amplification of weak effects in complex systems make the results of computations uncertain (not exactly reproducible), which calls for application of strict statistical analysis methods. Thus, statistics is only an auxiliary tool in the complexity analysis. At the same time, statistical physics, which explores physical interactions between components of the substance to explain the structure of real systems and processes, is universal tool for analysis of earthquake data sets, as it takes into account fundamental aspects of seismic process.64–68

One of main measures of a physical system is its entropy. B-G entropy cannot describe physical objects with large variability or multi-fractal systems.69,70 If in a simple fractal object, just a single exponent (fractal dimension) determines its dynamical behavior, description of multi-fractal systems’ dynamics needs introduction of a whole spectrum of exponents. Inspired by the concept of multi-fractality of complex objects and processes, Tsallis C et al.,69,70 proposed a generalization of the B-G statistics: a Non-Extensive Statistical Mechanics (NESM), as a tool for descripting dynamics of non-additive systems. In such systems, due to nonlinear interactions between components, the total entropy (so-called non-extensive entropy or Tsallis entropy) cannot be obtained by addition of entropies of components: this is one of the main signatures of complexity, namely, the property of emergency. In the limiting case of extensive (additive) system, Tsallis model reduces to B-G statistics. Application of Tsallis entropy measure provides new important information on the dynamics of seismic process.71–81

Many other tools of measuring complexity in experimental time series are developed by modern nonlinear dynamics,1–6 which give both qualitative and explicit methods for revealing hidden nonlinear structures. The basis of qualitative approach for reconstruction and testing of phase space objects, equivalent to the unknown dynamics, is using fundamental Taken’s time delay theorem.82 It is used for reconstruction of two and three-dimensional phase portraits (strange attractors), Poincare sections, calculation of Iterated Function Systems (IFS) and Recurrent Plots (RP).5,6,83 These methods preserve the general topological peculiarities of investigated dynamics and allow carrying visual, preliminary analysis of unknown dynamical process.

Besides qualitative methods of testing the phase space, such as mentioned Recurrent Plots, there are also robust methods of analyzing experimental time series. Namely, evolution of phase space trajectories may be analyzed by means of Lyapunov exponent (often by its maximal value, ) calculation. Ramified geometry of structures, reconstructed in the phase space, can be characterized by their fractal dimension (e.g. correlation dimension of time series), algorithmic complexity measures, Shannon and Tsallis entropies68,85 entropies. As the length of real seismic data sets is usually restricted, sometimes the use of the less sensitive to the time-series length method: recurrent quantification analysis (RQA) is preferable to more demanding Lyapunov-exponent and correlation-dimension calculations.2–6 For revealing hidden periodicities and synchronization phenomena in short and noisy time series, the methods of Singular Spectral Analysis (SSA) and Detrended Fluctuation Analysis (DFA) have been suggested.86,87

Below I present one example of application of phase space reconstruction method to earthquake (EQ) time series, which revealed nonlinear structures in the phase space plots (PSP) constructed for the area of strong Spitak earthquake (7 December 1988). The used catalog is covering the time long before, during and after the EQ occurrence. The whole EQ data sets from the test area catalog were declustered using Reasenberg algorithm89 and smoothed by the Savitzky-Golay filter.90 The results are presented as PSP, compiled in the following way:91 on the X axis are plotted the mean values of number N of EQs per n days and on the Y axis is plotted a differential of N for 10 days, i.e . The trajectories on PSP plots, obtained by connecting the consecutive phase space points in the clockwise direction (which corresponds to increase in time) form a “noisy attractor” with diffuse source area. Such attractors are common in biological systems.92

The phase space plots' analysis reveals some fine details of seismic process dynamics related to strong earthquake precursory/post-event patterns. The plots generally manifest two main features: a diffuse, but limited "source/basin" area, formed by a background seismicity and anomalous orbit-like deviations from the source area related to clusters, foreshock and aftershock activity. According to PSP in Figure 5, the deviating orbits are visible for 1967, 1971, 1986 and 1988. The largest orbit is definitely related to Spitak EQ (Dec. 1988) foreshock/aftershock activity. It seems informative to divide the most outlying orbit in Figure 5, marked as (1988. Dec) into pre- and post-Spitak EQ parts in order to assess duration of the "precursory" part of the trajectory. The full duration of the orbit is in Figure 5, starting at its exit from the “source” area and finishing at return to it, is approximately days. The duration of the "precursory" part is days and that of the “post-event” part days. Thus, a strong deviation of the orbit from the source area can be considered as the precursor of the strong event, due probably to foreshock (correlated) activity, not excluded fully by Reasenberg declustering. Indeed,93 show that after Reasenberg declustering many correlated events are still left in the catalog. As discussed earlier, the PSHA is using only declustered catalog, where non-stationary component is filtered: in other words, mainly stationary “source area” (Figure 5) is considered, but we see that the most interesting part connected with EQ preparation/relaxation, is just in nonstationary part, deviating from the stationary component. The above example illustrates significance of complexity approach for development of time-dependent seismic hazard assessment.94–95

Figure 5 Phase space portrait (noisy attractor) of daily series of EQ occurrences in the Spitak earthquake area (date: December 7, 1988, magnitude M=6.9), using 1960-2011seismic catalog for the distance R=100 km from the epicenter. The data were declustered and smoothed by the Savitzky-Golay filter. On the X axis are plotted the mean values of number N of EQs per n days and on the Y axis is plotted a differential of N for 10 days, i.e .

Early models of seismicity founded on the classic and fractal mechanics of fracture are useful for analysis of spatial patterns of fractures’ network, but lack the potential of predicting dynamics of process, such as recurrence. Application of modern tools of nonlinear dynamics reveal in seismic data sets nonlinear (hidden) temporal-spatial structures, like recurrence and clustering of earthquakes, which are invisible for or excluded by routine statistical analysis. The basis of Probabilistic Seismic Hazard Analysis (PSHA), aimed to assure safety of constructions, uses stationary Poissonian model of seismic process, which is in conflict with experimental data. As a result, PSHA understates effect of the strongest earthquakes of last decades. Complexity analysis shows that clustering and recurrence are essential features of real seismic data sets and these characteristics vary with time. This means that seismic process is time dependent and stationary approach is not enough to predict the risk of strong earthquakes. It is possible to measure complexity of seismic process and its variability with time using such effective tools of nonlinear dynamics, as Shannon or Tsallis entropy, Recurrence Quantification Analysis, phase space plot analysis, algorithmic complexity measures, etc. It is clear that application of mentioned modern tools of complexity theory will give strong impact to understanding the basic rules, governing seismic process and may be, in future will help to resolve the main problem of seismology-forecasting impending strong earthquakes.

Authors acknowledge financial support of the financial support of the Open Partial Agreement on the Major Disasters at the Council of Europe (Ref. No: GA/2017/08, FIMS PO No: 537534) and Shota Rustaveli National Science Foundation (Project No: 216732).

Author declares there is no conflict of interest.

©2017 Chelidze. This is an open access article distributed under the terms of the, which permits unrestricted use, distribution, and build upon your work non-commercially.