eISSN: 2378-315X

Research Article Volume 8 Issue 6

Department of Statistics and Epidemiology, Golgasht St., Tabriz University of Medical Sciences, Iran

Correspondence: Neda Gilani, Department of Statistics and Epidemiology, Golgasht St., Tabriz University of Medical Sciences, Faculty of Health, Iran, Postal code 51664, Tel (+98)9144141307, (+98) 4133565285

Received: July 12, 2019 | Published: December 5, 2019

Citation: Gilani N. Joint modeling of repeated ordinal measures and time to event data for CHD risk assessment. Biom Biostat Int J. 2019;8(6):204-212. DOI: 10.15406/bbij.2019.08.00290

Current joint models for longitudinal and time to event data are not sufficient for longitudinal ordinal outcomes. Three joint models for longitudinal ordinal variables and time to event data were suggested. In each joint model, cumulative logit, or continuation-ratio logit and or cumulative probit mixed effects models for the longitudinal ordinal outcome is associated with the time to event variable by random effects approach. Joint modeling likelihood approach, including ordinal repeated measure sub-model and time to event sub-model were constructed. A SAS macro on importance sampling procedure utilizing adaptive gaussian quadrature and conjugate gradient technique algorithm was used to obtain the maximum likelihood estimates of the parameters. This joint model provides inferences for both the longitudinal ordinal variables and time to event simultaneously. Also, a simulation study on various scenarios (concerning different sample size and different correlations among ordinal longitudinal variables) was conducted. The method was applied to the Tehran Lipid and Glucose Study (TLGS) data. The results in simulation study and real data set supports the applicability of the model.

Keywords: longitudinal data, survival, joint modeling, ordinal

TLGS, tehran lipid and glucose study; CONGRA, conjugate gradient; CLM, cumulative logit mixed effect model

In follow-up studies, both longitudinal and survival data are typically recorded for each sample unit. Since separate longitudinal and survival models are not ideal in this case, by the way the association between these two sets should be considered.1 In the past, the method was used to calculate this association that today of these methods is known as naïve methods. These methods can be cited Cox and two-stage models.2,3 Although these methods have advantages such as easy and quick calculation but using these methods the bias caused by measurement error or informative censoring of longitudinal variable cannot completely handled.4,5

Another way to use it more and more, the use of the class of models called "joint models for longitudinal and survival data " that actually includes the association structure between repeated measures and survival times is repetitive.6,7 This model was first proposed by Schluchter .8 Tsiatis and Davidian reviewed the motivation for and development of joint models; they deal with the structure of the likelihood for model parameters that illuminates the nature of common assumptions, and explain some suggestions for application and inference.9 in brief, using joint models, because of the correlation structure between variables it is possible to measure the similarity of the change in the different response variables from the model in addition to, the changes in the levels of one of the variables respond to changes at other levels leads to shall appear.10 However, most of the investigations are associated with continuous repeated measurements and studies considering the ordinal longitudinal variables are rare.11-13 In many situations instead of observing continuous outcomes, repeated ordinal outcomes are recorded over time. The joint modeling of ordinal longitudinal outcomes and the time to event data are quite enthusiastic in practice, however, have been less considered since turned out to be a little difficult and necessitates a very different methodology in modeling.11-14 Since, the data from ordinal level measurements increases the complexity of the likelihood, in this paper a methodology was developed whereby a joint likelihood, based on ordered longitudinal variable and time to event data, is maximized. This model consists of two parts: (I) different type of mixed effects ordinal regression sub-model to ordinal level trajectories which is a proportional odds model for the longitudinal ordinal measurements15 and (II) a parametric time to event model or survival sub-model.16,17 The two sub-models were linked via the joint distribution of the random effects in (I) and (II). The longitudinal sub-models used are cumulative logit, continuation-ratio logit and cumulative probit mixed effects models.15

Also, conjugate gradient (CONGRA) algorithm and importance sampling utilizing gaussian quadrature are used to optimize the joint likelihood function and approximate the integral likelihood function on random effects for jointly estimate the parameters addressed in longitudinal and time to event sub-models simultaneously as well as the association parameter and their precision.18,19

The rest of the paper is organized as follows: Section 2 describes the model and the likelihood. Section 3 describes computational method description (algorithm and execution). Section 4 describes a simulation study and the protocol. Section 5 describes application of the findings of the study on real data and finally sections 6 and 7 include discussion and concluding remarks on finding of this study.

As noted, this model consists of two parts: (I) The longitudinal sub-models used are cumulative logit, continuation-ratio logit and cumulative probit mixed effects models and (II) a parametric time to event model or survival sub-model.16,17 The two sub-models were linked via the joint distribution of the random effects in (I) and (II). Assuming that there are N subjects in the study, denotes longitudinal ordinal variable in time t for subject with categories. It is essential to note that in fact, there is no possibility of observing at any point in time and these observations are available only in specific time. Thus, longitudinal data observed includes measurements and is equal to the values of longitudinal measurements observed over times for subject . The proportional odds version of cumulative logit mixed effect model (CLM) for longitudinal ordinal outcome by conditioning on random component defined as

(1)

Where and are the design matrix of fixed and random effects; and β are category cut points and the vector of regression coefficients, respectively.15,20 Proportional-odds cumulative logit model is possibly the most popular logistic regression model for ordinal data.21

The continuation-ratio logits model(CRM) can be considered as such condition.

(2)

This model is applicable in cases where an subject should be present before being placed in category j in a higher category.15

The cumulative probit model (CPM) also can be written in this position which probit link function is the inverse of the standard normal cdf .

(3)

In cases where the main emphasis is on models with latent variables, particularly in econometrics; the cumulative probit model is used more than the cumulative logit model.22 For longitudinal sub-model, because of the similarity process, only cumulative logit mixed effect model is expressed in the following manner.

Considering a model (1) that are independent and identically distributed as

Where is unobserved real value of longitudinal measurement at time t and is monotonic link function. Because the value of contains the measurement error, then, is different from .

We assume are lative risk regression sub-model for the time to event data. Then the time to event sub-modelis as follows:

(5)

Where in is real survival time, is the history of unobserved real value of longitudinal measurement up to time t, is the baseline hazard function, is the vector of covariates corresponding to regression coefficients of γ, and finally the α is the association parameter for the expectation of the longitudinal endpoint and survival. Hence with this formulation, one assumes that the risk of an event at time t is dependent on the true value of the longitudinal outcome at that time.6 However, this assumption is unacceptable for survival function. Because in the terms of survival function, the model is as follow which will be incorporated in the joint model:

(6)

Among the methods to estimate the parameters in a joint model (23-28), the most popular is the method of maximum Likelihood.29,30 According this method, to combine the two sub-models of (I) and (II), the distribution of observed outcomes of is required where denotes the event indicator andadditionally, the time independent vector of random component . This component covers the association between the longitudinal and time to event sub-models as well as the relationship among the longitudinal measurements.

(6)

(7)

Where is the vector of parameters in time to event and longitudinal and random effects and is the dimensional vector of longitudinal measurements for i-th subject in the study. Assuming that the missing mechanism and visiting process are independent of real time to event and future longitudinal measurements, the i-th subject contributes the log likelihood as follow:

(8)

or

(9)

To clearly define the baseline hazard one straight forward choice is exponential hazard function which was used in this study. Also by assuming normal distribution for random component the likelihood would be of form below:

(10)

The logarithm of likelihood function is maximized with respect to using a standard algorithm.31,32

There are various optimization techniques for this likelihood function. Choosing an optimization algorithm depends on many factors for particular a problem that usually by trial and error can be achieved.18,33-36 In this paper we used Conjugate Gradient algorithm to optimize the likelihood function.18 CONGRA algorithm is best for large problems where the objective function and the gradient can be computed much faster than the Hessian and where too much memory is required to store the (approximate) Hessian. Although this algorithm requires many iterations but each iteration can be much faster.37 The main benefit of the technique is that the likelihood can be maximized straight forwardly by popular statistical packages such as SAS (Proc NLMIXED) which was used to estimate the parameters in this study. The inverse of Hessian matrix was computed to estimate the covariance matrix of the parameter estimates. Then the square root of the main diagonal entries of this matrix has given the standard error of each parameter.37 Also, importance sampling utilizing gaussian quadrature method is used to approximate the integral likelihood function on random effects.38 In this approach, obtaining the starting values the algorithm continue until converge with respect to the following criteria:18

(11)

Where is the value of parameters in the i-th iteration of .

To address the conditions under which the model would perform optimally, the simulation study was designed by various scenarios to assess the effect of changing in sample size (n=100, 200 and 500) and amount of correlation (r=0.1, 0.3, 0.5) among longitudinal measurements in 100 Monte Carlo samples. A user defined code in SAS 9.4 was used to generate the data in time to event sub-model and the GenOrd Package in R was used to generate data in longitudinalsub-model.39-41

To generate data in time to event sub-model, considering the exponential distribution for time to event, first the mean response was considered as a linear combination of in which the and are the intercept and slope of the regression. Also the covariate in the model is considered to be normally distributed . Afterwards to define the event as a binary variable (1: event, 0: non-event), a uniform random variable with mean equal to the mean of the aforementioned exponential distribution ( ) so that for time to event values higher than the value of this variable the observation considered as censor.

In the longitudinal sub-model, the ordinal variables were generated by a determined correlation matrix and marginal distribution. The marginal distribution of the variables is related to one another by Copula function; in the first step, the Gaussian copula functions are constructed to achieve the desired correlation matrix of variables. Then in the next step, samples are generated from the target distribution. In the GenOrd Package, Pearson and Spearman correlation coefficients are available. Also, the agreement between defined correlation and observed correlation among generated data, are tested and the boundaries of acceptable correlations are provided for defined marginal distribution.41 Finally, the generated data for these sub-models are integrated in the SAS to fit the proposed joint model of time to event and ordinal longitudinal outcome.

The results of simulations are presented in Figure 1 and Figure 2 considering bias, bias percentage and mean square of error (MSE) as the measure of evaluation.42 The charts of Figure 1 showed that by increasing the sample size, bias, bias percentage and MSE is reduced in constant correlation among longitudinal measurements scenario. In particular, for n=50 to the other samples, bias, bias and MSE of the difference is obvious more. Also, according to the charts of Figure 2, mostly by increasing the correlation among longitudinal measurements, bias, bias percentage and MSE is reduced in constant sample size scenario.

Hypertension is one of the most important modifiable risk factors for coronary heart diseases and problems are significant negative consequences on health and economy as well.43 studies show that treatment and control of hypertension can reduce the risk of cardiovascular disease and its complications.44-47 With respect to the blood pressure (BP) is changing with over time and life styles, thus the regular monitoring of these repeated measurements provide more useful information than a single measurement. In addition, the intervention and control other risk factors that affect both hypertension and CHD, is of great importance. Typically, classical modeling does not consider dependencies between longitudinal measures of anthropometric indices and CHD incidence. Therefore, in this study we utilized a joint modeling of longitudinal measures of BP levels and CHD risk, to know whether this outcome can be a significant indicator of predicting CHD incidence.

This study was performed under the framework of the Tehran Lipid and Glucose Study (TLGS), a community-based longitudinal study in four phases (phase I: (1999-2001), phase II: (2002-2005), phase III: (2006-2008) and phase IV: (2009-2011)), from district 13 of Tehran, the capital city of Iran. A multistage cluster random sampling technique was used to select more than 15000 people ages> 3y. All participants were followed for any hospitalized or death event annually up to 20 March 2012.48

In the current study, we first considered all participants age≥30 years and excluded individuals with a history of Cardio Vascular Disease (CVD) or stroke (n=487), participants without any blood pressure data (n=32), and subjects without follow-up of Coronary Heart Diseases (CHD) (n=715). Finally, 3784 women and 2889 men were recruited into the study. Informed written consent was obtained from all participants. The study was approved by the ethics committee of the Research Institute for Endocrine Sciences, Shahid Beheshti University of Medical Sciences, Tehran, Iran.

For the measurement of the ordinal longitudinal variable (BP), definitions and classification is based on ESH/ESC Guidelines for the management of arterial hypertension levels (optimal, normal, high normal and hypertension).49 Also coronary heart disease, as the time to event outcome of this study was definite as myocardial infarction, unstable angina pectoris, angiography proven CHD and CHD death. All of them are comparable with ICD10 rubric I20–I25. The event and its corresponding date were confirmed by an outcome committee.48 Baseline covariates included in joint model are as follows: the high risk age group, ages 45 and 55 years or higher in men and women respectively; Body Mass Index (BMI) was calculated weight (kg) divided by the square of the height (m2); hypercholesterolemia, serum cholesterol ≥6.2 mmol/l (240 mg/dl) or taking cholesterol lowering medication;; diabetes, fasting plasma glucose ≥7.0 mmol/l (126 mg/dl) or 2 hours post load glucose ≥11.1 mmol/l (200 mg/dl) or taking any medication for diabetes; low HDL cholesterol, HDL cholesterol <1.03 mmol/l (40 mg/dl);tobacco consumption, any tobacco product (cigarette, pipe, water pipe) in the past or currently on a regular or occasional basis; family history of CVD, history of myocardial infarction or stroke or sudden cardiac death in a male first degree relative <55 years or female first degree relative <65 and the high risk age group, ages 45 and 55 years or higher in men and women respectively. Details of data collection in TLGS have been published previously (48).

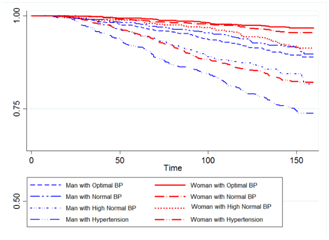

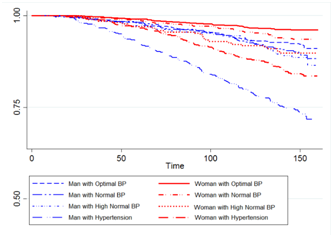

Figure 3 presents Kaplan-Meier estimates of survival time separated by sex and blood pressure levels in four Phase. It shows that the men at every level of blood pressure were more likely to have CHD than women were the same level of blood pressure. We have used the following CLM, CRM and CPM longitudinal and time to event model to analyze the data set. Wu-Hausman Specification Test is used to choose between fixed effects model or a random effects model in the analysis of data over time(50).CLM mixed effects model with random intercept and random slope is

(12)

CRM mixed effects model with random intercept and random slope is

(13)

CPM mixed effects model with random intercept and random slope is

(14)

Phase I Phase II

Phase III Phase IV

Figure 3 Estimated of Kaplan-Meier survival functions by sex and blood pressure levels in four Phases.

And, for the time to event data, using (5), we have considered a relative risk regression with the exponential function for baseline (according to Anderson-Darling Test) and with proportionality assumption (according to Therneau's Test).

Table 1 shows the results of the joint modeling on the data set. Three longitudinal models are compared using AIC and C criteria which show that the CRM model has the better fit to the data and a decline in standard error estimates. Also the obtained results from joint modeling showed that the risk of coronary heart diseases increases about 20% and 44% times in females and males, respectively, with increasing one level of the blood pressure (P<0.001).

The joint modeling of longitudinal and time-to-event data is a progressing area of statistical research thathas been used extensively in recent years.51,54 Thismodels also can be used when the main aim of the research is on longitudinal analysis with non-random dropout, and in cases where the target is on survival analysis with consideration of an endogenous time-dependent covariate.55 Andrinopoulou et al. suggested a joint model to investigate the degree of association between the trend of the repeated measures of aortic gradient and aortic regurgitation and time-to-events of death and reoperation.56

Concerning the endogenous nature of the ordinal longitudinal measures the two stage modeling, as utilized by other studies3,57,58 didn’t perform in our data well (as indicated by AIC). In such cases, since the models can’t be taking into account the measurement error in time dependent covariates, therefore the estimates of parameters are biased.10

In this study, the proposed model covers existing methods to handle longitudinal ordinal data using proportional odds models including cumulative logit, probit and continuation ratio models. As identified by Agresti15 in our data with sequential mechanism the latest model perform better than the others based on AIC. On the other hand, the estimates provided by this model is rather smaller than those provided by cumulative logit and probit models because this model estimates the probability in a defined category with respect to lower or higher order categories while in the probit or cumulative logit models, the probabilities are estimated in global scale.

Additionally, considering AIC and Cox Snell residuals, the exponential model had better fit in this data (results not shown). In this case the inferences are more accurate than the semi parametric cox model.59,60

Importance sampling procedure was carried out as a simple and effective approach to optimize model’s numerical integration and one of its advantages is the utilizing standard statistical packages such as PROC NLMIXED in SAS. Comparing to other integration approaches, importance sampling technique is a suitable procedure and one of its advantages is the utilizing standard statistical packages such as PROC NLMIXED in SAS to maximize the likelihood easily.19 NLMIXED is the powerful computational procedure to optimize linear and nonlinear models with normal random effects including various optimization algorithms.37

There was a substantial computation in the models provided; hence the “CONGRA” algorithm was used to optimize the models. The first-derivative method CONGRA is paramount for large computations where the objective function and the gradient can be subtracted much faster than the Hessian and where high amount of memory is required to save the Hessian matrix.37

Also, our simulation study showed that by increasing the sample size as well as correlations among ordinal longitudinal measures, the bias and mean square error of the parameter estimates have improved. These finding are in the line with those found by others,42,61 which can be reasoned by greater amount of heterogeneity in subjects along with higher correlations among longitudinal measurements. In the joint model with random effect, the independence of time to event and longitudinal process is being guaranteed by conditioned on random effect.62

The values of association parameter (α) is shown a significant association between changes in the two outcomes (P<0.001). In other words, that the risk of coronary heart diseases increases about 23% and 46% times in females and males, respectively, with increasing one level of the blood pressure. If Player and colleagues review, which aims to investigate the relationship between hypertension and cardiovascular disease was conducted, the results showed that with increased incidence of hypertension, the risk of cardiovascular disease increases.63 In a meta-analysis of 68 clinical exam and a 10-year follow-up period, it was shown that mmHg10 reduction in systolic blood pressure or diastolic blood pressure and reduce the incidence of cardiovascular disease is.64

One of the main shortcomings of joint models especially those proposed in this model, is the low convergence of corresponding algorithms and hence needing to high amount of computational power. Future direction in this regard can be proposed to investigate faster algorithm and specific software to implement this technique.

Also, the models can be adopted to cover the competing risks in the time to event sub- model. In addition, the longitudinal process is usually multifactorial and can be measured in the multivariate framework and the can be assessed in the longitudinal sub-modelin multivariate fashion.

Taking into account the prior information on parameter estimate as well as to achieve the exact inferences especially in smaller sample size, bayesian form of the proposed models are recommended to be investigated.

The authors acknowledge the TLGS participants and field investigators for their assistance in physical examinations, biochemical and database management.

The authors declare that there are no conflicts of interest.

©2019 Gilani. This is an open access article distributed under the terms of the, which permits unrestricted use, distribution, and build upon your work non-commercially.

2 7