eISSN: 2378-315X

Research Article Volume 6 Issue 3

Dept. of Statistics & Applied Probability, University of California, USA

Correspondence: Nava MM, Jammalamadaka SR (2017) A Preliminary Test Estimator in Circular Statistics. Biom Biostat Int J 6(3): 00168.

Received: July 26, 2017 | Published: August 30, 2017

Citation: Nava MM, Jammalamadaka SR. A preliminary test estimator in circular statistics. Biom Biostat Int J. 2017;6(3):342-348. DOI: 10.15406/bbij.2017.06.00168

The von Mises distribution plays a pivotal role in circular data analysis. This paper addresses the question of how much better one can do in estimating its concentration parameter if partial information is available on the mean direction, either (i) through a prior or (ii) through a “pre-test”. These two alternative scenarios and the resulting estimators are compared with the standard maximum likelihood estimator, and we explore when one estimator is superior to another.

Keywords: preliminary test estimator, circular statistics, circular normal distribution, concentration parameter, maximum likelihood estimates, Bayesian, mean square error, nuisance parameters

Saleh AME1 provides an introduction to, and a thorough review of, preliminary test estimators (PTEs) and Stein-type estimators for various linear models. In statistical inference, the use of prior information on other parameters in a statistical model usually leads to improved inference on the parameter of interest. Prior information may be (i) known and deterministic, in which case it is incorporated into the model as constraints on the parameter space, leading to a restricted model, or (ii) uncertain and specified in the form of a prior distribution or a verified null hypothesis. In case (ii), choosing certain restricted estimators may be justified when the prior information can be quantified, i.e., when it comes with known confidence levels.

In some statistical models, certain parameters are of primary interest while other parameters may be considered nuisance parameters. One way to mitigate the presence of nuisance parameters is to assess what value(s) they take by a preliminary test whose null hypothesis restricts the nuisance parameter values. The null hypothesized value(s) of the nuisance parameter are either used or not, depending on whether the observed preliminary test statistic falls in the acceptance or the rejection region of the test. Our final estimator of the parameter of interest is thus a linear combination of the restricted and unrestricted estimators, with weights given by indicators of whether the preliminary test statistic is in the acceptance or rejection region; it is called a Preliminary Test Estimator (PTE). The works in references 2, 3 and 4 were among the first to implement the idea of preliminary test estimation in an analysis of variance (ANOVA) framework, analyzing the effect of the preliminary test on the estimation of variance. The idea goes back to a suggestion5 for testing the difference between two means after first testing for the equality of variances: if the variance test indicates equality, the usual t-test with the pooled estimate of variance is used; otherwise, the problem falls into the category of the Behrens-Fisher problem. In these problems it became clear that the performance of the PTE depends heavily on the significance level of the preliminary test. Han et al.6 were the first to attempt to find an optimal significance level for the preliminary test in this two-sample problem.

All Stein-type estimators7,8 involve an appropriate test statistic for testing the adequacy of uncertain prior information on the parameter space, and this statistic is incorporated into the actual formulation of the estimator. A Stein-type estimator adjusts the unrestricted estimator by an amount proportional to the difference between the unrestricted and restricted estimators, scaled by a function of the test statistic for the uncertain prior information. Usually, the test statistic is a normalized distance between the unrestricted and restricted estimators and follows a noncentral chi-square or an F distribution. The risk, or MSE, of a Stein-type estimator depends on the noncentrality parameter, which represents the distance between the full model and the restricted model. The PTE may be considered a precursor of the Stein-type estimator: replacing the indicator function $I(L>c)$ in the PTE, where $L$ is the test statistic, by the smooth factor $1-cL^{-1}$ leads to a Stein-type estimator.
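To make the relationship concrete, the sketch below writes both estimators in the generic form commonly used for them (for example, in Saleh's review1), given an unrestricted estimate, a restricted estimate, a test statistic L and a critical value. The function names and the shrinkage constant `c` are illustrative assumptions, not notation from this paper.

```python
# Illustrative sketch; function names and the constant c are hypothetical.


def preliminary_test_estimate(unrestricted, restricted, test_stat, critical_value):
    """PTE: use the restricted estimate unless the preliminary test rejects.

    Equivalent to restricted + I(L > c) * (unrestricted - restricted).
    """
    reject = test_stat > critical_value
    return unrestricted if reject else restricted


def stein_type_estimate(unrestricted, restricted, test_stat, c):
    """Stein-type estimate: the indicator I(L > c) is replaced by the smooth factor 1 - c / L.

    Equivalent to unrestricted - (c / L) * (unrestricted - restricted).
    """
    return restricted + (1.0 - c / test_stat) * (unrestricted - restricted)


if __name__ == "__main__":
    # Hypothetical numbers: unrestricted 5.0, restricted 3.0, test statistic 4.0.
    print(preliminary_test_estimate(5.0, 3.0, 4.0, critical_value=3.84))  # test rejects -> 5.0
    print(stein_type_estimate(5.0, 3.0, 4.0, c=2.0))                      # shrunk part-way -> 4.0
```

The PTE jumps between the two estimates, whereas the Stein-type version moves smoothly between them as the test statistic changes.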

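The circular normal distribution (CND), also known as the von Mises distribution, is the most widely used distribution in circular statistics. It plays as central a role as the Normal distribution does in usual ‘linear’ statistics. The probability density of a CND with mean direction $\mu$ and concentration parameter $\kappa$, denoted by CND($\mu,\kappa$), is:

$$f(\theta;\mu,\kappa)=\frac{1}{2\pi I_0(\kappa)}\exp\{\kappa\cos(\theta-\mu)\},\qquad 0\le\theta<2\pi,\tag{1}$$

where $0\le\mu<2\pi$, $\kappa\ge 0$, and $I_0(\kappa)=\frac{1}{2\pi}\int_0^{2\pi}\exp(\kappa\cos\phi)\,d\phi$ is the modified Bessel function of the first kind and order zero.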

The mean direction $\mu$ is also referred to as the preferred direction, and the concentration parameter $\kappa$ can be thought of as analogous to the inverse of the variance, since it measures concentration around the mean direction. A larger value of $\kappa$ implies that observations are more concentrated around the mean direction, while a value of $\kappa$ close to 0 implies there may not be a strongly preferred direction. We now consider the maximum likelihood estimates (MLEs) of $\kappa$ in a classical and in a Bayesian setting.
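As a quick numerical check of (1), the sketch below evaluates the CND density with SciPy. It assumes SciPy's exponentially scaled Bessel function `scipy.special.i0e`, which returns $e^{-\kappa}I_0(\kappa)$ and keeps the computation stable for large κ; the function name `cnd_pdf` is our own.

```python
import numpy as np
from scipy.special import i0e

# Illustrative sketch; cnd_pdf is a hypothetical helper name.


def cnd_pdf(theta, mu, kappa):
    """Density (1) of CND(mu, kappa).

    exp(kappa*cos(theta - mu)) / (2*pi*I0(kappa)) is evaluated as
    exp(kappa*(cos(theta - mu) - 1)) / (2*pi*i0e(kappa)), since i0e(k) = exp(-k)*I0(k).
    """
    theta = np.asarray(theta, dtype=float)
    return np.exp(kappa * (np.cos(theta - mu) - 1.0)) / (2.0 * np.pi * i0e(kappa))


if __name__ == "__main__":
    grid = np.linspace(0.0, 2.0 * np.pi, 4096, endpoint=False)
    for kappa in (0.0, 0.5, 3.0, 50.0):
        # Rectangle rule on a periodic integrand: the total mass should be 1.
        mass = cnd_pdf(grid, mu=1.0, kappa=kappa).mean() * 2.0 * np.pi
        print(kappa, round(mass, 10))
```

Larger κ concentrates the mass around µ, and κ = 0 gives the uniform density 1/(2π).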

Maximum likelihood estimate for the concentration parameter

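Given a random sample $\theta_1,\dots,\theta_n$ from a CND($\mu,\kappa$), the MLE of $\kappa$ when $\mu$ is unknown is given by Jammalamadaka SR9 as the solution of

$$A(\hat\kappa_{MLE})=\frac{1}{n}\sum_{i=1}^{n}\cos(\theta_i-\bar\theta)=\bar R,\qquad A(\kappa)=\frac{I_1(\kappa)}{I_0(\kappa)},\tag{2}$$

where $\bar\theta$ is the sample mean direction, $\bar R$ is the mean resultant length, and $I_1$ is the modified Bessel function of the first kind and order one.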

When the mean direction $\mu$ is known, the MLE of $\kappa$ is obtained by substituting $\mu$ in place of $\bar\theta$ in (2), i.e., by solving $A(\hat\kappa_\mu)=\frac{1}{n}\sum_{i=1}^{n}\cos(\theta_i-\mu)$. Since the estimation of the concentration parameter is of main interest here, we denote by $\hat\kappa_{MLE}$ and $\hat\kappa_\mu$ the MLEs of $\kappa$ when the sample mean direction is used ($\mu$ unknown) and when the mean direction is known, respectively. In both cases the MLEs have the usual asymptotic properties. Analogous to the case of a linear Normal distribution, $\hat\kappa_\mu$ is superior to (has smaller MSE than) $\hat\kappa_{MLE}$.9

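If the sample comes from a population with mean direction $\mu$, then9

$$\frac{1}{n}\sum_{i=1}^{n}\cos(\theta_i-\mu)\le\bar R\qquad\text{and hence, since }A\text{ is increasing,}\qquad\hat\kappa_\mu\le\hat\kappa_{MLE},\tag{3}$$

where we have equality if and only if $\mu=\bar\theta$. We denote $\bar R=R/n$ and define $\bar\theta$ by $\cos\bar\theta=C/R$ and $\sin\bar\theta=S/R$, where $C=\sum_{i}\cos\theta_i$, $S=\sum_{i}\sin\theta_i$, and $R=\sqrt{C^2+S^2}$.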
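The sketch below computes both MLEs numerically and illustrates (3). It assumes NumPy and SciPy; `A`, `A_inv`, `kappa_mle` and `kappa_known_mean` are hypothetical helper names. The Bessel ratio uses the scaled functions `i1e`/`i0e`, and $A(\kappa)=t$ is inverted with Brent's method (`scipy.optimize.brentq`), which is valid because $A$ increases strictly from 0 to 1.

```python
import numpy as np
from scipy.optimize import brentq
from scipy.special import i0e, i1e

# Illustrative sketch; helper names are hypothetical.


def A(x):
    """Bessel ratio A(x) = I1(x)/I0(x) (the exponential scaling cancels)."""
    return i1e(x) / i0e(x)


def A_inv(t):
    """Solve A(k) = t for k >= 0; returns 0 when t <= 0 (boundary estimate)."""
    if t <= 0.0:
        return 0.0
    if t >= 1.0:
        return np.inf
    # 1 - A(k) is roughly 1/(2k), so this upper end always brackets the root.
    upper = max(10.0, 10.0 / (1.0 - t))
    return brentq(lambda k: A(k) - t, 0.0, upper)


def kappa_mle(theta):
    """Equation (2): A(kappa_hat) = R_bar, with the mean direction unknown."""
    rbar = np.hypot(np.cos(theta).mean(), np.sin(theta).mean())
    return A_inv(rbar)


def kappa_known_mean(theta, mu):
    """MLE of kappa when mu is known: A(kappa_hat) = mean of cos(theta_i - mu)."""
    return A_inv(np.mean(np.cos(theta - mu)))


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    theta = rng.vonmises(1.0, 2.0, size=50)
    theta_bar = np.arctan2(np.sin(theta).sum(), np.cos(theta).sum())
    print("kappa_MLE      :", kappa_mle(theta))
    print("kappa_mu (mu=1):", kappa_known_mean(theta, 1.0))          # never exceeds kappa_MLE, by (3)
    print("kappa at theta_bar:", kappa_known_mean(theta, theta_bar))  # equals kappa_MLE
```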

This raises the question of whether we can do something in between if we have partial information on µ.

MLE for κ when there is a prior on µ

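In this semi-Bayesian setting we place a prior on the nuisance mean direction $\mu$, a convenient choice being a CN distribution:

$$\pi(\mu)=\frac{1}{2\pi I_0(\tau)}\exp\{\tau\cos(\mu-\mu_0)\},\qquad 0\le\mu<2\pi,\tag{4}$$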

where $\mu_0$ and $\tau$ are the mean direction and concentration parameters of the prior. The value of τ measures confidence in the prior mean direction $\mu_0$: a larger value of τ makes the prior distribution more concentrated around $\mu_0$, and τ = 0 implies a uniform prior on $[0,2\pi)$ for µ.

In this context, the parameter µ has a prior distribution, while the parameter κ is an unknown parameter as in the classical setting. The parameter κ is of interest, while µ is the nuisance parameter. We thus blend together classical and Bayesian methods to get an estimate of κ.

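We begin with the usual likelihood given independent and identically distributed data $\theta_1,\dots,\theta_n$:

$$L(\mu,\kappa)=\prod_{i=1}^{n}f(\theta_i;\mu,\kappa)=\frac{1}{(2\pi)^nI_0(\kappa)^n}\exp\Big\{\kappa\sum_{i=1}^{n}\cos(\theta_i-\mu)\Big\}.\tag{5}$$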

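Given the prior distribution on µ, we wish to estimate the concentration parameter κ. We derive the likelihood function for κ by first averaging out our prior knowledge on µ. The result is the likelihood for κ given by

$$L(\kappa)=\int_0^{2\pi}L(\mu,\kappa)\,\pi(\mu)\,d\mu,\tag{6}$$

where $\pi(\mu)$ denotes the prior density of µ.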

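In (6), we begin with the joint likelihood of µ and κ, which is just the joint density of the data. We then derive the marginal distribution of the observations by integrating with respect to µ. After incorporating our prior knowledge of µ and integrating with respect to µ, we obtain a valid likelihood for κ, which we want to maximize with respect to κ. Writing $C=\sum_i\cos\theta_i$ and $S=\sum_i\sin\theta_i$,

$$L(\kappa)=\frac{1}{(2\pi)^{n+1}I_0(\kappa)^nI_0(\tau)}\int_0^{2\pi}\exp\Big\{\kappa\sum_{i=1}^{n}\cos(\theta_i-\mu)+\tau\cos(\mu-\mu_0)\Big\}\,d\mu\tag{7}$$

$$=\frac{1}{(2\pi)^{n+1}I_0(\kappa)^nI_0(\tau)}\int_0^{2\pi}\exp\big\{(\kappa C+\tau\cos\mu_0)\cos\mu+(\kappa S+\tau\sin\mu_0)\sin\mu\big\}\,d\mu.\tag{8}$$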

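Putting $R_\kappa=\sqrt{(\kappa C+\tau\cos\mu_0)^2+(\kappa S+\tau\sin\mu_0)^2}$ and $\mu_\kappa=\operatorname{atan2}(\kappa S+\tau\sin\mu_0,\ \kappa C+\tau\cos\mu_0)$, the exponent in (8) equals $R_\kappa\cos(\mu-\mu_\kappa)$, and by the definition of the Bessel function, $I_0(x)=\frac{1}{2\pi}\int_0^{2\pi}\exp\{x\cos(\mu-\mu_\kappa)\}\,d\mu$, the integral in (8) gives our likelihood for κ:

$$L(\kappa)=\frac{I_0(R_\kappa)}{(2\pi)^nI_0(\kappa)^nI_0(\tau)},\qquad R_\kappa^2=\kappa^2R^2+\tau^2+2\kappa\tau R\cos(\bar\theta-\mu_0),\tag{9}$$

where $R$ and $\bar\theta$ are the sample resultant length and mean direction.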

The likelihood is a ratio of Bessel functions. Given the likelihood, the prior distribution on µ, and the data, we can find the MLE of κ. There is no simple analytical solution for the MLE, so numerical methods are required to maximize (9) with respect to κ, leading to the semi-Bayesian MLE $\hat\kappa_B$.
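A minimal sketch of the numerical maximization of (9), assuming NumPy and SciPy. It uses the closed form of $R_\kappa$ in terms of $C$ and $S$, works on the log scale via the scaled Bessel function, and hands the one-dimensional problem to `scipy.optimize.minimize_scalar` with a bounded search; the search interval `(1e-8, upper)` and all function names are our assumptions. As a sanity check, a very concentrated prior centred at $\mu_0$ should reproduce the known-mean MLE $\hat\kappa_{\mu_0}$, while τ = 0 gives the uniform-prior estimator discussed next.

```python
import numpy as np
from scipy.optimize import brentq, minimize_scalar
from scipy.special import i0e, i1e

# Illustrative sketch; function names and the search interval are assumptions.


def log_i0(x):
    """log I0(x) computed stably as log(i0e(x)) + x."""
    return np.log(i0e(x)) + x


def semi_bayes_loglik(kappa, theta, mu0, tau):
    """Log of likelihood (9), dropping the constant -n*log(2*pi) - log I0(tau)."""
    C, S = np.cos(theta).sum(), np.sin(theta).sum()
    r_kappa = np.hypot(kappa * C + tau * np.cos(mu0), kappa * S + tau * np.sin(mu0))
    return log_i0(r_kappa) - len(theta) * log_i0(kappa)


def kappa_semi_bayes(theta, mu0, tau, upper=500.0):
    """Semi-Bayesian MLE: maximize (9) over kappa in (1e-8, upper)."""
    res = minimize_scalar(lambda k: -semi_bayes_loglik(k, theta, mu0, tau),
                          bounds=(1e-8, upper), method="bounded")
    return res.x


def A(x):
    return i1e(x) / i0e(x)


def kappa_known_mean(theta, mu):
    """Known-mean MLE, for comparison only (assumes the mean cosine lies in (0, 1))."""
    t = np.mean(np.cos(theta - mu))
    return brentq(lambda k: A(k) - t, 0.0, max(10.0, 10.0 / (1.0 - t)))


if __name__ == "__main__":
    rng = np.random.default_rng(2)
    theta = rng.vonmises(0.5, 2.0, size=200)
    print("tau = 0  :", kappa_semi_bayes(theta, mu0=0.5, tau=0.0))
    print("tau = 10 :", kappa_semi_bayes(theta, mu0=0.5, tau=10.0))
    print("tau = 1e6:", kappa_semi_bayes(theta, mu0=0.5, tau=1e6))
    print("known mu :", kappa_known_mean(theta, 0.5))
```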

One interesting comparison is between the frequentist MLE of κ in (2) and the semi-Bayesian MLE obtained from (9) using a circular uniform prior distribution on µ, i.e., setting τ = 0. In some cases, placing uniform priors results in Bayes estimates that are similar to classical MLEs. Using a circular uniform prior on µ in (9), we derive the Fisher information to find the variance of our semi-Bayesian MLE. From (9) with a circular uniform prior (τ = 0, so that $I_0(\tau)=1$ and the argument of $I_0$ in the numerator reduces to $\kappa R$), the log-likelihood is

$$\ell(\kappa)=\log I_0(\kappa R)-n\log I_0(\kappa)-n\log(2\pi),\tag{10}$$

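and the semi-Bayesian MLE of κ is the solution of $\ell'(\kappa)=0$, where, using $I_0'=I_1$ and writing $A(x)=I_1(x)/I_0(x)$,

$$\ell'(\kappa)=R\,A(\kappa R)-n\,A(\kappa).\tag{11}$$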

The semi-Bayesian MLE $\hat\kappa_B$ in this case is therefore found by solving

$$A(\hat\kappa_B)=\bar R\,A(n\bar R\,\hat\kappa_B).\tag{12}$$

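We immediately notice a difference when comparing (12) with (2): because $A(n\bar R\hat\kappa_B)<1$, we have $A(\hat\kappa_B)<\bar R=A(\hat\kappa_{MLE})$, and since $A$ is increasing, $\hat\kappa_B<\hat\kappa_{MLE}$. Taking another derivative of (11), we obtain the Hessian, where $A'(x)=1-A(x)/x-A(x)^2$: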

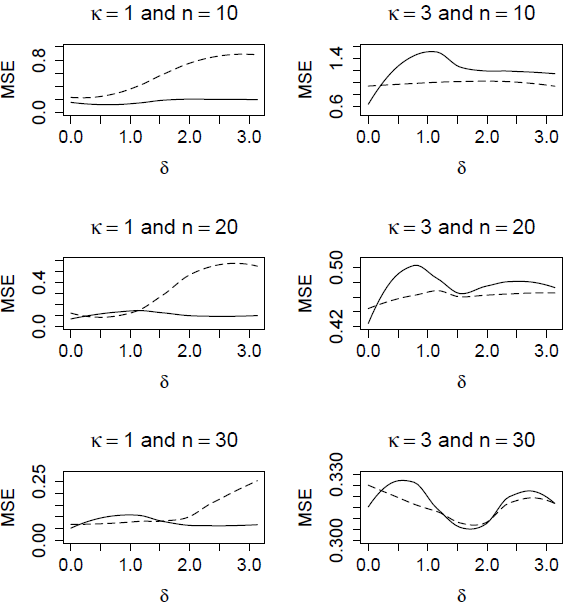

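$$\ell''(\kappa)=R^2A'(\kappa R)-nA'(\kappa),\tag{13}$$

$$A'(x)=\frac{d}{dx}\frac{I_1(x)}{I_0(x)}=1-\frac{A(x)}{x}-A(x)^2,\tag{14}$$

$$\ell''(\kappa)=R^2\Big[1-\frac{A(\kappa R)}{\kappa R}-A(\kappa R)^2\Big]-n\Big[1-\frac{A(\kappa)}{\kappa}-A(\kappa)^2\Big].\tag{15}$$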

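Then the (observed) Fisher information $I$ is given by

$$I(\kappa)=-\ell''(\kappa)=nA'(\kappa)-R^2A'(\kappa R),\tag{16}$$

and, substituting the semi-Bayes MLE, the asymptotic variance $V$ of $\hat\kappa_B$ is

$$V(\hat\kappa_B)\approx\frac{1}{I(\hat\kappa_B)}=\frac{1}{nA'(\hat\kappa_B)-n^2\bar R^2A'(n\bar R\,\hat\kappa_B)}.\tag{17}$$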
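Under the circular uniform prior, (12), (16) and (17) can be evaluated directly. The sketch below, assuming NumPy/SciPy and hypothetical helper names, solves (12) with `brentq`, evaluates the observed information (16) using $A'$ from (14), and forms large-sample intervals; for comparison it also uses the standard Fisher information $nA'(\kappa)$ of the usual MLE. Near κ = 0 the two sides of (12) behave like $\kappa/2$ and $n\bar R^2\kappa/2$, so the sketch treats $n\bar R^2\le 1$ as giving the boundary estimate 0; that cut-off is our assumption.

```python
import numpy as np
from scipy.optimize import brentq
from scipy.special import i0e, i1e
from scipy.stats import norm

# Illustrative sketch; helper names are hypothetical.


def A(x):
    return i1e(x) / i0e(x)


def A_prime(x):
    """Equation (14): A'(x) = 1 - A(x)/x - A(x)**2 (x > 0)."""
    a = A(x)
    return 1.0 - a / x - a * a


def kappa_mle(rbar):
    """Usual MLE (2): A(k) = rbar, for 0 < rbar < 1."""
    return brentq(lambda k: A(k) - rbar, 0.0, max(10.0, 10.0 / (1.0 - rbar)))


def kappa_semi_bayes_uniform(rbar, n):
    """Equation (12): A(k) = rbar * A(n*rbar*k); 0 if n*rbar**2 <= 1 (assumed boundary case)."""
    if n * rbar**2 <= 1.0:
        return 0.0
    g = lambda k: A(k) - rbar * A(n * rbar * k)
    return brentq(g, 1e-6, max(1e3, 10.0 / (1.0 - rbar)))


def observed_information(k, rbar, n):
    """Equation (16): I(k) = n A'(k) - R^2 A'(k R), with R = n * rbar."""
    R = n * rbar
    return n * A_prime(k) - R**2 * A_prime(k * R)


def wald_interval(estimate, variance, alpha=0.05):
    """Large-sample interval estimate +/- z_{alpha/2} * sqrt(variance)."""
    half = norm.ppf(1.0 - alpha / 2.0) * np.sqrt(variance)
    return estimate - half, estimate + half


if __name__ == "__main__":
    n, rbar = 20, 0.7                    # hypothetical sample summary
    k_b = kappa_semi_bayes_uniform(rbar, n)
    k_m = kappa_mle(rbar)
    print("semi-Bayes:", k_b, wald_interval(k_b, 1.0 / observed_information(k_b, rbar, n)))
    print("MLE       :", k_m, wald_interval(k_m, 1.0 / (n * A_prime(k_m))))
```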

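Therefore the asymptotic variance of $\hat\kappa_B$ can be found using (17). Next, we can compare the two MLEs via their respective large-sample confidence intervals. The $100(1-\alpha)\%$ confidence interval for κ based on $\hat\kappa_B$ is given by

$$\hat\kappa_B\pm z_{\alpha/2}\sqrt{V(\hat\kappa_B)},$$

where $z_{\alpha/2}$ is the upper $\alpha/2$ quantile of the standard Normal distribution; the interval based on $\hat\kappa_{MLE}$ is formed analogously using its Fisher information $nA'(\hat\kappa_{MLE})$.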

Figure 1 displays histograms of $\hat\kappa_{MLE}$ and $\hat\kappa_B$ based on 1000 simulations from the CND for several combinations of κ and sample size n. In each setting the histograms of the estimated values are nearly identical for $\hat\kappa_{MLE}$ and $\hat\kappa_B$.

Figure 1 Histograms of $\hat\kappa_{MLE}$ (MLE) and $\hat\kappa_B$ (Bayes) with a circular uniform prior for 1000 simulations from CND(µ,κ).
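The comparison behind Figure 1 can be outlined with the sketch below, which draws CND samples with NumPy's `Generator.vonmises` and computes both estimators for each replicate. The settings (µ = 0, κ = 2, n = 20, 200 replicates) are hypothetical choices, not those used for the figure, and the helpers repeat the root-finding for (2) and (12) so the block runs on its own. Because $\hat\kappa_B<\hat\kappa_{MLE}$ whenever the former is positive, we would expect the two histograms to differ mainly by a small shift.

```python
import numpy as np
from scipy.optimize import brentq
from scipy.special import i0e, i1e

# Illustrative sketch; settings and helper names are hypothetical.


def A(x):
    return i1e(x) / i0e(x)


def kappa_mle(rbar):
    return brentq(lambda k: A(k) - rbar, 0.0, max(10.0, 10.0 / (1.0 - rbar)))


def kappa_semi_bayes_uniform(rbar, n):
    if n * rbar**2 <= 1.0:          # assumed boundary case: estimate 0
        return 0.0
    return brentq(lambda k: A(k) - rbar * A(n * rbar * k), 1e-6, max(1e3, 10.0 / (1.0 - rbar)))


def simulate(mu, kappa, n, reps, seed=0):
    """Return arrays of kappa_MLE and kappa_B (uniform prior) over `reps` CND(mu, kappa) samples."""
    rng = np.random.default_rng(seed)
    mle, bayes = np.empty(reps), np.empty(reps)
    for r in range(reps):
        theta = rng.vonmises(mu, kappa, size=n)
        rbar = np.hypot(np.cos(theta).mean(), np.sin(theta).mean())
        mle[r] = kappa_mle(rbar)
        bayes[r] = kappa_semi_bayes_uniform(rbar, n)
    return mle, bayes


if __name__ == "__main__":
    mle, bayes = simulate(mu=0.0, kappa=2.0, n=20, reps=200)
    print("mean, sd of kappa_MLE:", mle.mean(), mle.std())
    print("mean, sd of kappa_B  :", bayes.mean(), bayes.std())
```

The two arrays can then be passed to any histogram routine to produce plots of the kind shown in Figure 1.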

Preliminary test estimators

A preliminary test estimator (PTE) is a method of estimation that introduces sample-based prior information, via a hypothesis test on the nuisance parameter, to aid in estimating the parameter of interest.1 If we fail to reject the null hypothesis, we use an estimator evaluated at the null hypothesis value. If we reject the null hypothesis, we use an estimator based directly on the sample, the usual MLE. The parameter value in the null hypothesis represents our prior knowledge. The idea is that when the true parameter value is at or near the null hypothesis value, the PTE will provide a better estimator in terms of mean squared error (MSE), or any other risk function.

We observe data from a CND with unknown mean direction and concentration parameter. We are interested in estimating the concentration parameter, with the mean direction being a nuisance parameter. Our preliminary test has the null hypothesis that the mean direction equals a pre-specified direction, versus a two-sided alternative.

Our PTE for the concentration parameter can perform better than the usual MLE and Bayes estimates, particularly when the true mean direction is at or near the hypothesized value. The result is similar to the linear case of a normal distribution with unknown mean and variance.10 This methodology can be used to improve estimation accuracy in many existing applications, since the CND is one of the most commonly used distributions in circular statistics.

Test for assumed mean direction

Suppose we have observations $\theta_1,\dots,\theta_n$ from a CND with both mean direction and concentration parameter unknown. We want to test:

$$H_0:\ \mu=\mu_0\qquad\text{versus}\qquad H_1:\ \mu\neq\mu_0.\tag{18}$$

In the linear case with data from a Normal distribution, this parallels the standard Student’s t-test. Following Jammalamadaka SR,9 the likelihood ratio test (LRT) is based on the test statistic:

(19)

where we reject the null hypothesis for small values of the test statistic. Note that the distributions of $C_{\mu_0}=\sum_{i}\cos(\theta_i-\mu_0)$ and $R$ depend on the nuisance parameter κ. However, an exact conditional test for the mean direction of the CN distribution can be obtained by using the conditional distribution of $R$ given $C_{\mu_0}$, which does not depend on κ. Here $C_{\mu_0}$ is the length of the projection of the sample resultant vector, whose length is $R$, onto the null hypothesized mean direction $\mu_0$. In the conditional test we reject the null if $C_{\mu_0}$ is too small for a given $R$, or equivalently, if $R$ is too large for a given $C_{\mu_0}$.

To illustrate the geometry of the test, suppose we have the polar vector given by the null hypothesis, $(\cos\mu_0,\sin\mu_0)$. Next, with n observations we calculate the length of the projection, $C_{\mu_0}$, of the sample resultant vector on the polar vector. Conditioning on the value of $C_{\mu_0}$, we find the probability, when the null direction is true, of observing our sample resultant length $R$ or larger values.

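The set $\{(C,S):C_{\mu_0}=c\}$ consists of the sample resultant vectors that have projection length c on the polar vector. Suppose $\mathbf{R}_1$ and $\mathbf{R}_2$ are two resultant vectors with equal projection length c and $\|\mathbf{R}_1\|>\|\mathbf{R}_2\|$. Then the direction of $\mathbf{R}_1$ is further away from $\mu_0$ than the direction of $\mathbf{R}_2$.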
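The projection and the geometric remark above can be checked numerically. In the sketch below (NumPy only; function names are ours), $C_{\mu_0}$ is computed as the component of the resultant $(C,S)$ along the polar vector $(\cos\mu_0,\sin\mu_0)$, and the angle between the resultant and $\mu_0$ is $\arccos(C_{\mu_0}/R)$; for a fixed projection $c$, a longer resultant therefore points further from $\mu_0$.

```python
import numpy as np

# Illustrative sketch; function names are hypothetical.


def projection_and_length(theta, mu0):
    """Return (C_mu0, R): projection of the resultant on mu0, and the resultant length."""
    C, S = np.cos(theta).sum(), np.sin(theta).sum()
    c_mu0 = C * np.cos(mu0) + S * np.sin(mu0)   # equals sum(cos(theta_i - mu0))
    return c_mu0, np.hypot(C, S)


def angle_from_null(c, length):
    """Angle between a resultant of given length and the polar vector, given projection c."""
    return np.arccos(np.clip(c / length, -1.0, 1.0))


if __name__ == "__main__":
    theta = np.array([0.2, 0.5, 0.9, 1.1, 1.4])
    c, R = projection_and_length(theta, mu0=0.0)
    print(c, R, angle_from_null(c, R))
    # Two resultants with the same projection c = 3: the longer one is further from mu0.
    print(angle_from_null(3.0, 4.0) < angle_from_null(3.0, 5.0))   # True
```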

For significance level γ, we find the rejection region via the exact conditional distribution of $R$ given $C_{\mu_0}=c$. That is, the critical value $r_\gamma$ is the solution of

$$P_{H_0}\big(R\ge r_\gamma\mid C_{\mu_0}=c\big)=\gamma.\tag{20}$$

As shown by Jammalamadaka SR,9 this critical point is the solution to:

(21)

where we solve for the critical value for given values of c and γ. Expressions for the functions appearing in (21) can be found in reference 9. There is no analytical solution in this case, and reference 11 provides a table of rejection regions for various parameter values. To simplify our hypothesis test, we use results from reference 12, where approximate confidence intervals for the mean direction are provided. Our test derived from the approximate LRT is broken into two cases:

In the first case, we reject $H_0$ if:

(22)

where $z$ denotes the corresponding upper quantile of the standard Normal distribution.

In the second case, we reject $H_0$ if:

(23)

These approximations hold well even for small sample sizes when the concentration is high.
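Equations (22) and (23) are not reproduced here, so the sketch below uses a different, simpler large-sample approximation as a stand-in: with κ known, the likelihood ratio statistic for $H_0:\mu=\mu_0$ is $2\kappa(R-C_{\mu_0})$, asymptotically $\chi^2_1$ under $H_0$, and we plug in $\hat\kappa_{MLE}$ for κ. This is our assumption for illustration, not the authors' test, and it should not be expected to match (22) and (23) in small samples. Function names are hypothetical.

```python
import numpy as np
from scipy.optimize import brentq
from scipy.special import i0e, i1e
from scipy.stats import chi2

# Illustrative sketch; the test below is an assumed stand-in for (22) and (23).


def A(x):
    return i1e(x) / i0e(x)


def A_inv(t):
    """Solve A(k) = t; 0 for t <= 0."""
    if t <= 0.0:
        return 0.0
    return brentq(lambda k: A(k) - t, 0.0, max(10.0, 10.0 / (1.0 - t)))


def approx_mean_direction_test(theta, mu0, gamma):
    """Stand-in test of H0: mu = mu0 (NOT equations (22)-(23)).

    Rejects when 2 * kappa_hat * (R - C_mu0) exceeds the upper-gamma chi-square(1) quantile.
    Returns (reject, statistic).
    """
    n = len(theta)
    C, S = np.cos(theta).sum(), np.sin(theta).sum()
    R = np.hypot(C, S)
    c_mu0 = C * np.cos(mu0) + S * np.sin(mu0)
    stat = 2.0 * A_inv(R / n) * (R - c_mu0)
    return stat > chi2.ppf(1.0 - gamma, df=1), stat


if __name__ == "__main__":
    offsets = np.array([-1.0, -0.5, 0.0, 0.5, 1.0])
    print(approx_mean_direction_test(1.0 + 0.1 + offsets, mu0=1.0, gamma=0.05))        # close to mu0
    print(approx_mean_direction_test(1.0 + np.pi / 2 + offsets, mu0=1.0, gamma=0.05))  # far from mu0
```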

The PTE for the concentration parameter

Now we introduce our PTE for estimating the concentration parameter, where the mean direction is a nuisance parameter. Given observations $\theta_1,\dots,\theta_n$ with unknown mean direction and concentration parameter, we test our null hypothesized mean direction $\mu_0$ via the aforementioned hypothesis test. Our PTE is given by:

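(i) In the first case,

$$\hat\kappa_{PTE}=\hat\kappa_{\mu_0}\,I\{H_0\text{ is not rejected by (22)}\}+\hat\kappa_{MLE}\,I\{H_0\text{ is rejected by (22)}\},\tag{24}$$

where $I\{\cdot\}$ is the indicator function and the rejection region is obtained from (22).

(ii) In the second case,

$$\hat\kappa_{PTE}=\hat\kappa_{\mu_0}\,I\{H_0\text{ is not rejected by (23)}\}+\hat\kappa_{MLE}\,I\{H_0\text{ is rejected by (23)}\},\tag{25}$$

where the χ2 critical value is found using (23).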

We break the estimator into two cases according to our hypothesis test. In either case the PTE selects only one of the two estimators, $\hat\kappa_{\mu_0}$ or $\hat\kappa_{MLE}$, according to the result of the test. The performance of the PTE depends on the level of the test and on the proximity of the true mean direction to the null hypothesized value. We measure performance in terms of the mean squared error (MSE) of our estimator over different significance levels γ and different true differences between the mean directions, $\delta=\mu-\mu_0$.
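Putting the pieces together, the sketch below returns $\hat\kappa_{PTE}$ in the spirit of (24) and (25), choosing $\hat\kappa_{\mu_0}$ when the preliminary test does not reject and $\hat\kappa_{MLE}$ otherwise. As an assumption for illustration, the preliminary test is the plug-in $\chi^2_1$ likelihood-ratio approximation $2\hat\kappa_{MLE}(R-C_{\mu_0})$ rather than (22) and (23); names are hypothetical.

```python
import numpy as np
from scipy.optimize import brentq
from scipy.special import i0e, i1e
from scipy.stats import chi2

# Illustrative sketch; helper names are hypothetical and the test is an assumed stand-in.


def A(x):
    return i1e(x) / i0e(x)


def A_inv(t):
    if t <= 0.0:
        return 0.0
    return brentq(lambda k: A(k) - t, 0.0, max(10.0, 10.0 / (1.0 - t)))


def kappa_pte(theta, mu0, gamma):
    """Preliminary test estimator of kappa; returns (estimate, rejected)."""
    n = len(theta)
    C, S = np.cos(theta).sum(), np.sin(theta).sum()
    R = np.hypot(C, S)
    c_mu0 = C * np.cos(mu0) + S * np.sin(mu0)
    k_mle = A_inv(R / n)              # mean direction estimated from the sample
    k_mu0 = A_inv(c_mu0 / n)          # mean direction fixed at the null value mu0
    rejected = 2.0 * k_mle * (R - c_mu0) > chi2.ppf(1.0 - gamma, df=1)  # assumed stand-in test
    return (k_mle if rejected else k_mu0), rejected


if __name__ == "__main__":
    rng = np.random.default_rng(3)
    theta = rng.vonmises(0.2, 2.0, size=20)   # true delta = 0.2 away from mu0 = 0
    for gamma in (0.01, 0.05, 0.25):
        print(gamma, kappa_pte(theta, mu0=0.0, gamma=gamma))
```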

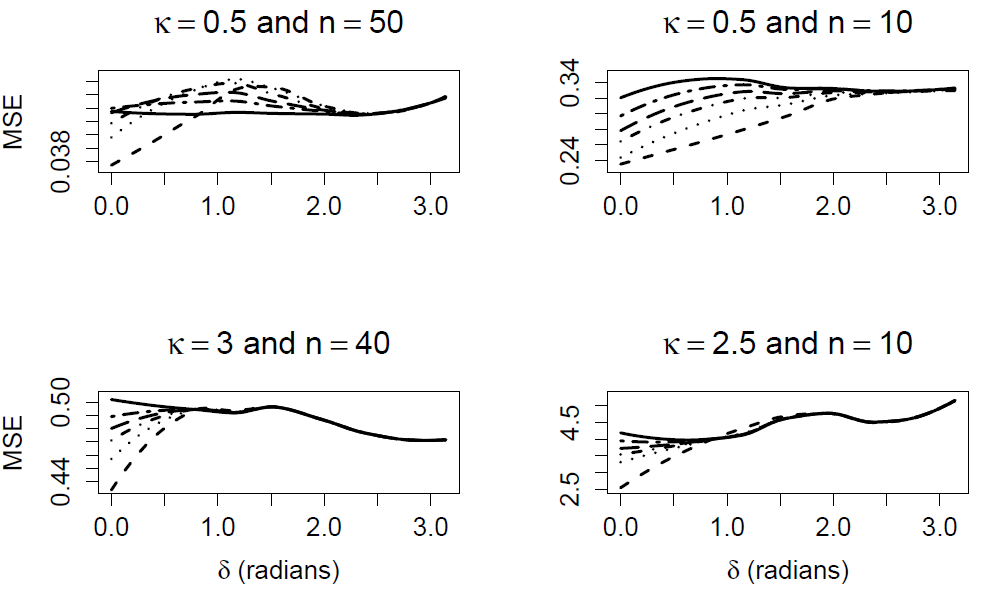

In Figure 2, we show the simulation-based MSE of the PTE and the MLE of the concentration parameter. For each $\delta_j$, $j=1,\dots,50$, where the $\delta_j$ are 50 equally spaced points between 0 and π, we perform 1000 simulations from CND($\delta_j,\kappa$) and record the MSE. Each line represents the MSE of an estimator over values of δ, where δ is the true difference between the population mean direction and the null hypothesized mean direction.
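A vectorized sketch of this simulation, assuming NumPy/SciPy, $\mu_0=0$ (so that data from CND(δ, κ) have true difference δ), and the plug-in $\chi^2_1$ stand-in for the preliminary test rather than (22) and (23). To keep thousands of replicates fast, $A^{-1}$ is obtained by linear interpolation in a precomputed table of $A$ on a log-spaced κ grid (valid because $A$ is increasing; estimates are capped at the grid maximum κ = 10⁴). Grid size, seed and function names are our choices.

```python
import numpy as np
from scipy.special import i0e, i1e
from scipy.stats import chi2

# Illustrative sketch; mu0 = 0, the grid and the stand-in test are assumptions.
# Tabulate A(k) = I1(k)/I0(k) once; A is increasing, so np.interp inverts it.
_K_GRID = np.concatenate(([0.0], np.geomspace(1e-6, 1e4, 100_001)))
_A_GRID = i1e(_K_GRID) / i0e(_K_GRID)


def A_inv(t):
    """Vectorized solution of A(k) = t (0 for t <= 0, capped at k = 1e4)."""
    return np.interp(np.clip(t, 0.0, None), _A_GRID, _K_GRID)


def mse_curves(n, kappa, gamma, deltas, reps=1000, seed=0):
    """Simulated MSE of kappa_MLE and kappa_PTE for H0: mu = 0, data ~ CND(delta, kappa)."""
    rng = np.random.default_rng(seed)
    crit = chi2.ppf(1.0 - gamma, df=1)
    mse_mle, mse_pte = np.empty(len(deltas)), np.empty(len(deltas))
    for j, delta in enumerate(deltas):
        theta = rng.vonmises(delta, kappa, size=(reps, n))   # one row per replicate
        C = np.cos(theta).sum(axis=1)                        # also the projection on mu0 = 0
        S = np.sin(theta).sum(axis=1)
        R = np.hypot(C, S)
        k_mle = A_inv(R / n)
        k_mu0 = A_inv(C / n)
        reject = 2.0 * k_mle * (R - C) > crit                # assumed stand-in test
        k_pte = np.where(reject, k_mle, k_mu0)
        mse_mle[j] = np.mean((k_mle - kappa) ** 2)
        mse_pte[j] = np.mean((k_pte - kappa) ** 2)
    return mse_mle, mse_pte


if __name__ == "__main__":
    deltas = np.linspace(0.0, np.pi, 50)                     # 50 equally spaced points in [0, pi]
    mle, pte = mse_curves(n=20, kappa=2.0, gamma=0.05, deltas=deltas)
    for d, a, b in zip(deltas[::7], mle[::7], pte[::7]):
        print(f"delta={d:.2f}  MSE_MLE={a:.3f}  MSE_PTE={b:.3f}")
```

Setting γ = 1 makes the stand-in test reject almost surely, so the PTE curve then coincides with the MLE curve, which is a convenient check.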

For sufficiently large significance levels the PTE performs at least as well as the MLE, and it performs better when the true mean direction is closer to the null hypothesized value. For larger significance levels the test requires less evidence to reject the null hypothesis, and when we reject the null the PTE is equivalent to the MLE, $\hat\kappa_{MLE}$. In Figure 2, we observe that as the significance level increases, the PTE is more likely to use $\hat\kappa_{MLE}$ for smaller values of δ. To quantify the improvement offered by our PTE, we examine the mean-square relative efficiency (MRE) of the two estimators, PTE and MLE, defined by

$$\mathrm{MRE}(\hat\kappa_{PTE}:\hat\kappa_{MLE})=\frac{\mathrm{MSE}(\hat\kappa_{MLE})}{\mathrm{MSE}(\hat\kappa_{PTE})}.\tag{26}$$

Values larger than unity imply that the PTE performs better than the MLE. In Figure 3, we show the MRE of the PTE relative to the MLE across all values of δ. The relative efficiency is greater than 1 for all δ less than approximately 0.65 radians. In this example, the PTE can reduce the MSE by 20% when the true difference in mean directions is small. For intermediate values of δ, the MRE is less than 1, implying that the MLE has the smaller MSE. This is due to our preliminary test failing to reject the null hypothesis. For large values of δ, the preliminary test almost always rejects the null hypothesis value and the PTE coincides with the MLE, so the MRE equals one.
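Given two MSE curves on a common δ grid, (26) and the quantities quoted in this paragraph reduce to a few lines. In this sketch (NumPy; hypothetical helper names and illustrative numbers), an MRE of $E$ corresponds to an MSE reduction of $1-1/E$, so a 20% reduction corresponds to an MRE of 1.25; `last_delta_above_one` reports the largest grid value of δ, starting from 0, up to which the MRE stays above 1.

```python
import numpy as np

# Illustrative sketch; helper names and the numbers below are hypothetical.


def mre(mse_mle, mse_pte):
    """Equation (26): MSE(kappa_MLE) / MSE(kappa_PTE); values above 1 favour the PTE."""
    return np.asarray(mse_mle, dtype=float) / np.asarray(mse_pte, dtype=float)


def mse_reduction(mre_value):
    """Fractional MSE reduction of the PTE relative to the MLE implied by an MRE value."""
    return 1.0 - 1.0 / mre_value


def last_delta_above_one(deltas, mre_values):
    """Largest grid delta such that MRE > 1 on [0, delta]; None if MRE(0) <= 1."""
    mre_values = np.asarray(mre_values)
    not_above = np.nonzero(mre_values <= 1.0)[0]
    if not_above.size == 0:
        return float(deltas[-1])
    if not_above[0] == 0:
        return None
    return float(deltas[not_above[0] - 1])


if __name__ == "__main__":
    deltas = [0.0, 0.25, 0.5, 0.75, 1.0]
    e = mre([1.2, 1.2, 1.2, 1.2, 1.2], [1.0, 1.05, 1.15, 1.3, 1.2])
    print(e, last_delta_above_one(deltas, e), mse_reduction(1.25))
```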

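In Figure 3, the MRE is maximal at δ = 0, and for large enough δ, when the PTE almost always rejects the null, the MRE is equal to one. For intermediate δ the PTE may reject or fail to reject the null hypothesis depending on the sample observed. When it fails to reject, it uses $\hat\kappa_{\mu_0}$, which is computed at $\mu_0$ rather than at the population mean direction µ; since $\frac{1}{n}\sum_i\cos(\theta_i-\mu_0)\le\bar R$ (compare (3)), $\hat\kappa_{\mu_0}$ can be no larger than $\hat\kappa_{MLE}$. The PTE therefore gains when $\hat\kappa_{\mu_0}$ is closer to κ than $\hat\kappa_{MLE}$ is, and loses when $\hat\kappa_{MLE}$ is closer to κ than $\hat\kappa_{\mu_0}$ is. If the latter case occurs more often than the former for some intermediate values of δ, then the MRE will be less than 1.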

In Figure 2, we compare the MLE with PTEs at several significance levels. The PTE with the smallest significance level has the best results for smaller values of δ, but it can perform worse than the MLE for intermediate values of δ. PTEs with larger significance levels perform at least as well as the MLE. We now illustrate other possible scenarios and the corresponding performance of the PTE.

In Figure 4, we simulate from four different scenarios and examine the performance of our PTE for the same significance levels as in Figure 2; the lines have the same labels as in Figure 2. Each plot shows simulation-based MSEs for each line. For each $\delta_j$, $j=1,\dots,50$, where the $\delta_j$ are 50 equally spaced points between 0 and π, we perform 1000 simulations and record the MSE, which creates the MSE curve over δ for each scenario.

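Figure 4 Simulation-based comparison of PTE performances for sample sizes n = 50, 10, 40 and 10 and concentration parameters κ = 0.5, 0.5, 3 and 2.5 (top-left, top-right, bottom-left and bottom-right panels, respectively). Lines are labeled as in Figure 2.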

First, note that in all scenarios the PTE with significance level γ = 0.01 performs best when the true difference in mean direction is null or small. In the top-left plot we have n = 50 simulated observations from CN(δ,κ = 0.5); in the top-right plot, n = 10 simulated observations from CN(δ,κ = 0.5); in the bottom-left plot, n = 40 simulated observations from CN(δ,κ = 3); and in the bottom-right plot, n = 10 simulated observations from CN(δ,κ = 2.5).

In the top-right plot, all of the PTEs in this simulation performed uniformly better (over δ) than $\hat\kappa_{MLE}$. In the remaining three plots there are values of δ where $\hat\kappa_{MLE}$ performs better. This occurs when our preliminary test fails to reject the null hypothesis for intermediate values of δ. The difference is most obvious when the sample size is large and κ is small, as in the top-left plot, where the PTE's MSE increases for intermediate values of δ at relatively small significance levels. There is a similar pattern in the bottom two plots. This pattern is to be expected, since a smaller significance level requires more evidence to reject the null hypothesis of the preliminary test.

In applications, the values of δ and κ are unknown. So how do we select the optimal significance level given n observations from CN(µ, κ)? Following the work of Saleh AME,1 we create tables of the maximum and minimum MREs of the PTE to guide this choice.

Tables were constructed through simulations. Given a sample size n and a value of κ, we generate values from a CN(δ,κ) distribution to estimate the MRE over a grid of γ and δ values, where 0 ≤ δ ≤ π. For each γ, we compute the maximum MRE, $E_{\max}$, and the minimum MRE, $E_{\min}$, over all δ, and record the δ at which $E_{\min}$ is located, $\Delta_{\min}$. In almost all cases the maximum MRE is located at δ = 0, and the function MRE(δ) is monotone decreasing from δ = 0 to $\Delta_{\min}$. For values δ > $\Delta_{\min}$, MRE(δ) increases back to unity, since the PTE rejects the null hypothesized value for larger δ. We then repeated this procedure for different values of κ.
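For one (n, κ, γ) combination, the table entries $E_{\max}$, $E_{\min}$ and $\Delta_{\min}$ are simple summaries of the simulated MRE curve. A minimal sketch (NumPy; the function name and the toy curve are hypothetical):

```python
import numpy as np

# Illustrative sketch; efficiency_summary and the toy curve are hypothetical.


def efficiency_summary(deltas, mre_values):
    """Return (E_max, E_min, Delta_min) for an MRE curve evaluated on a delta grid."""
    deltas = np.asarray(deltas, dtype=float)
    mre_values = np.asarray(mre_values, dtype=float)
    i_min = int(np.argmin(mre_values))
    return float(mre_values.max()), float(mre_values[i_min]), float(deltas[i_min])


if __name__ == "__main__":
    deltas = np.linspace(0.0, np.pi, 7)
    toy_mre = [1.30, 1.10, 0.90, 0.85, 0.95, 1.00, 1.00]   # typical shape: peak at 0, dip, back to 1
    print(efficiency_summary(deltas, toy_mre))             # Delta_min is pi/2 here
```

Repeating this over a grid of γ values, with MRE curves produced by a simulation of the kind described for Figure 2, fills one column of a table like Table 1.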

The mean resultant length $\bar R=R/n$ is the normalized length of the resultant vector; since $0\le\bar R\le 1$, it is a measure of concentration for a sample of observations. A value close to 1 implies high concentration, and a value close to 0 implies little to no concentration around any single direction. This statistic does not depend on knowledge of κ or of the mean direction of the distribution. For the CND, the population mean resultant length $A(\kappa)=I_1(\kappa)/I_0(\kappa)$ is a one-to-one function of κ. Given a sample size n and κ, we record the average of $\bar R$ over our simulations and use this average as an indication of the strength of concentration. In practice, we advise the user to compute the observed $\bar R$ of the n observations and then use the column of the table with the nearest value.
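The recommended lookup is easy to script. The sketch below (NumPy; names are hypothetical) computes the observed $\bar R$ and returns the index of the nearest column among the $\bar R$ values heading Table 1 for n = 20.

```python
import numpy as np

# Illustrative sketch; names are hypothetical.
# Column headings (average R_bar) of Table 1, n = 20.
TABLE1_RBAR = np.array([0.196, 0.198, 0.202, 0.471, 0.617, 0.710, 0.779, 0.818, 0.871, 0.900])


def mean_resultant_length(theta):
    """R_bar = R / n, always in [0, 1]."""
    theta = np.asarray(theta, dtype=float)
    return float(np.hypot(np.cos(theta).mean(), np.sin(theta).mean()))


def nearest_column(rbar, columns=TABLE1_RBAR):
    """0-based index of the table column whose R_bar heading is closest to the observed value."""
    return int(np.argmin(np.abs(columns - rbar)))


if __name__ == "__main__":
    rng = np.random.default_rng(4)
    theta = rng.vonmises(0.0, 2.5, size=20)
    rbar = mean_resultant_length(theta)
    j = nearest_column(rbar)
    print(f"R_bar = {rbar:.3f}; use the column headed {TABLE1_RBAR[j]}")
```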

In Table 1, we provide a list of potential PTEs for n = 20. The rows list significance levels γ for the PTE ranging from 1% to 50%. The columns list the different average values of $\bar R$. Suppose we have a sample of 20 observations and observe $\bar R$ close to 0.779. Following the procedure in Saleh AME,1 we then decide on the minimum preferred MRE, $E_0$. Then, using Table 1, the optimal PTE corresponds to the significance level whose $E_{\min}$ is at least $E_0$ and whose $E_{\max}$ is largest. Upon request, we provide tables for various sample sizes; the tables require only knowledge of the sample size, $\bar R$, and the predetermined $E_0$.

| γ | Measure | 0.196 | 0.198 | 0.202 | 0.471 | 0.617 | 0.710 | 0.779 | 0.818 | 0.871 | 0.900 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.01 | Emax | 1.016 | 1.027 | 1.676 | 1.38 | 1.244 | 1.075 | 1.081 | 1.116 | 1.033 | 1.018 |
| | Emin | 1.008 | 1.015 | 0.995 | 0.688 | 0.695 | 0.869 | 0.928 | 0.951 | 0.936 | 0.915 |
| | ∆min | 0 | 2.949 | 2.757 | 1.154 | 0.833 | 0.769 | 0.769 | 0.833 | 0.705 | 0.449 |
| 0.02 | Emax | 1.055 | 1.103 | 1.555 | 1.231 | 1.105 | 1.049 | 1.07 | 1.118 | 1.048 | 1.012 |
| | Emin | 1.043 | 1.046 | 0.993 | 0.689 | 0.807 | 0.921 | 0.961 | 0.98 | 0.968 | 0.95 |
| | ∆min | 0 | 3.142 | 2.629 | 1.09 | 0.769 | 0.769 | 0.769 | 0.833 | 0.769 | 0.449 |
| 0.05 | Emax | 1.127 | 1.228 | 1.313 | 1.08 | 1.02 | 1.031 | 1.06 | 1.111 | 1.061 | 1.015 |
| | Emin | 1.116 | 1.102 | 0.991 | 0.743 | 0.924 | 0.968 | 0.988 | 0.995 | 0.988 | 0.983 |
| | ∆min | 0 | 3.142 | 2.5 | 0.962 | 0.769 | 0.769 | 0.833 | 0.898 | 0.833 | 0.513 |
| 0.1 | Emax | 1.138 | 1.246 | 1.202 | 1.012 | 1.004 | 1.017 | 1.042 | 1.08 | 1.051 | 1.006 |
| | Emin | n/a | n/a | n/a | n/a | n/a | n/a | n/a | n/a | n/a | n/a |
| | ∆min | 0 | 3.142 | 1.795 | 0.833 | 0.705 | 0.769 | 0.833 | 0.898 | 0.833 | 0.641 |
| 0.15 | Emax | 1.103 | 1.195 | 1.139 | 1.007 | 1.002 | 1.02 | 1.031 | 1.066 | 1.044 | 1.007 |
| | Emin | 1.099 | 1.076 | 0.975 | 0.889 | 0.979 | 0.994 | 0.997 | 0.997 | 0.997 | 0.997 |
| | ∆min | 3.142 | 3.142 | 1.667 | 0.769 | 0.705 | 0.833 | 0.833 | 0.898 | 0.898 | 0.769 |
| 0.2 | Emax | 1.084 | 1.144 | 1.085 | 1.005 | 1.001 | 1.015 | 1.026 | 1.054 | 1.031 | 1.007 |
| | Emin | 1.076 | 1.06 | 0.968 | 0.935 | 0.987 | 0.996 | 0.998 | 0.998 | 0.998 | 0.998 |
| | ∆min | 1.988 | 3.077 | 1.603 | 0.641 | 0.641 | 0.833 | 0.898 | 0.898 | 0.898 | 0.709 |
| 0.25 | Emax | 1.064 | 1.111 | 1.045 | 1.005 | 1 | 1.017 | 1.018 | 1.046 | 1.023 | 1.006 |
| | Emin | 1.059 | 1.040 | 0.965 | 0.96 | 0.991 | 0.997 | 0.998 | 0.998 | 0.999 | 0.999 |
| | ∆min | 0 | 3.077 | 1.346 | 0.385 | 0.449 | 0.833 | 0.833 | 0.898 | 0.898 | 0.833 |
| 0.3 | Emax | 1.049 | 1.08 | 1.015 | 1.006 | 1 | 1.013 | 1.016 | 1.029 | 1.02 | 1.007 |
| | Emin | 1.046 | 1.032 | 0.963 | 0.971 | 0.995 | 0.998 | 0.999 | 0.999 | 0.999 | 0.999 |
| | ∆min | 0 | 3.142 | 1.346 | 0.128 | 0.577 | 0.833 | 0.833 | 0.898 | 0.898 | 0.833 |
| 0.35 | Emax | 1.036 | 1.059 | 1.002 | 1.004 | 1 | 1.01 | 1.012 | 1.02 | 1.015 | 1.006 |
| | Emin | 1.033 | 1.024 | 0.965 | 0.979 | 0.996 | 0.999 | 0.999 | 0.999 | 0.999 | 1 |
| | ∆min | 1.859 | 3.142 | 1.282 | 0 | 0.449 | 0.833 | 0.898 | 0.962 | 0.898 | 0.833 |
| 0.4 | Emax | 1.026 | 1.044 | 0.992 | 1.002 | 1 | 1.008 | 1.008 | 1.013 | 1.012 | 1.007 |
| | Emin | 1.024 | 1.016 | 0.965 | 0.982 | 0.996 | 0.999 | 1 | 1 | 0.999 | 1 |
| | ∆min | 0 | 3.142 | 1.154 | 0 | 0 | 0.898 | 0.898 | 0.962 | 0.898 | 0.898 |
| 0.45 | Emax | 1.019 | 1.031 | 0.99 | 1.001 | 1 | 1.007 | 1.008 | 1.009 | 1.01 | 1.005 |
| | Emin | 1.017 | 1.011 | 0.969 | 0.987 | 0.998 | 1 | 1 | 1 | 0.999 | 1 |
| | ∆min | 0 | 3.142 | 1.154 | 0 | 0 | 0.898 | 0.962 | 0.898 | 0.898 | 0.898 |
| 0.5 | Emax | 1.015 | 1.023 | 0.993 | 1.001 | 1 | 1.005 | 1.003 | 1.005 | 1.006 | 1.003 |
| | Emin | 1.013 | 1.009 | 0.971 | 0.99 | 0.999 | 1 | 1 | 1 | 1 | 1 |
| | ∆min | 0 | 3.142 | 0.962 | 0 | 0.449 | 0.898 | 0.898 | 0.898 | 0.898 | 1.218 |

Table 1 n = 20: Maximum and minimum guaranteed efficiencies for the PTE. Columns are indexed by the average mean resultant length $\bar R$; ∆min is the value of δ (in radians) at which Emin occurs; n/a = not available.
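The selection rule described before Table 1 can be applied mechanically. The sketch below assumes the max-min rule of reference 1 as we read it: among significance levels whose $E_{\min}$ is at least the chosen $E_0$, pick the one with the largest $E_{\max}$ (ties go to the smaller γ). It hard-codes the $\bar R=0.779$ column of Table 1; the unavailable $E_{\min}$ for γ = 0.1 is stored as NaN and is therefore never selected. The thresholds in the usage lines are hypothetical.

```python
import math

# Illustrative sketch; the max-min rule and the thresholds below are assumptions.
GAMMAS = [0.01, 0.02, 0.05, 0.10, 0.15, 0.20, 0.25, 0.30, 0.35, 0.40, 0.45, 0.50]
# Column R_bar = 0.779 of Table 1 (n = 20); NaN marks the unavailable entry.
EMAX = [1.081, 1.07, 1.06, 1.042, 1.031, 1.026, 1.018, 1.016, 1.012, 1.008, 1.008, 1.003]
EMIN = [0.928, 0.961, 0.988, math.nan, 0.997, 0.998, 0.998, 0.999, 0.999, 1.0, 1.0, 1.0]


def select_gamma(e0, gammas=GAMMAS, emax=EMAX, emin=EMIN):
    """Max-min rule: among levels with E_min >= e0, return the gamma with the largest E_max."""
    # A NaN entry fails the comparison `mn >= e0`, so it is skipped automatically.
    candidates = [(mx, g) for g, mx, mn in zip(gammas, emax, emin) if mn >= e0]
    if not candidates:
        return None
    # max() keeps the first maximal item, i.e. the smallest gamma in case of ties.
    return max(candidates, key=lambda pair: pair[0])[1]


if __name__ == "__main__":
    for e0 in (0.90, 0.95, 0.99):
        print(e0, select_gamma(e0))
```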

Comparison of the PTE and Bayes estimators

Both the PTE and the semi-Bayes estimator use prior information on the mean direction to aid in estimating the concentration parameter. A smaller significance level for the PTE requires stronger evidence to reject the null hypothesized value $\mu_0$, so a smaller significance level may be chosen to reflect a stronger belief in the mean direction $\mu_0$. In the Bayesian setting described earlier in this paper, a larger value of the prior concentration parameter τ focuses the prior distribution around the mean direction $\mu_0$; that is, a larger τ represents a stronger belief in the prior mean direction $\mu_0$.

In Figure 5 we compare the MSE of our PTE with significance level 1% with that of the Bayes estimator with a CN prior centered at the null hypothesis value $\mu_0$. We plot the MSE curve of each estimator over values of δ.

In each plot, the solid line is MSE curve for the PTE and the dashed line is the MSE curve for the Bayes estimator. For κ = 1, performs better overall for all sample sizes. For , performs uniformly better than . For and the estimators have similar performances for small values of , but the MSE for is much larger for large values of .

If κ = 3, we have different results when comparing the MSEs. In all sample sizes of the MSE of is best for small values of . Also, for all sample sizes, has the smaller MSE for the larger values of . In this case for large value of κ, would be the preferred estimator since the performance is better overall.

In reality we do not know the value of κ, so need a data driven way to select versus . If we suspect a high concentration then we suggest to use , and for a weak concentration then use . If given a sample size n, go to the corresponding PTE table for the same sample size. In the table, go to the 7th column which gives the expected under simulations. From your observed sample of size n, calculate R¯ in column 7, and compare to the value from the PTE table. If less than the PTE table value, then use , otherwise use .

In this work we have proposed an estimator for the concentration parameter of a CND with unknown mean direction that can improve on the MLE. In all cases considered, the PTE has better performance in terms of MSE when the true difference δ between the population and hypothesized mean directions is null or small. In addition, for comparison with the PTE, we developed a semi-Bayesian MLE of the concentration parameter based on a prior distribution for the mean direction.

None.

Authors declare that there are no conflicts of interests.

©2017 Nava, et al. This is an open access article distributed under the terms of the, which permits unrestricted use, distribution, and build upon your work non-commercially.
